We previously examined smartphone features that depend on the ambient Correlated Color Temperature (CCT) and are quickly becoming standard across flagship smartphones. In our initial article on CCT Adjustment Technology (CCT), we highlighted a key industry challenge: smartphones handle color rendering very differently depending on ambient light. This lack of consensus results in varied, and sometimes inconsistent, visual outputs across devices and environments.

Our observations led us to another question: “How do smartphones render the reality we see?”

In other words, to what extent do captured photos reflect the real-life scenes they were taken in? And how much of what users perceive comes not only from the camera, but also from the display?

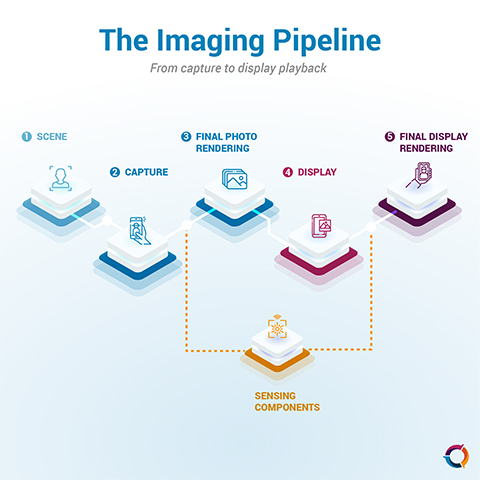

To explore these questions, we designed a set of experiments examining the so-called “glass-to-glass experience”, defined as the full imaging journey from capture through the camera lens and optics (glass) to rendering on the display panel (glass).

The aim of these experiments was to investigate how consistent the smartphone experience is from capture to display.

To do so, we put five flagship smartphones to the test: the iPhone 16 Pro Max, Samsung Galaxy S24 Ultra, Huawei Pura 70 Ultra, Vivo X200 Pro, and Honor Magic 7 Pro. Testing was conducted under both controlled laboratory conditions and real-world scenarios, allowing us to assess the entire imaging pipeline, from photo capture to on-device display rendering.

The experiment was divided into three parts:

- Camera performance: examining HDR photo capture fidelity to the real scene

- Display performance: analyzing how images are rendered on device screens

- Camera to display user experience: studying how a small group of participants perceive fidelity and preference directly on smartphones

Camera capture: a variety of renderings

In this first phase of our experiment, we analyzed HDR photo capture performance by applying our standardized camera testing protocol (learn more here). The captures were assessed on an HDR monitor (an Apple Pro Display XDR) under calibrated reference lighting conditions to ensure perceptual accuracy.

By comparing the captures side by side on the same reference display, we detected meaningful differences in the accuracy of scene reproduction that are attributable solely to the capture process. For scenes shot in low-light conditions in particular, several challenges emerged that could noticeably affect perceived image quality.

Viewing the set of pictures on an HDR screen, we observed notable issues in some of them:

- Inconsistent white balance

- Unnatural skin tones

- In some cases, unbalanced exposure between subject and background

- For a few flagships, a lack of HDR support that affected the rendering

These shortcomings varied across the devices tested (Apple iPhone 16 Pro Max, Huawei Pura 70 Ultra, Honor Magic 7 Pro, Samsung Galaxy S24 Ultra, and Vivo X200 Pro), highlighting the diverse approaches OEMs take in HDR imaging.

Yet the story does not end with camera capture. The way an image is displayed can amplify or mitigate these capture-related limitations, ultimately shaping user perception.

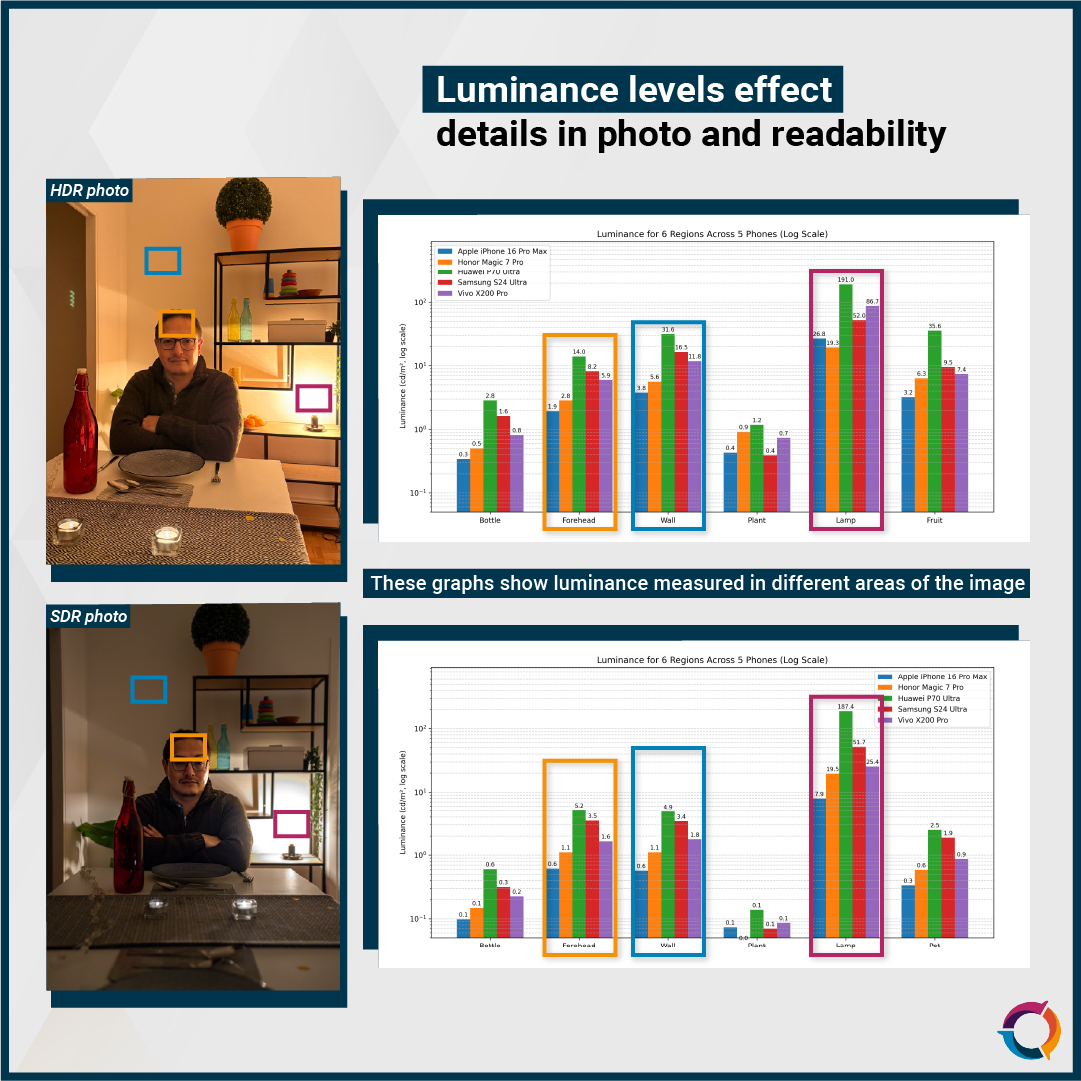

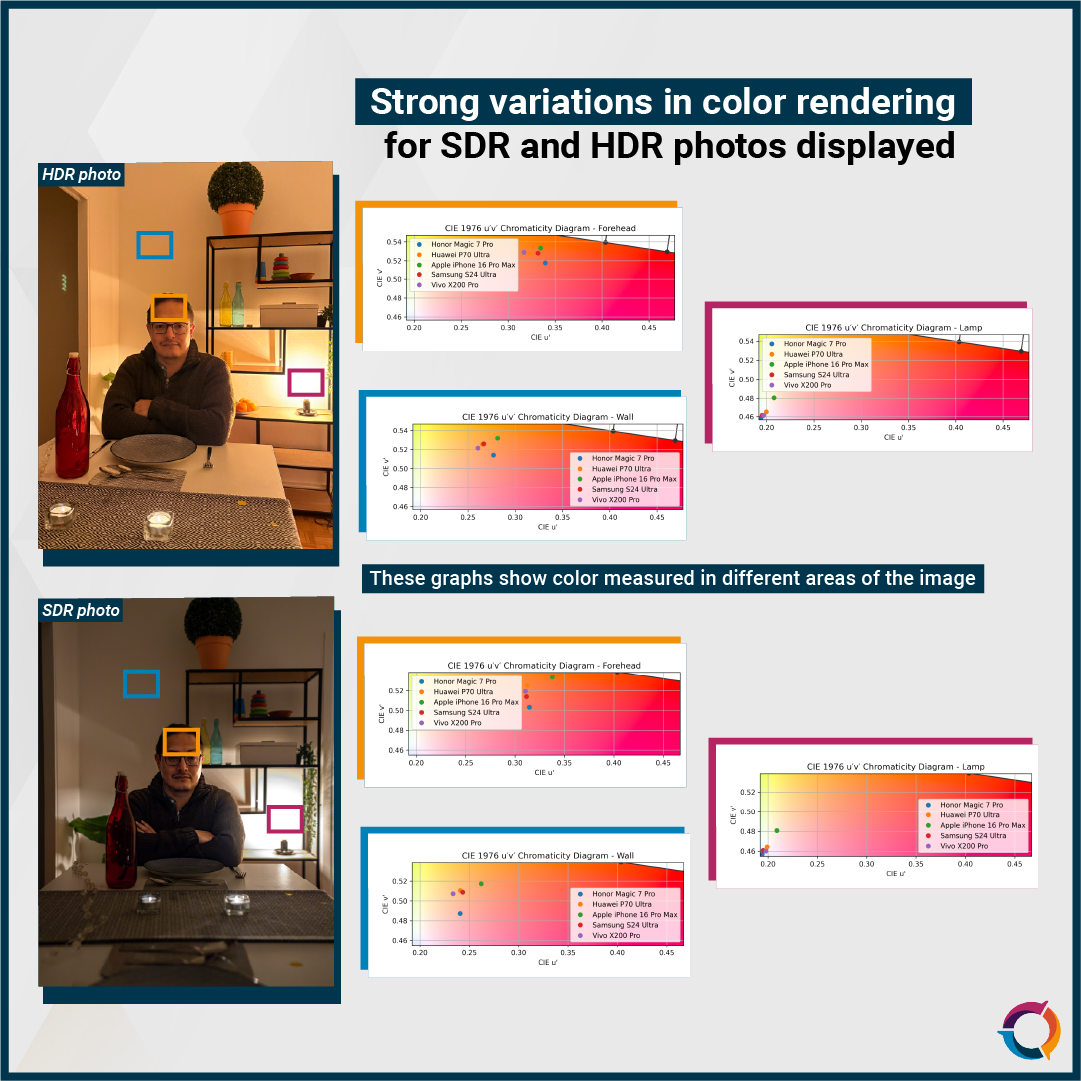

Display playback: disparities in luminance and color strategies

To assess the rendering quality of the tested displays, we loaded two test images on the flagship devices:

- An SDR photo captured with a DSLR camera

- An HDR photo captured with a smartphone (converted into an HDR format that was compatible across all devices)

The goal was to evaluate the difference in rendering on each display for the same input content. Testing was conducted in a controlled lab environment under lighting conditions designed to replicate the original scenes. Detailed luminance and color measurements allowed us to analyze each device’s display behavior with precision.

The measurements revealed clear differences in luminance tuning strategies. Brightness levels varied significantly, affecting both readability and overall clarity.

Noticeable color shifts were also observed across devices, and users are more sensitive to these changes than they might realize. Each OEM deployed its own ambient light adaptation method. Apple’s iPhones, for instance, dynamically adjusted white balance to match environmental conditions, often leaning towards warmer tones in warm, low-light environments.
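One common way to put a number on such color shifts is the distance between two white points in the CIE 1976 u'v' chromaticity diagram. The minimal Python sketch below illustrates that calculation only; the function names are hypothetical, and it is an assumption that a metric of this kind applies here, since the article does not state which color metric was used in the measurements.

```python
import math

def xy_to_uv(x: float, y: float) -> tuple[float, float]:
    """Convert CIE 1931 xy chromaticity to CIE 1976 u'v' chromaticity."""
    denom = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / denom, 9.0 * y / denom

def delta_uv(xy_measured: tuple[float, float],
             xy_reference: tuple[float, float]) -> float:
    """Euclidean distance between two chromaticities in the u'v' plane."""
    u1, v1 = xy_to_uv(*xy_measured)
    u2, v2 = xy_to_uv(*xy_reference)
    return math.hypot(u1 - u2, v1 - v2)

if __name__ == "__main__":
    d65 = (0.3127, 0.3290)          # standard D65 white point
    illuminant_a = (0.4476, 0.4074)  # standard illuminant A (warm, incandescent-like)
    # A display white that drifts from D65 towards illuminant A yields a larger value.
    print(delta_uv(illuminant_a, d65))
```

A larger value means the measured white sits further from the reference white; whether a given shift is visible also depends on the viewer's adaptation to the ambient light.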

The key takeaway of this study is that display rendering extends beyond simple image reproduction. It is fundamentally about contextual adaptation. The result is not a direct mirror of reality, but a perceptual experience shaped by both device design and viewing conditions. As perception varies with the environment, the visual impression does as well, reinforcing the need for holistic testing approaches that consider the entire imaging pipeline.

Evaluating Smartphone Glass to Glass User Experience: From Reality to Preference

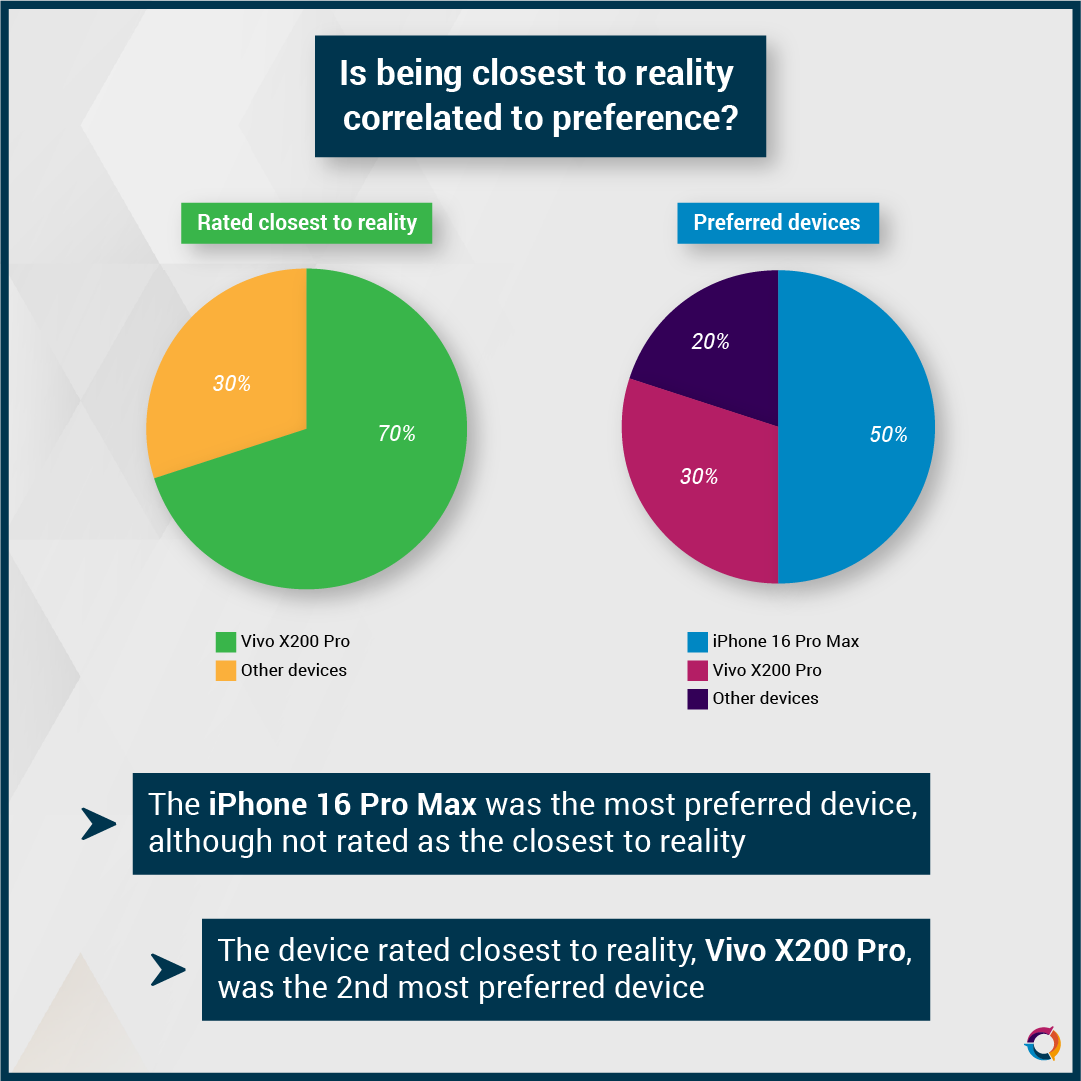

To better understand how users perceive smartphone camera performance, we conducted a focused study with a small group of European participants. The goal was to evaluate the perceived fidelity and preference of image renderings directly on smartphones, moments after capture. The experiment took place in situ, meaning that participants evaluated photos in the same environment where they were taken.

Two complementary evaluation methods were used.

- First, each participant provided an individual assessment by answering: “How close is the rendering to reality?” on a 1–5 scale.

- Second, a side-by-side comparison was conducted, where participants selected the rendering that looked closest to reality and the one furthest from reality.
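As an illustration of how responses from these two methods might be aggregated, here is a minimal Python sketch. The function names, the device labels, and the best-minus-worst scoring rule are assumptions made for this example; they are not the analysis used in the study, and the sample values are placeholders rather than results.

```python
from collections import Counter
from statistics import mean

def mean_ratings(ratings: dict[str, list[int]]) -> dict[str, float]:
    """Average the 1-5 individual ratings collected for each device."""
    return {device: mean(scores) for device, scores in ratings.items()}

def best_worst_scores(choices: list[tuple[str, str]],
                      devices: list[str]) -> dict[str, float]:
    """Score each device as (times picked closest - times picked furthest) / trials.

    Each entry in `choices` is one side-by-side trial: (closest, furthest).
    Scores range from -1 (always furthest) to +1 (always closest).
    """
    if not choices:
        return {device: 0.0 for device in devices}
    closest = Counter(best for best, _ in choices)
    furthest = Counter(worst for _, worst in choices)
    n = len(choices)
    return {d: (closest[d] - furthest[d]) / n for d in devices}

if __name__ == "__main__":
    # Placeholder data with hypothetical device labels, NOT study results.
    ratings = {"device_a": [4, 5, 3], "device_b": [2, 2, 3]}
    choices = [("device_a", "device_b"),
               ("device_a", "device_b"),
               ("device_b", "device_a")]
    print(mean_ratings(ratings))
    print(best_worst_scores(choices, ["device_a", "device_b"]))
```

The same two functions could be reused for the preference round, since only the question put to participants changes, not the response format.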

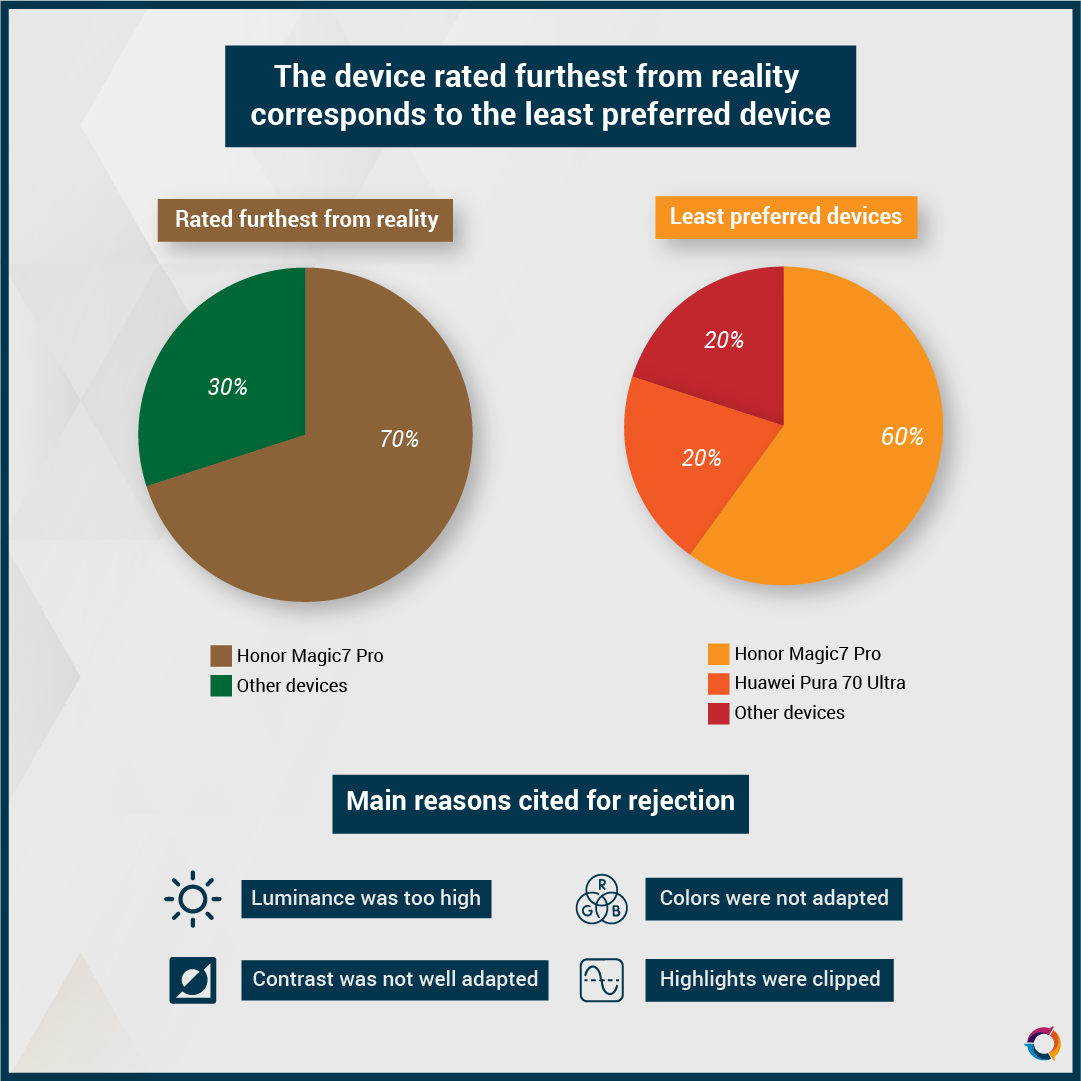

The results showed a nuanced picture. In the individual evaluation, the Vivo X200 Pro, the iPhone 16 Pro Max, and the Huawei Pura 70 Ultra were rated as close to reality overall. In contrast, the Honor Magic 7 Pro was consistently considered the least faithful, both individually and in side-by-side comparisons.

To extend our understanding beyond fidelity, the experiment was repeated with a new focus: user preference. Participants were asked “How do you like the rendering?” (1–5 scale), and in side-by-side mode they were instructed to select their most preferred and least preferred rendering.

Interestingly, the findings diverged from the fidelity-focused evaluation. The iPhone 16 Pro Max emerged as the most preferred device in side-by-side comparisons, followed by the Vivo X200 Pro. While the Vivo had previously been considered the most accurate, the iPhone was slightly more appreciated by participants overall. Once again, the Honor Magic 7 Pro ranked last, being both the least preferred and the least faithful to reality.

These two complementary rounds of evaluation revealed two key insights.

- A perceived faithful reproduction of reality is not necessarily the most preferred rendering. Subtle image processing choices, such as contrast or color enhancement, may influence preference even if they deviate from real-life perception.

- There is a clear correlation between the least faithful and the least preferred rendering, as seen with the Honor Magic 7 Pro.

Taken together, these insights illustrate that user experience in smartphone photography sits at the intersection of accuracy and appeal, underlining the importance of balancing fidelity and enhancement when designing an imaging pipeline that spans both camera and display. Further studies are currently being conducted to better understand these preferences.

What does it take to get a good Glass to Glass Experience?

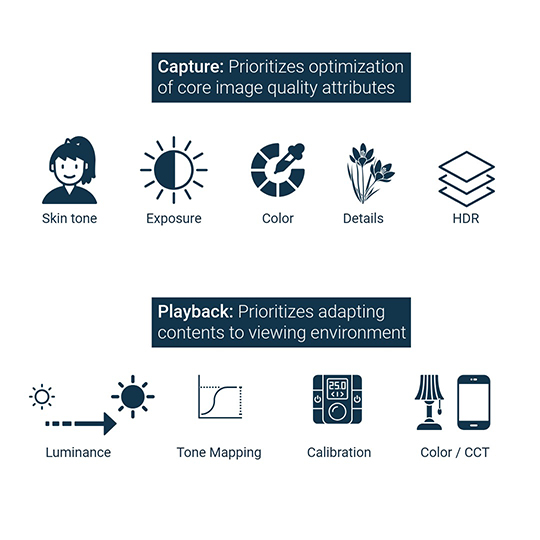

Delivering a unique camera-to-display experience on a smartphone requires the interplay of several critical components working in harmony.

At the foundation is the ambient light sensor (ALS), a small but essential element that constantly measures the surrounding lighting conditions. Manufacturers such as ams OSRAM, a leader in optical sensing technologies, produce advanced ALS solutions that provide this environmental awareness. Spectral ALS variants, in particular, deliver precise measurements of chromaticity and illuminance (lux), allowing devices to adapt image capture and display playback to the ambient environment. These sensors are typically integrated on both the camera and display sides of the device.
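To make the link between a chromaticity measurement and ambient CCT concrete, the following minimal Python sketch converts CIE XYZ tristimulus values to xy chromaticity and then estimates CCT with McCamy's well-known approximation. It is an illustration under stated assumptions: the function names are hypothetical, the input is assumed to already be calibrated XYZ, and nothing here describes how any particular sensor or smartphone computes CCT.

```python
def xyz_to_xy(X: float, Y: float, Z: float) -> tuple[float, float]:
    """Project CIE XYZ tristimulus values onto the CIE 1931 xy chromaticity plane."""
    total = X + Y + Z
    if total <= 0:
        raise ValueError("XYZ values must sum to a positive number")
    return X / total, Y / total

def cct_mccamy(x: float, y: float) -> float:
    """Estimate correlated color temperature (kelvin) with McCamy's approximation.

    The approximation is intended for chromaticities near the Planckian locus,
    roughly in the range of common light sources.
    """
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33

if __name__ == "__main__":
    # D65 white point expressed as XYZ (Y normalized to 100).
    x, y = xyz_to_xy(95.047, 100.0, 108.883)
    print(round(cct_mccamy(x, y)))  # close to the nominal 6504 K of D65
```

In this sketch, illuminance would come directly from the sensor's lux reading, while the estimated CCT could feed white balance on the capture side and white point adaptation on the display side.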

The camera then takes on the task of capturing photos and videos, but the raw image is only the starting point. Through sophisticated image processing, each manufacturer applies its own stylistic choices, enhancing details, balancing exposure, and adjusting color reproduction to create a signature visual identity.

Once the content is captured, the display becomes the final stage of the pipeline, responsible for rendering the image or video back to the user. Here again, adaptation plays a crucial role, as the display fine-tunes brightness, contrast, and color balance to match the ambient light, striving to maintain both clarity and comfort across diverse conditions, from dim interiors to bright outdoor sunlight.
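As a rough illustration of ambient brightness adaptation, the Python sketch below maps an illuminance reading to a target display luminance by interpolating along a curve in log-lux space. Everything specific in it is an assumption: the anchor points, the function name, and the interpolation scheme are hypothetical and do not reflect any manufacturer's actual tuning, which is precisely where devices differ.

```python
import math

# Hypothetical (ambient lux, target nits) anchor points, for illustration only.
BRIGHTNESS_CURVE = [(1.0, 5.0), (100.0, 100.0), (1000.0, 400.0), (10000.0, 1000.0)]

def target_nits(lux: float, curve=BRIGHTNESS_CURVE) -> float:
    """Interpolate target luminance linearly in log10(lux), clamped at both ends."""
    if lux <= curve[0][0]:
        return curve[0][1]
    if lux >= curve[-1][0]:
        return curve[-1][1]
    for (lux0, nits0), (lux1, nits1) in zip(curve, curve[1:]):
        if lux0 <= lux <= lux1:
            t = (math.log10(lux) - math.log10(lux0)) / (math.log10(lux1) - math.log10(lux0))
            return nits0 + t * (nits1 - nits0)
    return curve[-1][1]  # not reached for a curve sorted by lux

if __name__ == "__main__":
    for lux in (0.5, 10, 300, 50000):
        print(lux, round(target_nits(lux), 1))
```

Interpolating in log-lux rather than linear lux is a design choice made here because ambient illuminance spans several orders of magnitude between a dim interior and outdoor sunlight.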

What ultimately defines the user experience, however, is not just the individual performance of these components but the way they are tuned to work together. The subtle choices made in calibration and tuning can elevate the experience, making visuals feel vivid and lifelike, or conversely undermine it, leaving them flat or unrealistic. This fine balance makes tuning a decisive factor in shaping user perception.

Conclusion

Ultimately, our findings highlight the importance of evaluating the entire imaging pipeline as a whole, from ambient light sensing to camera capture and display rendering, because this is how users actually experience their smartphones. Testing components in isolation cannot fully explain how fidelity and preference interact once everything comes together in the user’s hands.

Beyond the technical aspects, many studies also underline that preferences are not universal: they are deeply rooted in culture, habits, and regional aesthetics. A rendering style that appeals to European users may not resonate the same way in Asia or North America. This is why local evaluations are critical, ensuring that tuning strategies are adapted to specific user groups.

By embracing both technical accuracy and cultural sensitivity, manufacturers can better align their devices with real-world user perception and deliver experiences that feel both authentic and engaging.

In addition, just as smartphones today are recognized for their distinctive “camera signatures”, reflecting each manufacturer’s unique approach to exposure, color balance, and tone, we may now be entering an era of the “display signature.” Beyond capture, the way a device presents content on screen can be just as defining. Some smartphones favor dimmer brightness adaptation in low light, giving a more cinematic, subdued experience, while others embrace highly vivid color rendering, creating instantly recognizable visuals across their product lines. Ultimately, crafting a meaningful display signature requires a deep understanding of the visual scene and context. That understanding enables the device to apply the most relevant settings and parameters and to deliver a consistent, intentional viewing experience, one that becomes as characteristic as its camera output.