To keep up with the rapid pace of innovation in smartphone cameras, we have overhauled and expanded our DxOMark Mobile suite of image quality tests. The most obvious change is our addition of an extensive set of tests to measure Zoom performance and Bokeh quality. These two new sub-scores join our existing Photo sub-scores to create the new overall Photo score. But that is far from the only change. We have also expanded our indoor and outdoor test suites to include low-light testing, effects of subject motion, and more exacting high-dynamic range tests. Overall, we now capture over 1500 still images and two hours of video for each device we test.

We have retested several of the top-scoring phones using our new test protocol, and the results help illustrate how the scope of the new DxOMark Mobile has been expanded to cover recent innovations and features, making it more relevant. You can get a sense of some of the changes by looking at this chart of old versus new scores for some of the leading smartphones. For example, our new test suite that measures Zoom and Bokeh helps set the dual-camera design of the iPhone 7 Plus apart from the single-lens iPhone 7. Some of the other camera scores have also changed significantly based on how they deal with low light, motion, and HDR, three other capabilities we now test more extensively.

Highlights of our new DxOMark Mobile Score include:

- All new Zoom sub-score based on extensive testing at multiple focal lengths

- All new Bokeh sub-score using a scene designed to allow comparisons

- Low-light testing down to 1 Lux

- Additional high dynamic range testing

- Motion added to the test suite to more accurately evaluate camera performance in real-world situations

- Over 1500 images and two hours of video captured and analyzed for each device, using expanded lab and outdoor test scenes

Our new Zoom sub-score

Until recently, almost without exception, zooming on a smartphone has meant a simple digital scaling of the initial wide-angle image. With the advent of dual-camera designs featuring a telephoto lens, many smartphones can now take advantage of true optical zoom, or even some form of hybrid zoom that combines images from multiple cameras. As smartphones increasingly replace traditional cameras, users also expect them to meet all their photographic needs – including the ability to zoom in on subjects without losing image quality. So our new DxOMark Mobile includes a Zoom sub-score that evaluates a camera’s performance at a variety of distances from a fixed-size subject. Having a second camera with a telephoto lens can be advantageous, but design trade-offs and software challenges mean that real-world performance varies greatly among devices.

It will be especially interesting to compare the zoom performance of recent optical or hybrid zoom cameras with that of older large-sensor, high-resolution cameras, such as the Nokia 808, that were also designed for better zoom performance.

Simulated Depth Effects and Bokeh provide an artistic flair to smartphone photographs

As with Zoom, having a subject that pops from the background has until recently required a standalone camera with a large sensor. Now, though, many smartphones offer a computational bokeh effect, using dual cameras, computational imaging, or both to create a Depth Effect and to shape the appearance of out-of-focus bright spots, simulating the Bokeh (blurred background) typically associated with a wide-open lens on a high-end camera. Using purpose-built indoor test scenes and carefully designed outdoor test scenes, we now evaluate smartphone cameras on how well they provide a smooth Depth Effect, as well as on the quality of their simulated Bokeh. In addition, longer focal lengths produce less subject deformation than wide-angle shots, which is especially noticeable with portraits, so we judge each camera on how accurately it renders the proportions of a subject’s face.

You can see from this comparison that when activating Portrait mode the iPhone 7 Plus is able to blur the background, creating a more artistic look for the image.

Added low-light tests reflect how consumers are using their smartphones

Consumers are also now expecting their phones to capture images in very-low-light conditions, so we are testing smartphone cameras in lighting down to 1 Lux (essentially candlelight). This exposes differences in performance between phones that otherwise might rank very similarly. For example, the Apple iPhone 7 does a better job of calculating exposure in very low light than the Samsung Galaxy S6 Edge – even though in more typical lighting conditions they score very similarly:

The S6 Edge has exposure problems in very low light. It also uses a long shutter time to reduce noise, which in turn can cause motion artifacts. Both of these issues contributed to its relatively lower score when tested with our new protocols.

Capturing action means needing to test for Subject Motion

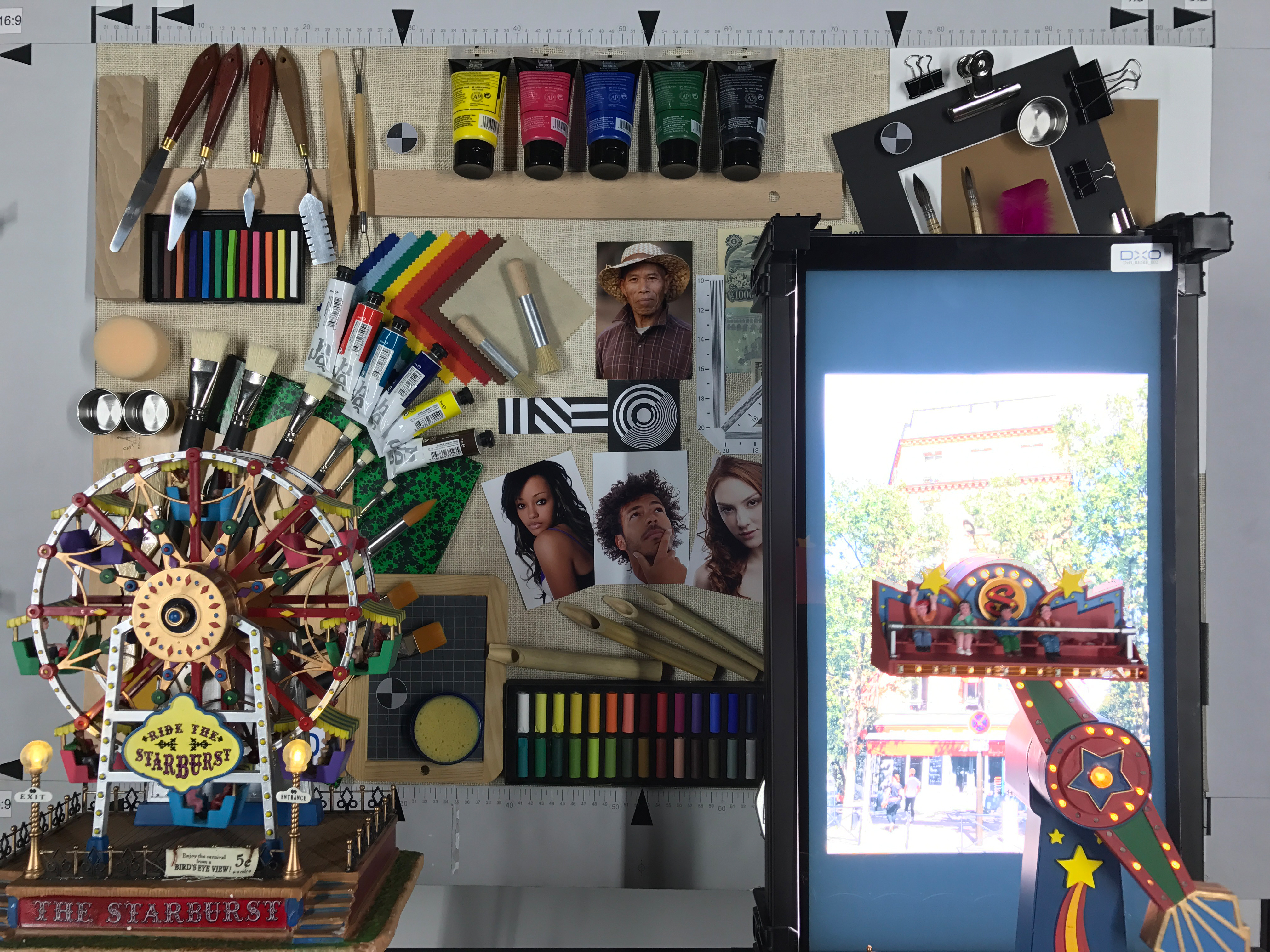

As smartphones are used in more and more action situations, it is important to evaluate how well they handle subject motion. Motion artifacts can be caused by long shutter times or by problems with multi-frame image processing algorithms. To evaluate performance in those conditions, we have added tests that compare low-light performance while mounted on a tripod and when hand-held. This allows us to directly compare detail preservation, noise level, and other aspects of the resulting images, such as with these cropped portions of low-light test images:

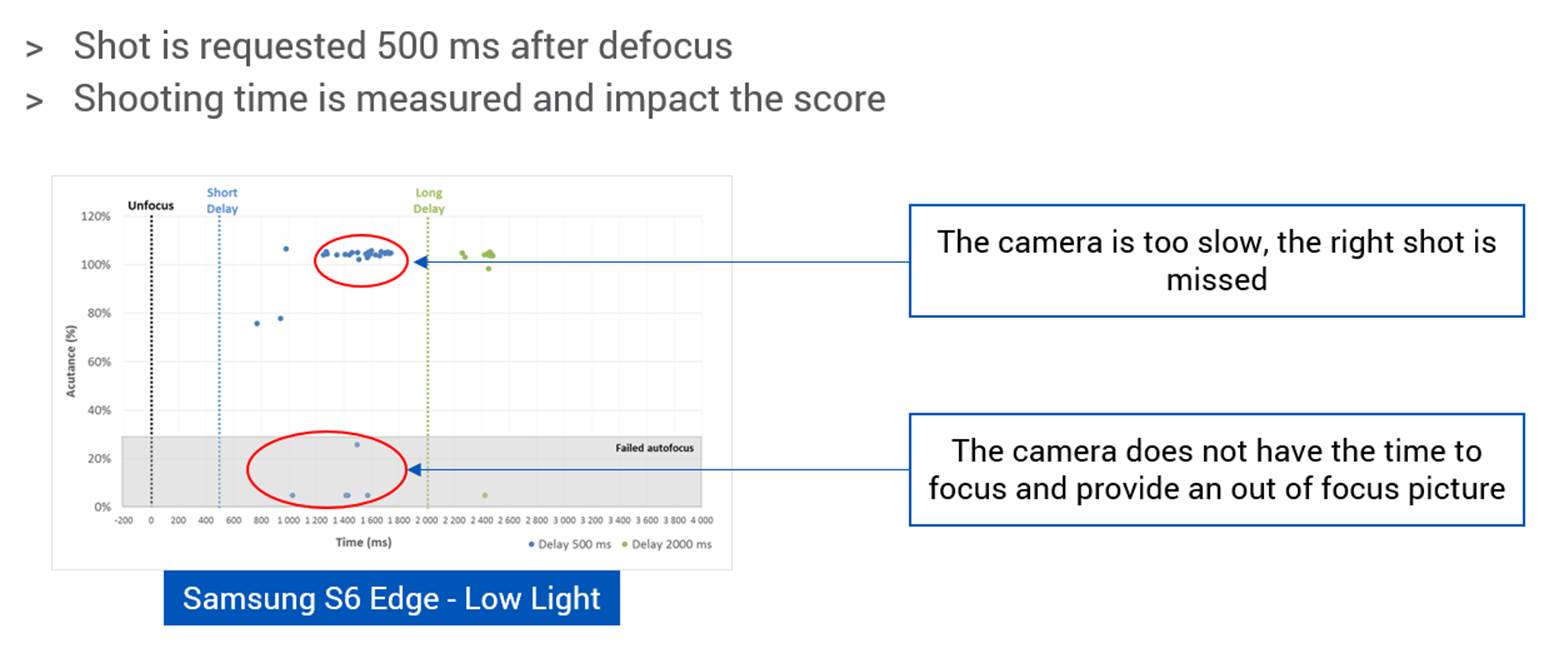

We have also added timing to our Autofocus testing to better understand whether a camera can react quickly enough to accurately focus on a scene — something that is especially important when photographing sports or even family scenes with kids running around. Here you can see that in low light, the Samsung S6 Edge is often slow to focus:

Expanded test scenes push boundaries for Dynamic Range

Many smartphone cameras automatically capture and combine multiple frames to create a single still image, thus enabling them to accurately record scenes with much higher dynamic range. To help measure this and other camera improvements, we have added new challenging indoor and outdoor test scenes. In this challenging scene shot from under a bridge, you can see that the Samsung S6 Edge is unable to keep detail in the sunlit portion of the scene, while the Apple iPhone 7 provides some detail, although not much color. Only the Google Pixel is able to render the blue color accurately.

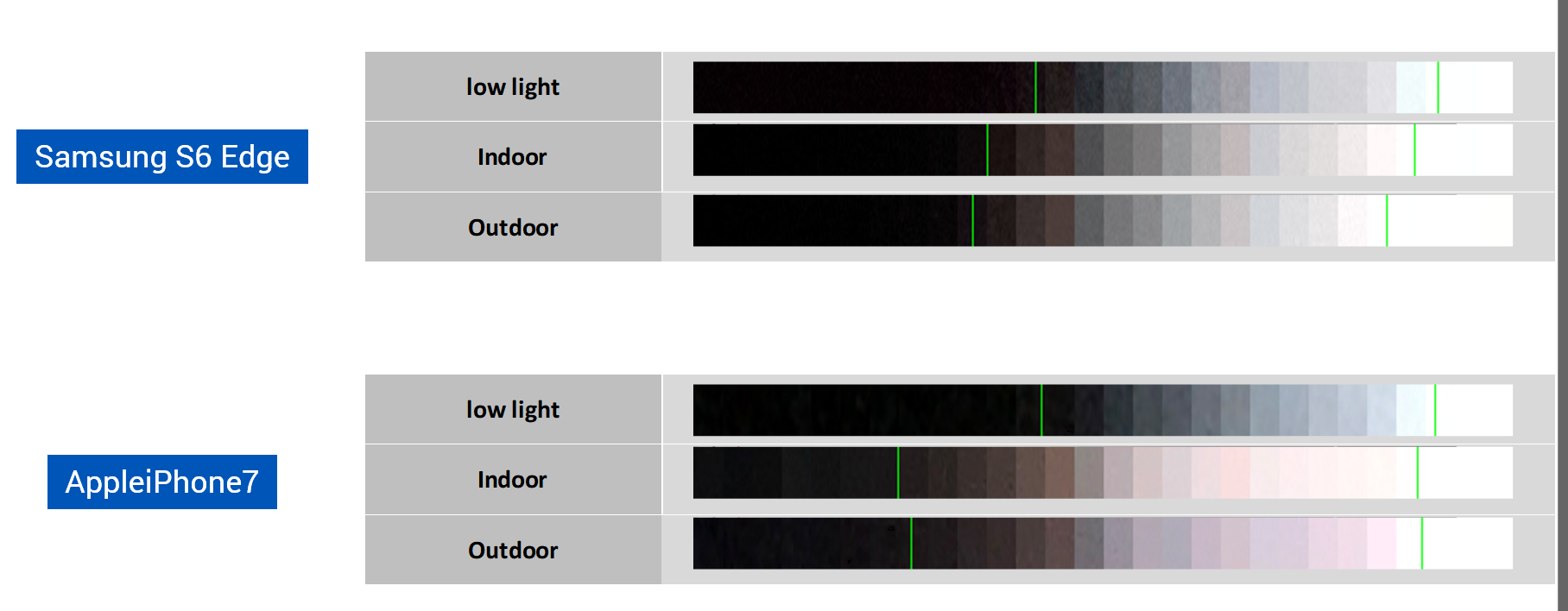

Similarly, you can see the results of our new indoor dynamic range tests, such as the following comparison of the iPhone 7 Plus with the S6 Edge:

The green vertical lines in this chart show the brightest and darkest areas that each camera can discriminate under various lighting conditions. You can easily see that the iPhone 7 does a better job of rendering tonal values in the shadows than the S6 Edge under typical indoor and outdoor lighting conditions.
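
As a rough illustration of how such a span can be expressed as a single number, the gap between the brightest and darkest levels a camera can discriminate is commonly stated in photographic stops, where each stop is a doubling of light. The sketch below is not our scoring method; the function name `dynamic_range_stops` and the luminance readings are hypothetical values chosen only to show the arithmetic.

```python
import math


def dynamic_range_stops(brightest, darkest):
    """Return the span between two luminance levels in photographic stops.

    One stop is a factor of two in light, so the span is log2(brightest / darkest).
    Both values must use the same (arbitrary) linear unit.
    """
    if brightest <= 0 or darkest <= 0:
        raise ValueError("luminance values must be positive")
    if brightest < darkest:
        raise ValueError("brightest must be at least as large as darkest")
    return math.log2(brightest / darkest)


# Hypothetical readings, not measurements of any real device:
# both cameras hold the same highlight level, but camera A still
# separates tones in shadows four times darker than camera B does.
camera_a = dynamic_range_stops(brightest=1000.0, darkest=0.5)
camera_b = dynamic_range_stops(brightest=1000.0, darkest=2.0)

print(f"Camera A: {camera_a:.2f} stops")
print(f"Camera B: {camera_b:.2f} stops")
print(f"Shadow advantage of A over B: {camera_a - camera_b:.2f} stops")
```

With these made-up numbers, a camera that resolves shadows four times darker at the same highlight level gains two stops of usable range, which is the kind of difference the chart's vertical lines make visible.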

Evaluating HDR (High Dynamic Range) performance

We have added tests on natural scenes that evaluate how well a camera can automatically handle and provide a quality image in high-contrast situations. For example, our “garden arch” scene features dynamic range beyond what any current smartphone sensor can capture in a single frame, illustrating the relative advantage of having multiple frames automatically combined. Here you can see that the Apple iPhone 7 Plus and the HTC U11 are able to outperform the Nokia 808’s larger sensor by using multiple images:

Testing low-light Video performance

Just as with still images, low-light performance is of increasing importance to those using smartphones for Video. To respond to this need, our new DxOMark Mobile Video test includes lighting conditions down to 1 Lux (essentially candlelight). These tests show performance differences between phones that otherwise have similar performance characteristics. For example, this chart illustrates that in low light the iPhone 7 Plus under-exposes scenes, while the Google Pixel does a much better job:

Upgrades to Video Color, Texture, Noise, and Stabilization tests

In addition to adding low light conditions to our Video tests, we have enhanced our tests for Video Color, Texture, Noise, and Stabilization. Our Video Color tests now include all of the tests we use for our still image evaluation, including the same method of more accurately scoring pleasing color renderings by using a combination of objective and perceptual evaluation. An upgraded version of Video Texture analysis now includes an objective set of tests to complement our perceptual tests, in a range of lighting conditions especially focusing on indoor lighting levels. Our Video Noise tests have been greatly expanded and do an improved job of evaluating both spatial and temporal noise. In addition, our Video Stabilization tests are also more challenging with our new protocol, and include evaluating artifacts.

Our new DxOMark Mobile tests do an improved job of differentiating between top performers

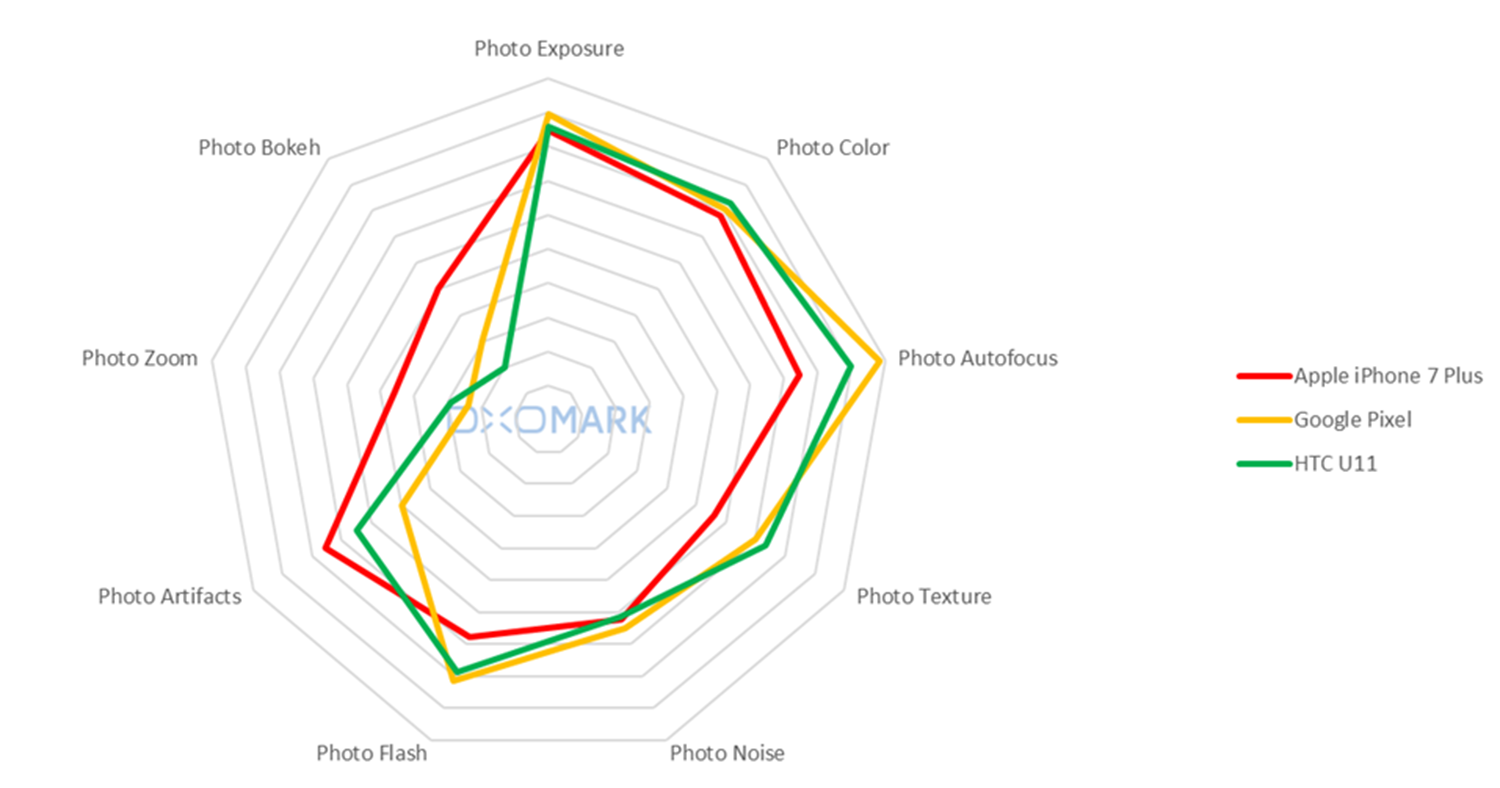

While the same phones that came out on top in our old rankings are still at the top now that we have retested them with our new test protocol, it is now even easier to understand their relative strengths and weaknesses, as you can see from this chart of several retested flagship phones:

Our new test protocol shows that the best phones, despite having similar overall scores, have different strengths and weaknesses. For example, our new tests make it clear that the Pixel and U11 can in some situations capture better image detail in default mode, while the iPhone 7 Plus is better for Zoom and Portrait photography.

We have only seen the beginning of how computational imaging coupled with multi-camera and multi-frame capture will improve the utility of smartphone cameras for an increasing number of challenging situations. Our new DxOMark Mobile test protocols will enable us to evaluate these advanced capabilities, and help us to continue to be the leading source of independent image quality test results for the press, industry, and consumers.
