
Last update: November 4, 2019

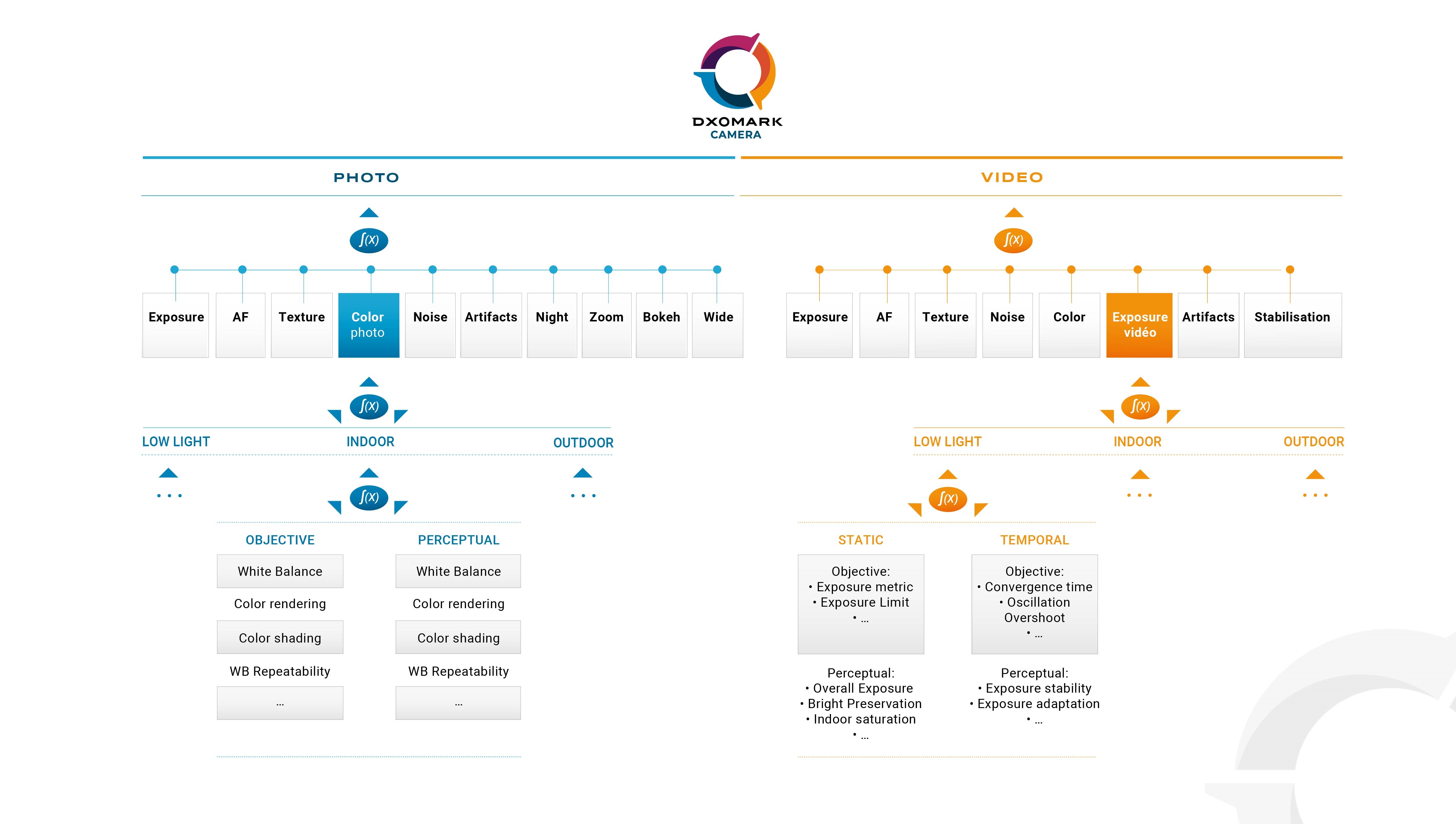

DXOMARK’s Camera (previously called DxOMark Mobile) scores and rankings are based on testing every camera using an identical, extensive process that includes shooting nearly 1,500 images and dozens of clips totaling more than two hours of video. The tests take place in both lab and real-world situations, using a wide variety of subjects. Our scores rely on purely objective tests for which the results are calculated directly by the test equipment, and on perceptual tests for which we use a sophisticated set of metrics to allow a panel of image experts to compare various aspects of image quality that require human judgment. Testing a single mobile device involves a team of several people for a week. Once we have tested the device, we score the Photo and Video quality separately, and then combine them into an Overall score for quick comparison among the cameras housed in different devices.

We test every device in exactly the same way, in identically-configured lab setups, using the same test procedures, the same scenes, the same types of image crop rankings, and the same software. This means DXOMARK results are both reliable and repeatable.

DXOMARK smartphone camera reviews, which include scores, sample images, and analyses, are fundamentally different from most camera reviews. Instead of being driven by a reviewer’s personal experience with and feedback about a camera, they are driven by the scores and analyses that come out of our extensive tests. We get a lot of questions about our scores, as well as about our testing and review process, so we’re sharing with you what goes into a DXOMARK Camera (previously DxOMark Mobile) score, as well as the process we use for testing and for writing up the subsequent review.

DXOMARK Overall score

The most frequently-cited score for a mobile device camera is the Overall score. It is created when we map the dozen or so sub-scores into a number that gives a sense of the device’s total image quality performance. An overall score is important, since we need to provide some way to rank results and have a simple answer for those not wishing to investigate further.

We weight the various results in a way that most closely matches their importance in real-world applications as judged by mainstream users — people typically interested in capturing family memories or sports — and by those who care about image quality above all else (whom we refer to as photo enthusiasts). We work with many different types of smartphone photographers to ensure that both the DXOMARK Camera (previously called DxOMark Mobile) Overall score and its sub-scores reflect what is important to them in their photography. Following from this, we test results — finished images — and not technologies. So, for example, autofocus and bokeh performance are tested independent of the method a particular camera uses to accomplish them.

This means that a device with consistently very good scores across the board may receive a higher rating than one that has a few excellent strengths and some noticeable weaknesses. However, every user is different, and each has different priorities. For that reason, we encourage anyone reading our reviews to dig in past the Overall score into the sub-scores and written analysis.

We also get asked how a device’s Overall score can be higher than its sub-scores. The Overall score is not a weighted sum of the sub-scores. It is a proprietary and confidential mapping of sub-scores into a combined score. The Overall score is also not capped at 100. That just happens to be where some of the best devices are currently.

Photo and Video sub-scores

To help evaluate how well a smartphone camera will perform in specific use cases, we provide sub-scores for both Photo and Video, covering Exposure and Contrast, Color, Autofocus, Texture, Noise, Artifacts, Flash, and Stabilization (for Video). In 2017, we added Photo sub-scores for Zoom and Bokeh to reflect the advanced capabilities of many current mobile devices.

Which sub-scores are most important to you will depend on the types of photography you do with your mobile device. We compute sub-scores from the detailed results of tests that we perform under a variety of lighting conditions, and which include both scientifically-designed lab scenes, and carefully-planned indoor and outdoor scenes.

Sub-score categories

For each of our sub-score categories, we’ll explain what we are testing, something about the tools and techniques we use to test it, and some interesting details relevant to the testing process. In many cases, you can learn more about some of the hardware and software we use by looking at our Analyzer product website.

Exposure and Contrast

Exposure measures how well the camera properly adjusts to and captures the brightness of the subject and the background. Dynamic range is the ability of the camera to capture detail from the brightest to the darkest portions of a scene.

Conditions & challenges: This sub-score matters most to those who shoot in difficult lighting conditions (such as backlit scenes), or in conditions with a large variation between light and dark areas (for example, sunlight and shade). Overall, it is the most important sub-score, as it affects all types of photography.

Details: We assess and measure target exposure and contrast under varying light levels and types of lighting. We also calculate the maximum contrast values for each image. We conduct similar tests using carefully-designed indoor and outdoor scenes.
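
Dynamic range is commonly expressed in stops, where each stop represents a doubling of luminance. The short sketch below only illustrates that arithmetic. The function name and the sample luminance values are hypothetical and are not part of the DXOMARK protocol.

```python
import math


def dynamic_range_stops(brightest: float, darkest: float) -> float:
    """Return the luminance ratio expressed in photographic stops (log base 2)."""
    if brightest <= 0 or darkest <= 0:
        raise ValueError("luminance values must be positive")
    return math.log2(brightest / darkest)


# Hypothetical scene: the brightest detail is 1024 times brighter than the darkest.
print(dynamic_range_stops(1024.0, 1.0))  # 10.0
```

A camera that keeps detail across more stops in a single image can handle scenes such as sunlight and shade with less clipping in the highlights or shadows.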

Color

The Color sub-score is a measure of how accurately the camera reproduces color under a variety of lighting conditions, as well as how pleasing its color rendering is to viewers.

Conditions & challenges: As with exposure, good color is important to nearly everyone. In particular, landscape and travel photographs depend on pleasing renderings of scenes and scenery. Pictures of people benefit greatly from good skin tones, too.

We measure color rendering by shooting a combination of industry-standard color charts, carefully-calibrated custom lab scenes, and outdoor scenes under a variety of conditions. A closed-loop system controls the lighting to ensure accurate light levels and color temperatures.

Details: As with Exposure and Contrast, we test Color using a variety of light levels and types of light, but this time with the purpose of measuring how well and how repeatably the camera calculates white balance, and then how accurately it renders colors in the scene. We also measure uniformity of color. We do not penalize cameras for slight differences in color rendering that are deliberately introduced to create a particular look desired by the manufacturer. For example, some cameras maintain slightly warm colors to convey the atmosphere created by low tungsten light.
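
One widely used way to express color accuracy as a number is a color difference such as CIE76 ΔE*ab, the Euclidean distance between a reference color and the rendered color in CIELAB space. The sketch below assumes that metric purely for illustration. The text above does not state which metric DXOMARK uses, and the patch values are made up.

```python
import math


def delta_e_76(reference_lab, measured_lab):
    """CIE76 color difference: Euclidean distance between two CIELAB triples."""
    return math.sqrt(sum((r - m) ** 2 for r, m in zip(reference_lab, measured_lab)))


# Hypothetical chart patch: reference value versus what the camera rendered.
reference = (50.0, 0.0, 0.0)
measured = (50.0, 3.0, 4.0)
print(delta_e_76(reference, measured))  # 5.0
```

Repeating such a measurement over several shots of the same chart would also give a rough indication of how repeatable the white balance is.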

Autofocus

The Autofocus sub-score measures how quickly and accurately the camera can focus on a subject in varying lighting conditions.

Conditions & challenges: Anyone who photographs action, whether it is children playing or a sporting event, knows that it can be difficult to get the subject in focus in time to capture the image you want. We measure a camera’s autofocus accuracy and speed performance in a variety of lighting conditions. The importance of this sub-score is directly related to how much activity there is in the photographs you tend to take.

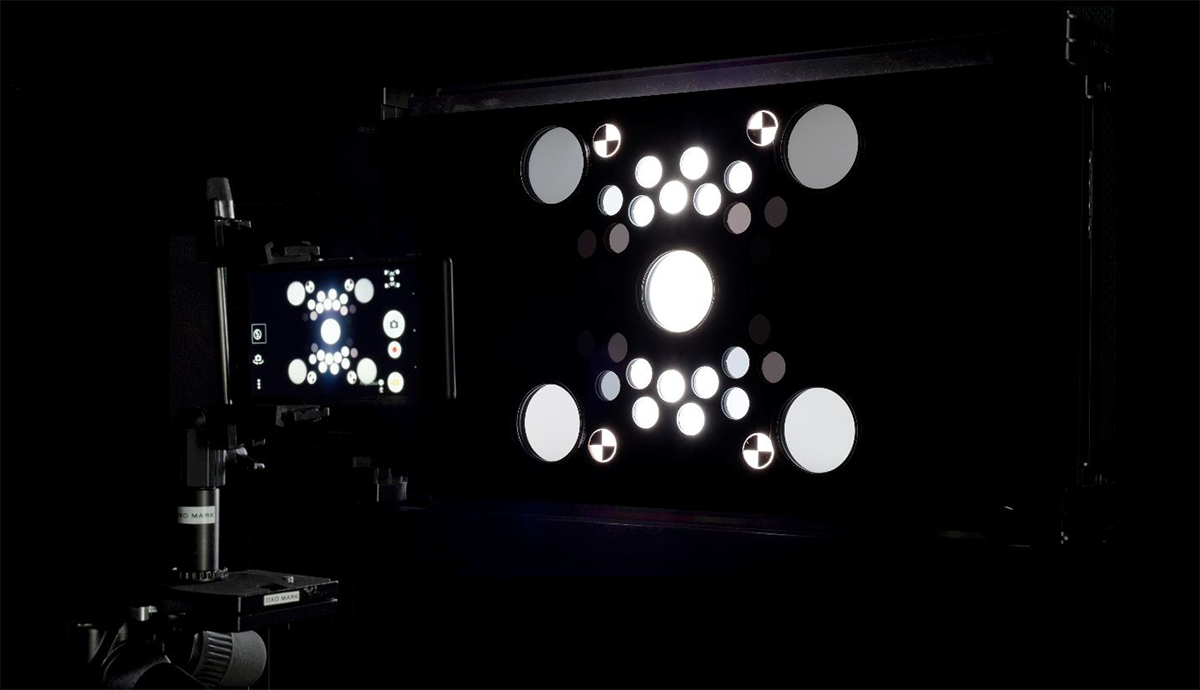

Details: When measuring autofocus performance, the camera needs to be defocused before each shot, and the exact interval between the moment the shutter is pressed and the moment the image is captured has to be recorded accurately, along with the exact shutter time. In addition to our custom LED timer, we have created a system that uses an artificial shutter trigger and multiple beams of light to ensure the accuracy of our autofocus measurements.
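
The timing part of such a measurement can be sketched as follows. This is a minimal illustration that assumes each shot yields a trigger timestamp and a capture timestamp in milliseconds. The function name and the sample data are hypothetical and do not describe the actual DXOMARK tooling.

```python
def shutter_lags_ms(events):
    """Compute per-shot delay from (trigger_time_ms, capture_time_ms) pairs."""
    lags = []
    for trigger_ms, capture_ms in events:
        if capture_ms < trigger_ms:
            raise ValueError("capture cannot precede the trigger")
        lags.append(capture_ms - trigger_ms)
    return lags


# Hypothetical series of three shots, each taken after defocusing the camera.
shots = [(0, 420), (1000, 1380), (2000, 2460)]
lags = shutter_lags_ms(shots)
print(lags)                   # [420, 380, 460]
print(sum(lags) / len(lags))  # 420.0 (average delay)
print(max(lags) - min(lags))  # 80 (spread between fastest and slowest shot)
```

Reporting the spread alongside the average matters because a camera that is fast on average but inconsistent can still miss the decisive moment.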

Texture and Noise

The Texture sub-score measures how well the camera can preserve small details, such as those found on object surfaces. This has become particularly important as camera vendors have introduced noise reduction techniques (for example, longer shutter times and post-processing) that can have the side effect of decreasing detail because of motion blur and softening.

Conditions & challenges: For many types of photography, especially casual shooting, preservation of tiny details is not important. But those who expect to make large prints from their photographs, or who are documenting art work, will appreciate a good Texture sub-score. Expansive outdoor scenes are also best captured when details are accurately preserved.

The Noise sub-score measures how much noise is present in an image. Noise can come from the light in the scene itself, or from the camera’s sensor and electronics.

Conditions & challenges: In low light, the amount of noise in an image increases rapidly. If you shoot a lot in the evening, or indoors, then finding a phone camera with a good score — meaning that it keeps noise to a minimum — is very important. If you shoot primarily outside in good light, it won’t matter as much.

Details: Texture and noise are two sides of the same coin. Image processing to remove noise also tends to decrease detail and smooth out texture in the image. That is one reason we test using a wide range of lighting conditions, from 10,000 Lux all the way down to 1 Lux. We also use custom-designed scenes that include moving objects in addition to industry-standard static charts, so that we can take note of any side effects of excessively-long shutter times or excessive image processing. DXOMARK’s texture charts include those that conform to various industry standards, including CPIQ (Camera Phone Image Quality standard). We carefully print and profile all charts before putting them to use.
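
To make the trade-off concrete, the sketch below estimates a signal-to-noise ratio on a patch that should be uniform, using the ratio of mean to standard deviation. This is a generic textbook measure chosen for illustration, not the metric DXOMARK uses, and the pixel values are invented.

```python
import math
from statistics import mean, pstdev


def patch_snr_db(pixel_values):
    """Signal-to-noise ratio of a nominally uniform patch, in decibels."""
    signal = mean(pixel_values)
    noise = pstdev(pixel_values)
    if noise == 0:
        return math.inf  # a perfectly flat patch has no measurable noise
    return 20 * math.log10(signal / noise)


# Hypothetical gray patch before and after noise reduction.
noisy_patch = [98, 102, 98, 102]
smoothed_patch = [99, 101, 99, 101]
print(round(patch_snr_db(noisy_patch), 2))     # 33.98
print(round(patch_snr_db(smoothed_patch), 2))  # 40.0
```

The smoothed patch scores better on noise, but the same smoothing applied to fine detail would lower texture, which is why the two are evaluated together.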

Artifacts

The Artifacts sub-score measures how much a camera’s lens and digital processing introduce distortion or other flaws into images.

Conditions & challenges: While noise is primarily related to a camera’s sensor, artifacts are the distortions created because of its lens. These can range from straight lines looking curved, to strange multi-colored areas. In addition, lenses tend to be sharpest at the center and less sharp at the edges, which is also measured as part of this sub-score. A high artifact score (based on awarding points for keeping artifacts to a minimum) is most important for those who care about the overall artistic quality of their images.

Details: Motion in test scenes is essential when evaluating artifacts, as many modern smartphone cameras fuse multiple images or images from multiple cameras to create a final result. If not executed correctly, these techniques can result in visible and distracting artifacts in complex moving scenes.

Flash (Photo only)

The Flash sub-score measures the effectiveness of the built-in flash (if any) in accurately illuminating the subject.

Conditions & challenges: Smartphone flashes are only useful at very short distances, and even at short distances, they may not be able to fully illuminate a subject. They can also cause unpleasant color shifts in the scene, since the color of the flash LED may not be the same as the other light in the scene. This sub-score is most important to those who capture a lot of close-up indoor photos, such as those of gatherings of family and friends.

Details: Modern smartphone flashes have become very sophisticated, sometimes using multi-color LEDs to attempt to match the color of the flash to the color of the ambient light. Our tests measure how well the flash performs both as a standalone light source, and when it is used as a fill flash along with natural or other light sources. Our flash performance tests take into consideration how well colors are preserved, how accurate the white balance is, and the characteristics of light falloff towards the edges of the image.

Zoom (Photo only)

Until recently, the only zoom capability in smartphone cameras was either a digital zoom that simply upscaled the image, or a crop if the original image came from a very high-resolution sensor. Now, however, an increasing number of mobile devices feature multiple cameras with different focal lengths. This gives them the ability to perform some types of optical or blended zooming, which means improved image quality over simple digital resizing.

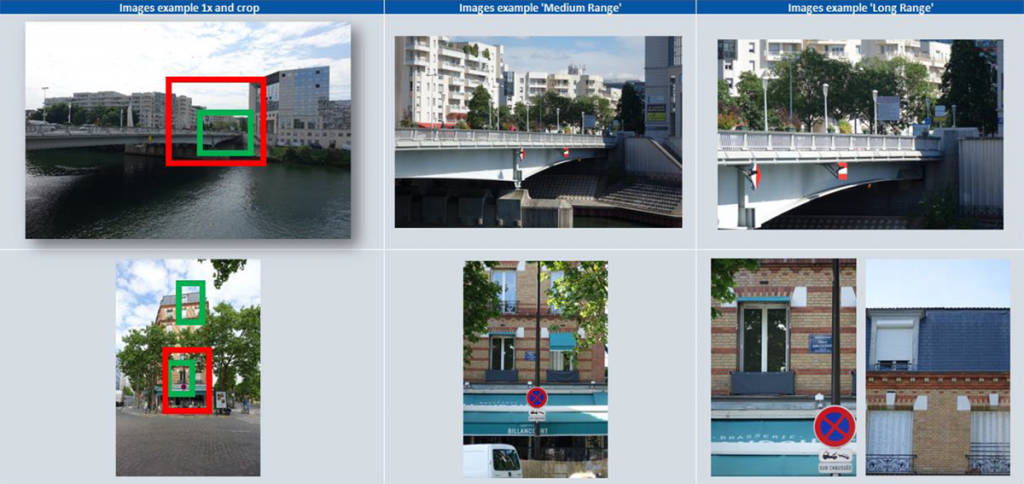

Our new DXOMARK Camera (previously called DxOMark Mobile) protocol now includes tests to evaluate image quality when zoomed in at magnifications ranging from about 2x to 10x.

Conditions & challenges: Zoom is very helpful for achieving a natural perspective in portrait photos, as well as for capturing events at a distance (including most sports). Similarly, travel photography often benefits from zooming in on distant landmarks.

Details: Because a camera can implement zoom in several ways (for example, using a telephoto lens, blending the images from two cameras, cropping a high-resolution sensor, or simple scaling), we test at a number of different focal lengths to highlight the strengths and weaknesses of each implementation. A telephoto lens is often not as bright as the main lens, so some cameras switch to the main lens in low light, even at their zoom setting. For that reason, we include low-light tests in addition to outdoor and well-lit indoor scenes.
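
The cost of crop-based zoom follows from simple arithmetic: cropping by a linear factor keeps only one over that factor squared of the sensor's pixels. The sketch below illustrates this with a hypothetical helper and example sensor resolutions that are not taken from any specific device.

```python
def effective_megapixels(sensor_megapixels: float, zoom_factor: float) -> float:
    """Pixels remaining after a pure crop zoom, before any upscaling."""
    if zoom_factor < 1:
        raise ValueError("zoom_factor must be at least 1")
    return sensor_megapixels / zoom_factor ** 2


print(effective_megapixels(12.0, 2.0))  # 3.0
print(effective_megapixels(48.0, 2.0))  # 12.0
```

This is why a 2x crop from a very high-resolution sensor can still hold useful detail, while the same crop from a lower-resolution sensor has to be upscaled heavily.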

Bokeh (Photo only)

Multiple cameras also permit depth estimation in mobile devices. This allows some of them to feature a “depth effect” or portrait mode that simulates the optical background blurring (bokeh) of traditional standalone cameras. (You can find a detailed description of how we test computational bokeh in this article.)

Our DXOMARK Camera (previously called DxOMark Mobile) protocol evaluates bokeh by judging how accurately blurring is applied, as well as the quality and smoothness of the blurring effect. We use the bokeh of a wide-open, professional-quality prime lens on a DSLR as a reference standard: for example, an 85mm f/1.8 lens on a full-frame DSLR (which is in turn similar to a 50mm f/1.8 lens on an APS-C format sensor).
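
The comparison between the two reference lenses rests on the crop factor of the smaller sensor. The sketch below shows the field-of-view arithmetic only. The crop factors of 1.5 and 1.6 are typical APS-C values assumed here for illustration, and the function name is hypothetical.

```python
def full_frame_equivalent_mm(focal_length_mm: float, crop_factor: float) -> float:
    """Focal length that gives the same field of view on a full-frame sensor."""
    return focal_length_mm * crop_factor


# A 50mm lens on APS-C, using two commonly quoted crop factors (assumed values).
print(full_frame_equivalent_mm(50, 1.5))            # 75.0
print(round(full_frame_equivalent_mm(50, 1.6), 1))  # 80.0
```

Both results fall in the classic short-telephoto portrait range, close to the 85mm full-frame reference.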

Conditions & challenges: Bokeh is an important element of many artistic compositions favored by photo enthusiasts. It can also reinforce the impact of portrait photographs.

Details: Testing for bokeh required entirely new test scenes and tests. We need to measure not only how well cameras blur the background, but also how well they deal with a variety of tricky situations, such as when the subject is holding onto another object, which can make it hard for the camera to separate the subject from the background. Some cameras use face detection to blur everything in the image that isn’t a face, but that approach is quite error-prone, and often leads to an aesthetically displeasing result.

Stabilization (Video only)

The Stabilization sub-score measures how well the camera eliminates motions that occur while capturing video.

Conditions & challenges: Unless you mount your phone on a steady tripod when shooting video, it will wobble a bit – no matter how carefully you hold it. In addition, you may even be shooting from a moving vehicle such as a bus or boat. For these and other reasons, handheld video often appears shaky. To minimize that effect, many smartphones offer either electronic or optical image stabilization when capturing video. A higher score here means steadier and more pleasing videos.

Details: Cameras may include optical stabilization, electronic stabilization, or both. Each type has pros and cons depending on the nature of the camera motion. Our protocol enables us to imitate different kinds of motion, including the camera shake that can occur during handheld shots, thus enabling us to test how successfully the camera’s stabilization system can compensate.

If you’re interested in more information about our Analyzer testing solution, click here.

A guide to the sub-scores that may be most important to you

We’ve listed some of the most important use cases in the sections above for each sub-score, but for easy reference, here are the sub-scores that are most important for various types of smartphone photographers.

Travel and vacation photographers

Everyone likes to chronicle their adventures. Correct exposure is particularly important here, given the challenging high-contrast scenes typical of most outdoor locations. Color accuracy is also critical for creating pleasing images of landscapes and famous landmarks. Zoom matters for capturing landmarks or distant objects, as well as for capturing portraits of your traveling companions or locals.

Family memory-makers

In addition to vacation photos, family memories often require capturing events indoors, under poor or uneven lighting. Here, flash becomes critical, as well as low noise. Autofocus is key to capturing emotional moments, along with stabilization for on-the-fly videos of important events. Bokeh scores will help you determine how effectively your portraits will “pop.”

Recording sports

Action photography requires excellent autofocus, in addition to good exposure, for both still and video capture. Stabilization is also very helpful for video. Zoom is essential for most sports, as the action is often too far away to be captured with a mobile device’s default wide-angle lens.

Photo enthusiasts

While most photos never make it much past social media, photo enthusiasts often want to make larger versions of their photos as screensavers or prints. For them, in addition to the requirements for the type of image they are capturing, it’s important to have a camera with low noise and good texture preservation, as well as good contrast, and few artifacts. Excellent bokeh is also a key element for creating portraits or other artistic shots that highlight the subject.

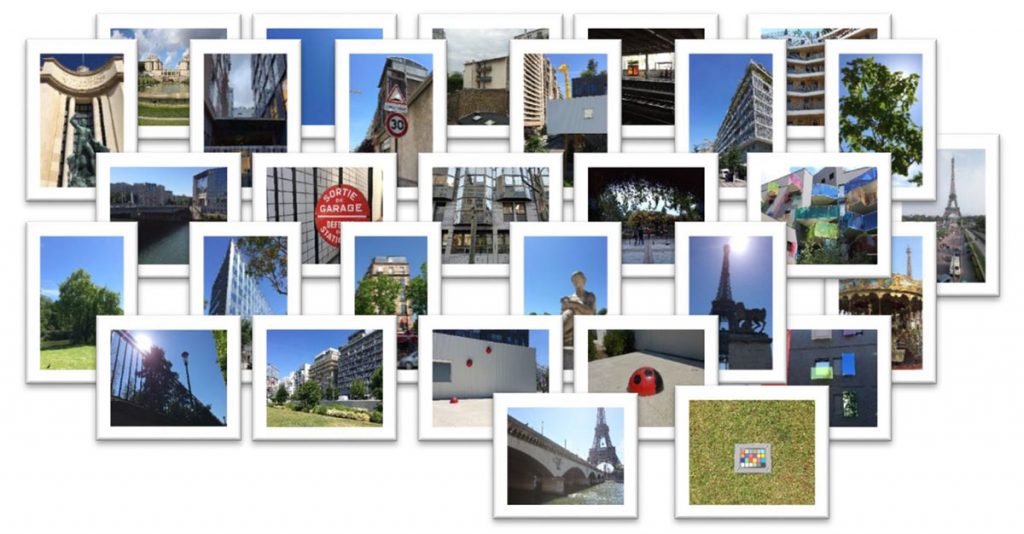

Each camera captures over 30 outdoor scenes several times each to measure repeatability. Each scene contains attributes that help us evaluate the perceived quality of the images for nearly every type of smartphone photography. We evaluate images using our unique perceptual scoring, which is based on our implementation of an image quality ruler methodology. Image quality rulers rely on the use of a set of known “anchor images” to which a test image is compared. This process is greatly enhanced by using experts to analyze the images and anchor images with content similar to the tested image. DXOMARK’s team of image experts and large library of existing test images from many devices make it possible to get consistent and accurate results using this process.
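
The idea behind an image quality ruler can be sketched in a few lines. This is a simplified illustration under stated assumptions: anchor images carry known quality values on an arbitrary scale, each expert places the test image between two adjacent anchors, and the placements are averaged. The scale, the placement format, and the function name are hypothetical and do not describe DXOMARK's actual implementation.

```python
def ruler_score(anchor_scores, placements):
    """Average expert placements of a test image along a ruler of anchor images.

    anchor_scores: quality values of the anchor images, sorted ascending.
    placements: one (lower_anchor_index, fraction) pair per expert, meaning the
        test image sits `fraction` of the way from that anchor to the next one.
    """
    estimates = []
    for index, fraction in placements:
        if not 0 <= index < len(anchor_scores) - 1:
            raise ValueError("lower anchor index out of range")
        if not 0.0 <= fraction <= 1.0:
            raise ValueError("fraction must be between 0 and 1")
        low, high = anchor_scores[index], anchor_scores[index + 1]
        estimates.append(low + fraction * (high - low))
    return sum(estimates) / len(estimates)


# Hypothetical ruler with four anchors and three expert judgments.
anchors = [10.0, 20.0, 30.0, 40.0]
judgments = [(1, 0.5), (1, 0.0), (2, 0.0)]
print(ruler_score(anchors, judgments))  # 25.0
```

Because every test image is compared against the same calibrated anchors, scores from different devices and different sessions remain comparable.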

An additional 15 indoor scenes are designed to be particularly helpful in analyzing the suitability of the smartphone camera for family memory-makers and others working in artificial and low-light conditions. As with the outdoor scenes, we evaluate these indoor scenes using our perceptual methodology that employs image quality rulers.

DXOMARK Camera reviews (previously called DxOMark Mobile)

Our reviews are designed to provide you with both the scores and sub-scores for a device, along with analyses that may be useful in helping you compare multiple devices. We also often include comparison images that show how different cameras perform in the same situation, although in many cases those images are simply for illustration, and were not part of the actual tests. Similarly, we often include images to illustrate a particular point, or for you to evaluate, that may not have been used in testing. Since launching the new version of DxOMark Mobile, however — and this is important — we have been able to publish some of the full-resolution original images we used in testing so that you can make your own comparisons.

Frequently Asked Questions

What camera modes do you use for testing?

We generally do all testing using the default mode of the smartphone and using its default camera application. However, there are a few exceptions. Flash modes, zoom factor, and portrait (depth or bokeh) mode are selected manually when evaluating those capabilities. In video mode we use the resolution setting and frame rate that provides the best video quality. For example, a smartphone might offer a 4K video mode but use 1080p Full-HD by default. In these circumstances we will select 4K resolution manually.

Aside from the difficulty in testing an arbitrary number of camera apps and modes, this approach reflects the experience of the vast majority of smartphone photographers, who use their devices in the default setting, and also of the phone vendor, who presumably makes an effort to have the default setting present the camera at its best.

Do you review pre-production cameras?

All our published mobile device reviews are written based on tests performed using commercially-available devices with current production firmware, the same as a retail buyer would receive.

Why are some devices tested more quickly than others?

First, we need to be able to acquire a production unit and have time to test it. Also, we do have to prioritize our efforts, given the large amount of time and resources each test and review require, so devices with a large market impact tend to be tested more quickly after launch.

How much time does it take to test and review a mobile device camera?

The test process involves a team of engineers for about ten days, capturing photos and video both in the lab and outdoors, analyzing data, and providing perceptual evaluations. Then our writers need additional time to create a review from the test results and to publish it along with sample and comparison images.

What are you going to do now that devices are already getting scores of 100?

Our scores are not capped at 100. We’ve already had a couple of devices score over 100 in certain categories.

How come the Overall score is sometimes higher than the sub-scores?

The Overall score is not a weighted average of sub-scores; it is a mapping based on the sub-scores.

Why does some of the sample image metadata say that the image was created on a computer?

Smartphone vendors have started experimenting with additional options for rendering richer color and wider tonal ranges. In particular, some are using the new HEIF image format instead of JPEG, and the DCI-P3 colorspace instead of sRGB. In the case of HEIF, we need to convert the images to JPEG for you to see them in your browser. We do not do that with the images used in testing, so this conversion only affects the copies of the images we upload for your reference. Similarly, we need to convert DCI-P3 images to sRGB for them to look good on many consumer monitors, but this affects neither the tests nor the test results, only the image versions we provide with the reviews.

Why should zoom and bokeh affect the Overall score?

As smartphone buyers come to expect additional advanced performance capabilities, it makes sense to include them in the DXOMARK scores and rankings. Advanced zoom capability and portrait mode are two of the most popular new features that have been added to smartphones in recent years. If they are not important to you, then of course don’t worry about those sub-scores and instead rely on our other sub-scores for each device.
