Thanks to innovative technologies such as dual cameras and multi-image stacking, modern smartphone cameras can match traditional cameras in many areas. Add ultimate portability to the mix, and it is no surprise that smartphones have become very popular among amateur documentary, landscape, and street photographers. Even some professional photographers and journalists on occasion use the device in their pocket for paid assignments.

However, there is one type of photography that has so far firmly remained in the domain of DSLRs and other large-sensor camera systems: portraiture. In portrait photography, a photographer will typically accentuate the subject by capturing it in front of an out-of-focus background. Thanks to the laws of physics, this is easily achieved with a fast lens on a full-frame sensor camera. Smartphones, on the other hand, struggle to blur the background in an image. With their small image sensor, they produce an almost infinite depth of field, rendering the background almost as sharp as the subject itself. The difference between DSLR and smartphone portraits is immediately obvious, even to people who are not photography experts.

That said, the scene is currently shifting, with smartphone manufacturers working on ways to computationally simulate the shallow depth of field and bokeh that DSLR cameras and lenses can provide. This is why we decided to include tests for this new feature in our updated DxOMark Mobile testing protocol. We now test shallow depth-of-field simulation (so-called bokeh modes) in a laboratory setup, looking at both the quality of the bokeh (depth of field and shape) and the artifacts often induced when isolating a subject. In this article, we describe our approach to testing computational bokeh on smartphones. (If you are interested in more details, you can download our scientific paper on the subject here. For more details about the technologies used for computational bokeh processing and their impact on the performance, please read our article on disruptive technologies in smartphone imaging.)

What is bokeh?

Bokeh originally refers to the aesthetic quality of blur in the out-of-focus parts of an image, especially out-of-focus points of light. Unlike depth of field, it has no precise definition. Aperture blades, optical vignetting, and spherical and chromatic aberrations all have an impact on how a lens renders bokeh. More recently, the term has become synonymous with out-of-focus blur and commonly includes the size of out-of-focus points of light. A larger radius for these points means stronger bokeh.

Computational bokeh on smartphones

To simulate the bokeh and shallow depth of field of DSLRs, some current high-end smartphones feature a secondary rear camera with a longer (35mm-equivalent) focal length lens than the lens on the main camera. The longer lens on its own isn’t capable of generating a DSLR-like bokeh, however. While it is a significant improvement in terms of perspective, its depth of field can be even wider than that of the main camera’s lens. This is because smartphone manufacturers have to use very small sensors and apertures to squeeze a lens with a longer equivalent focal length into the slim form factor of modern smartphones. As a result, the depth of field of the telephoto lens can be twice as wide as that of the lens on the main camera.
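To get a feel for the scale of the problem, the standard thin-lens depth-of-field formulas can be evaluated for a full-frame portrait setup and for a phone camera. The sketch below is only an illustration: the focal lengths, f-numbers, the crop factor of 7, and the 0.03 mm full-frame circle of confusion are assumed example values, not measurements of any tested device, and `depth_of_field_mm` is a hypothetical helper name.

```python
import math

FULL_FRAME_COC_MM = 0.03  # assumed circle of confusion for a full-frame sensor


def depth_of_field_mm(focal_mm, f_number, subject_mm, crop_factor=1.0):
    """Return (near, far) limits of acceptable sharpness in mm (thin-lens model)."""
    # A smaller sensor is enlarged more for viewing, so its circle of confusion shrinks.
    coc_mm = FULL_FRAME_COC_MM / crop_factor
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        return near, math.inf  # everything behind the subject is acceptably sharp
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


# Hypothetical setups, both focused on a subject 2 m away.
dslr_near, dslr_far = depth_of_field_mm(focal_mm=85, f_number=1.8, subject_mm=2000)
phone_near, phone_far = depth_of_field_mm(
    focal_mm=4, f_number=1.8, subject_mm=2000, crop_factor=7
)

print(f"full frame, 85 mm f/1.8: {(dslr_far - dslr_near) / 1000:.2f} m in focus")
print(f"phone, 4 mm f/1.8:       {(phone_far - phone_near) / 1000:.2f} m in focus")
```

With these assumed numbers, the full-frame setup keeps only a few centimeters around the subject sharp, while the phone keeps tens of meters sharp. The two setups do not frame the subject identically, so the comparison shows the order of magnitude rather than a matched pair of shots.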

This is why smartphones require advanced image processing to computationally generate a background-blur. Doing so isn’t without challenges, however. Instead of a binary separation between subject and background, which only works for very simple scenes occupying only two planes, most situations require a high-quality depth map to generate a natural-looking bokeh effect that is free of artifacts.

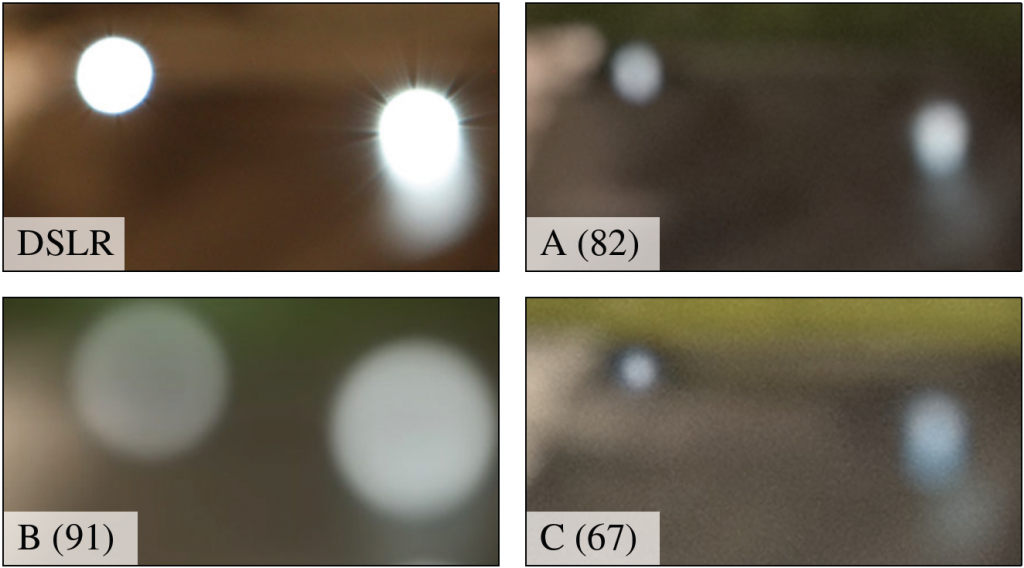

Creating the depth map is only one part of the story, though. In addition, blur has to be applied to out-of-focus areas in a convincing way, and that’s more complex than simply applying Gaussian blur and making its radius a parameter of depth. For example, with an optical bokeh, light spots in a scene are not typically blurred. Instead, they usually take on the shape of the lens aperture (which is usually circular). While this is not ideal for blurring distracting background details, people are used to this particular look and associate it with professionally-shot photographs and movies.
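The difference between a Gaussian blur and an aperture-shaped blur is easiest to see on a single point of light, because blurring an isolated light spot simply reproduces the shape of the blur kernel. The following minimal sketch builds both kernels in plain Python; `disc_kernel` and `gaussian_kernel` are hypothetical helper names, the radius and sigma are arbitrary, and the code illustrates the idea rather than any manufacturer's rendering pipeline.

```python
import math


def disc_kernel(radius):
    """Uniform circular kernel, a simple stand-in for a round lens aperture."""
    size = 2 * radius + 1
    kernel = [[1.0 if (x - radius) ** 2 + (y - radius) ** 2 <= radius ** 2 else 0.0
               for x in range(size)] for y in range(size)]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


def gaussian_kernel(radius, sigma):
    """Gaussian kernel truncated to the same support and normalized to sum to 1."""
    size = 2 * radius + 1
    kernel = [[math.exp(-((x - radius) ** 2 + (y - radius) ** 2) / (2 * sigma ** 2))
               for x in range(size)] for y in range(size)]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


radius = 6
disc = disc_kernel(radius)
gauss = gaussian_kernel(radius, sigma=radius / 2)

# Profile through the center row: how a single blurred light spot would look.
disc_profile = disc[radius]
gauss_profile = gauss[radius]
print("disc    :", " ".join(f"{v:.3f}" for v in disc_profile))
print("gaussian:", " ".join(f"{v:.3f}" for v in gauss_profile))
```

The disc profile is flat across its whole width and then stops abruptly, which is the sharply bordered spot people associate with optical bokeh. The Gaussian profile peaks in the center and fades gradually, so a light spot turns into a soft blob instead.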

Specular highlights in out-of-focus areas are another example. With optical bokeh, they hit the sensor as large blur spots, covering a large number of pixels. On smartphones, however, they are concentrated on only a few pixels, which tend to saturate. The true intensity of the highlight is therefore lost.
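The effect of saturation can be reproduced with a one-dimensional toy example. The sketch below assumes a highlight 40 times brighter than the sensor's white level (an arbitrary value chosen for illustration) and compares blurring before clipping, as a lens does, with blurring after clipping, as a naive computational approach would. The function names are illustrative only.

```python
def box_blur(signal, radius):
    """Average each sample with its neighbors; samples outside the signal count as 0."""
    width = 2 * radius + 1
    blurred = []
    for i in range(len(signal)):
        window = [signal[j] for j in range(i - radius, i + radius + 1)
                  if 0 <= j < len(signal)]
        blurred.append(sum(window) / width)
    return blurred


def clip(signal, white_level=1.0):
    """Model sensor saturation: nothing can be recorded above the white level."""
    return [min(v, white_level) for v in signal]


# Dark background (0.05) with one specular highlight at 40x the white level (assumed).
scene = [0.05] * 41
scene[20] = 40.0

optical = clip(box_blur(scene, radius=10))        # lens blurs first, sensor clips after
computational = box_blur(clip(scene), radius=10)  # sensor clips first, software blurs after

print(f"optical peak:       {max(optical):.2f}")
print(f"computational peak: {max(computational):.2f}")
```

In the first case the highlight renders as a 21-pixel spot at full white. In the second it becomes a faint smear at roughly a tenth of the white level, because the clipped pixel no longer carries the true energy of the highlight.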

Smartphone manufacturers have come up with a number of approaches to computational bokeh, using a range of methods and technologies, including focus stacking, structure from motion, dual cameras (stereo vision), dual pixels, and even dedicated depth sensors. Our goal at DxOMark was to develop a testing methodology that would allow for comparison between all tested smartphones (including those tested in the past) and DSLRs.

The DxOMark approach to bokeh testing: Perceptual evaluation

When developing previous tests, we found that the most reliable and robust “tool” for evaluating complex image elements, such as grain on human skin or the texture of human hair, is the human visual system. However, the downside to using the human visual system for image quality evaluation is a lack of repeatability. That said, we can greatly improve repeatability by breaking the evaluation down into simple, well-defined questions, each of which yields highly repeatable results under perceptual evaluation.

For example, to assess noise, we present a particular detail in our lab scene and ask trained people to evaluate the noise (and only the noise) compared to a stack of reference images. The repeatability can be further improved when we repeat this test for several details in the scene. This “lab scene method” combines the repeatability of traditional test charts with the robustness of human perception against all kinds of image processing trickery. It correlates better with real-world image quality experiences than evaluations based purely on test charts.

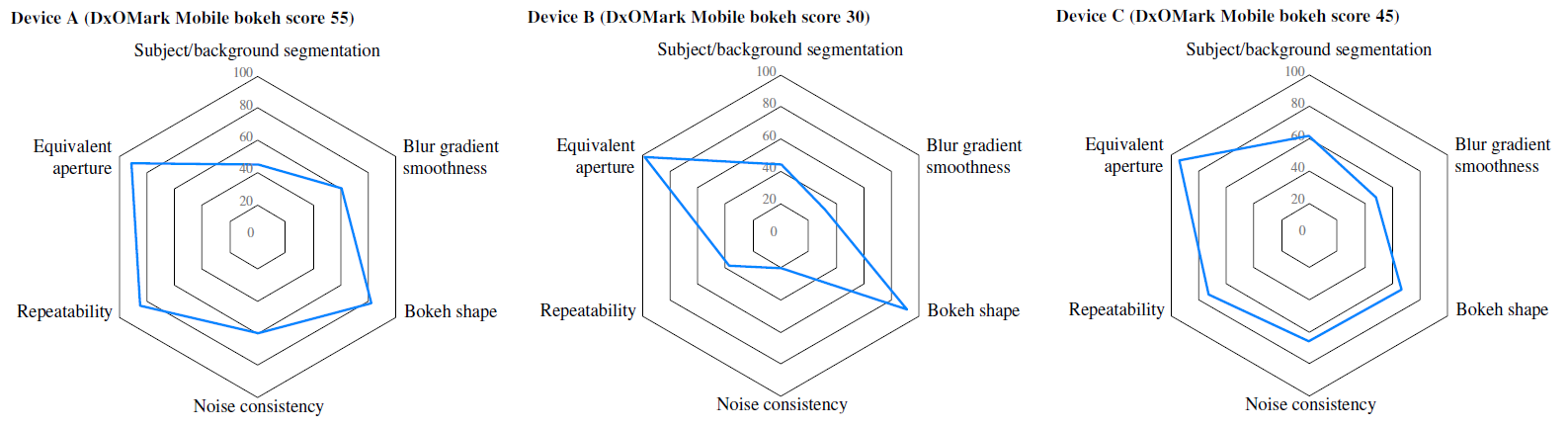

We have applied our lab scene method to the problem of computational bokeh for testing and scoring, using a three-dimensional test scene (shown in the image above) and the following evaluative criteria:

- Subject/background segmentation: Precise segmentation between subject and background is key to obtaining DSLR-like results. Current implementations suffer from depth-map resolution that is too low, leading to imprecise edges and depth estimation errors. The latter usually result in sharp zones in the background and/or blurred zones on the subject, particularly when capturing moving scenes.

- Equivalent aperture: When looking at computational bokeh, the equivalent aperture no longer depends on the physical characteristics of the camera, but rather on the image processing applied. We estimate this value by comparing the level of blur in certain areas of our test scene to that of a full-frame DSLR at different apertures.

- Blur gradient: Blur intensity should change with depth. A basic portrait might consist of only two planes that are more or less parallel to the sensor plane, but most scenes have a more complex three-dimensional composition. Smooth blur gradients are therefore important to achieve a DSLR-like bokeh.

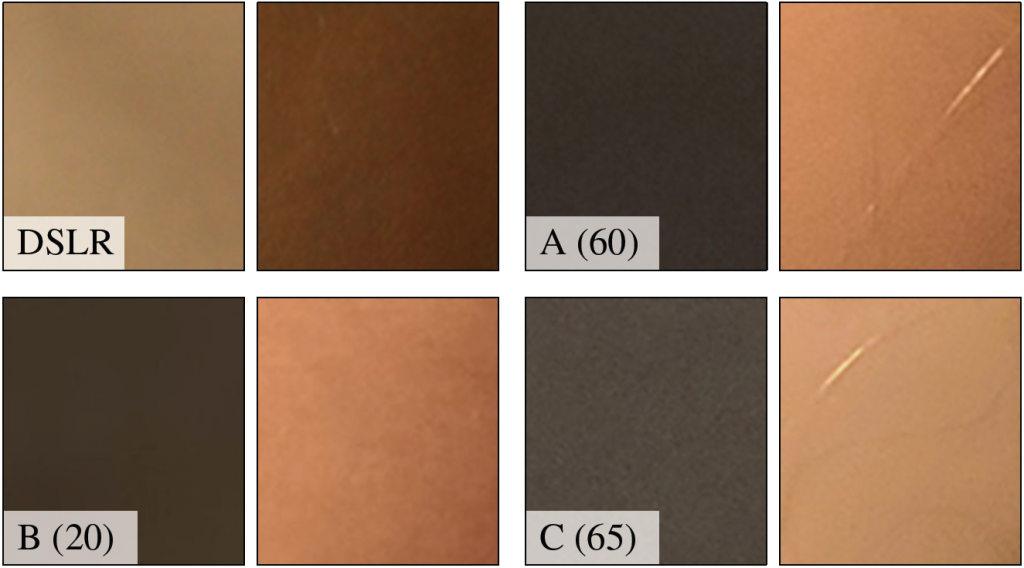

- Noise consistency: When looking at computational bokeh images in more detail, we can observe an interesting side effect of the simulation: the computationally blurred areas are totally free of grain. This comes as little surprise, as blurring is a well-known denoising technique. However, it leads to the bokeh having an unnatural appearance, since a DSLR optically creates blur before the light rays even hit the sensor. In a DSLR image, noise is therefore the same in in-focus and out-of-focus areas.

- Bokeh character: “Character” is what photographers have in mind when discussing bokeh. Unfortunately, there is no general consensus on what a perfect bokeh should look like. That said, computational bokeh seems to be simpler than the optical variant in this respect. No current smartphones simulate optical vignetting, which causes the bokeh shape to vary across the frame, nor do they imitate non-circular irises, which create a non-circular bokeh shape. Nor are there any purple or green fringes (caused by chromatic aberrations), “soap-bubbles” (caused by over-corrected spherical aberrations), or “donuts” (caused by catadioptric lenses). Some photographers rely on these effects to achieve a particular look, but smartphone manufacturers seem to think that they do not appeal to the average user, and so deliver circular shapes of varying sharpness.

- Repeatability: Optical bokeh is independent of exposure. Computational bokeh, however, tends to work best in bright conditions. More light increases the signal-to-noise ratio in the images and facilitates the computation of the depth map. But even under consistent lighting, we find that many devices behave in an unstable way. Without any obvious reason, some artifacts are visible on one image but not on another taken immediately afterwards. Sometimes bokeh mode fails altogether and the image is captured without any simulated background blur whatsoever.
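As a point of reference for the blur gradient criterion above, the thin-lens model describes how an optical blur disc grows with distance from the focus plane. The sketch below evaluates that relationship for an assumed 85 mm f/1.8 lens focused at 2 m; these are example inputs rather than part of the test protocol, and `blur_disc_mm` is a hypothetical helper name.

```python
def blur_disc_mm(focal_mm, f_number, focus_mm, object_mm):
    """Diameter on the sensor of the blur disc for a point at object_mm (thin lens)."""
    aperture_mm = focal_mm / f_number
    return (aperture_mm * focal_mm * abs(object_mm - focus_mm)
            / (object_mm * (focus_mm - focal_mm)))


# Points at increasing distance behind a subject focused at 2 m.
distances_mm = [2000, 2250, 2500, 3000, 4000, 8000]
diameters = [blur_disc_mm(85, 1.8, 2000, d) for d in distances_mm]

for d, b in zip(distances_mm, diameters):
    print(f"{d / 1000:.2f} m -> blur disc {b:.3f} mm")
```

The diameter is zero at the focus plane and increases continuously behind it, without steps. A simulation that assigns one blur level to everything classified as background cannot reproduce this progression.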

Evaluating and measuring bokeh

This section describes how we actually measure and score the test criteria discussed above in our lab scenes. For the DxOMark Mobile protocol, we complement the lab measurements with a set of indoor and outdoor “real-life” test scenes. These are less repeatable than the studio scenes, but having a large variation of images allows us to double-check and confirm the lab results.

Subject/background separation

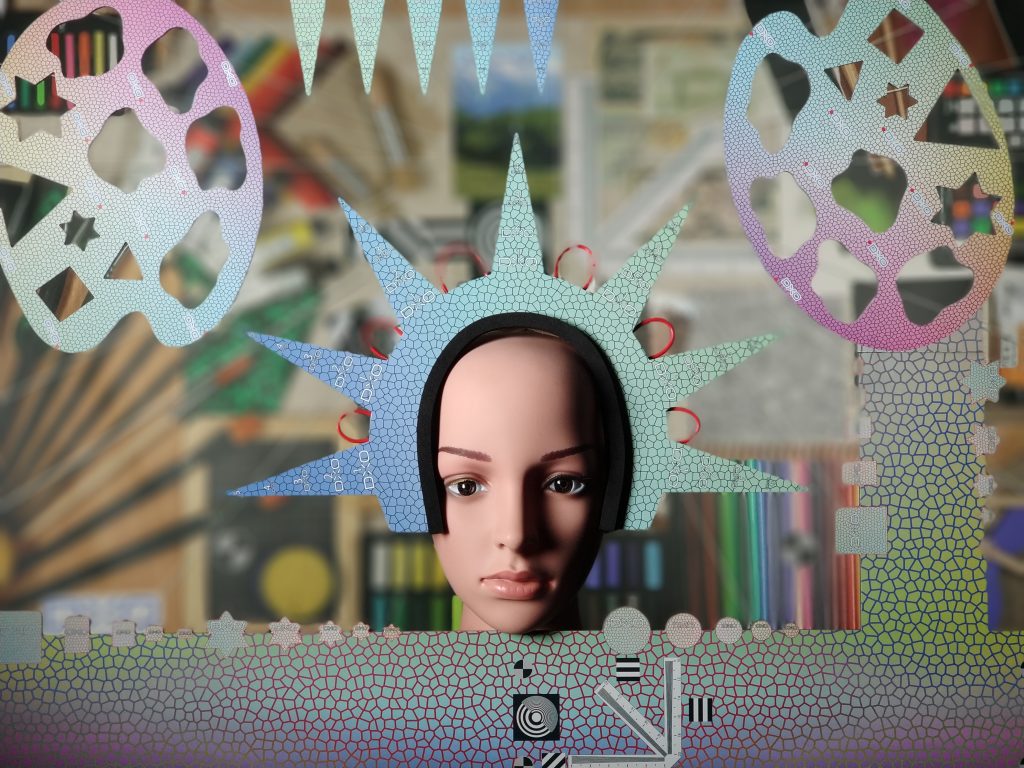

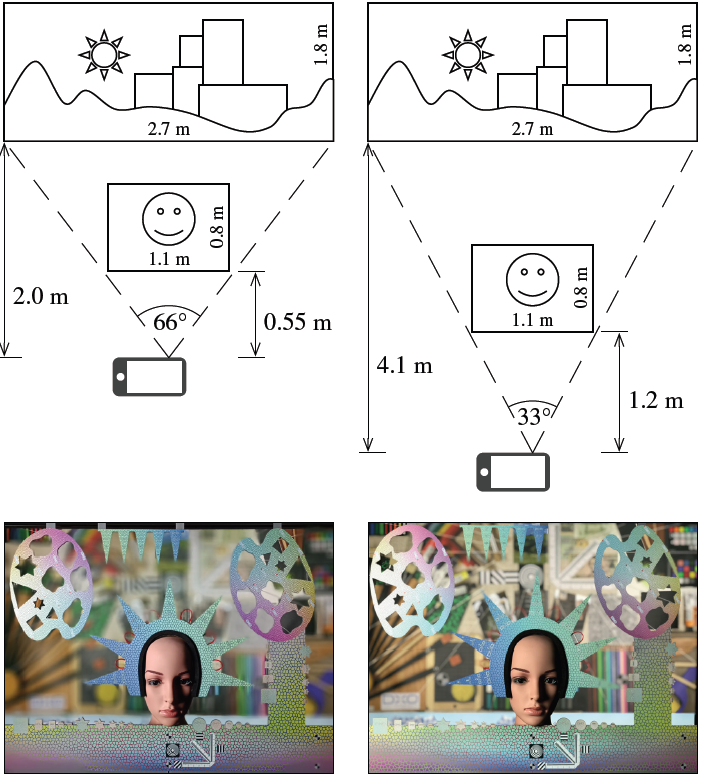

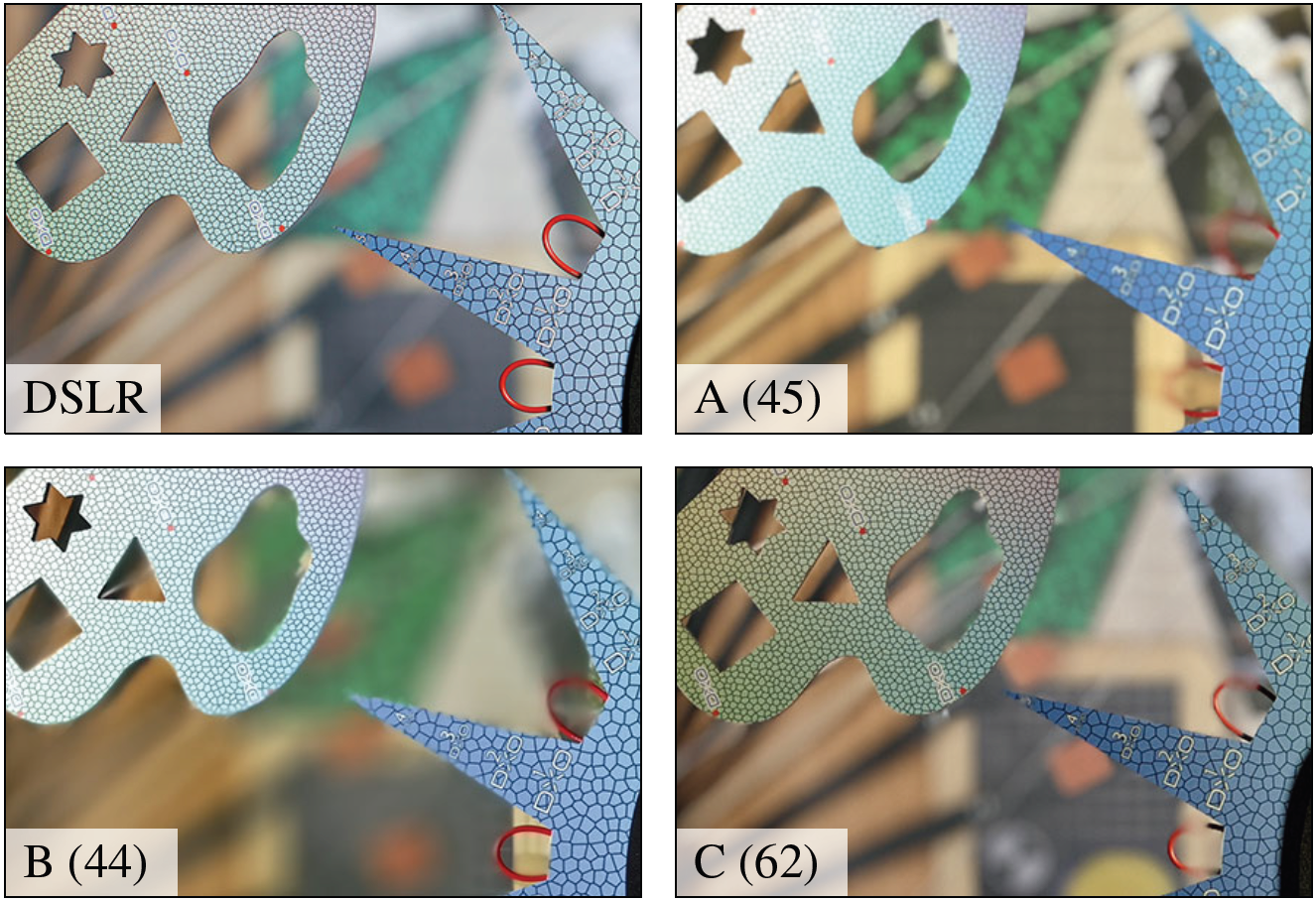

Our lab setup consists of two planes, a subject and a background, with the distance between the two adjustable. We first measure the equivalent focal length of the test device, and then we adjust the distances among the device, the subject, and the background accordingly (figure 10). Computer-controlled lighting ensures uniform illumination on both planes, independent of focal length. The main subject in our scene is a mannequin head surrounded by a complex shape to simulate a person’s face, headdress, and a waving hand. The background is a large-format print of our noise and details lab scene, with the addition of several straight white lines.

The variety of features on both the subject plane and the background allows us to quantitatively evaluate the subject/background segmentation in terms of both precision and reliability. For example:

- One point is scored for each of the straight white lines in the background that is blurred along its entire length.

- One point is scored for each of the holes in the “hand” on the top right that is as blurry as the rest of the background.

- Each of the spikes surrounding the face has small numbers from 1 to 5 printed on it. One point is scored for each number that is readable. An extra point is scored if the number is as sharp as the rest of the subject.
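The example rules above translate naturally into a simple tally. The following is a minimal sketch of such a tally, not the actual DxOMark scoring code: the data structure, the function name `segmentation_points`, and the assumption that the extra sharpness point is only awarded to a number that is also readable are all illustrative choices.

```python
from dataclasses import dataclass


@dataclass
class SpikeNumber:
    """Observer's judgment for one printed number on a spike (hypothetical structure)."""
    readable: bool
    as_sharp_as_subject: bool


def segmentation_points(lines_fully_blurred, holes_as_blurry_as_background, spike_numbers):
    """Tally points from per-feature observations, following the example rules."""
    points = sum(1 for blurred in lines_fully_blurred if blurred)
    points += sum(1 for blurred in holes_as_blurry_as_background if blurred)
    for number in spike_numbers:
        if number.readable:
            points += 1
            # Assumption: the extra point requires the number to be readable first.
            if number.as_sharp_as_subject:
                points += 1
    return points


# Made-up observations for one test image.
example_points = segmentation_points(
    lines_fully_blurred=[True, False, True],
    holes_as_blurry_as_background=[True, True],
    spike_numbers=[
        SpikeNumber(readable=True, as_sharp_as_subject=True),
        SpikeNumber(readable=True, as_sharp_as_subject=False),
        SpikeNumber(readable=False, as_sharp_as_subject=False),
    ],
)
print(example_points)
```

Because each rule is a yes/no question about one small feature, different observers are likely to arrive at the same tally for the same image.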

Blur quality

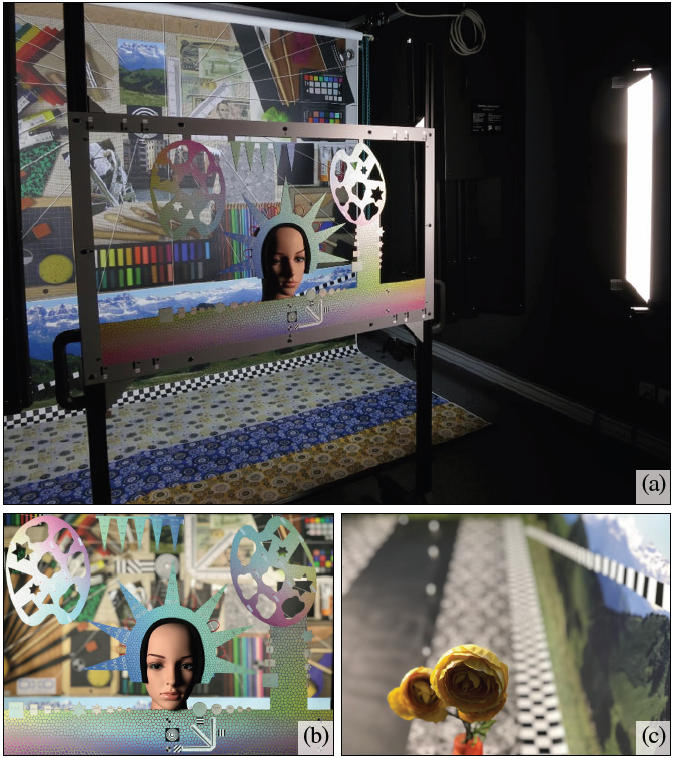

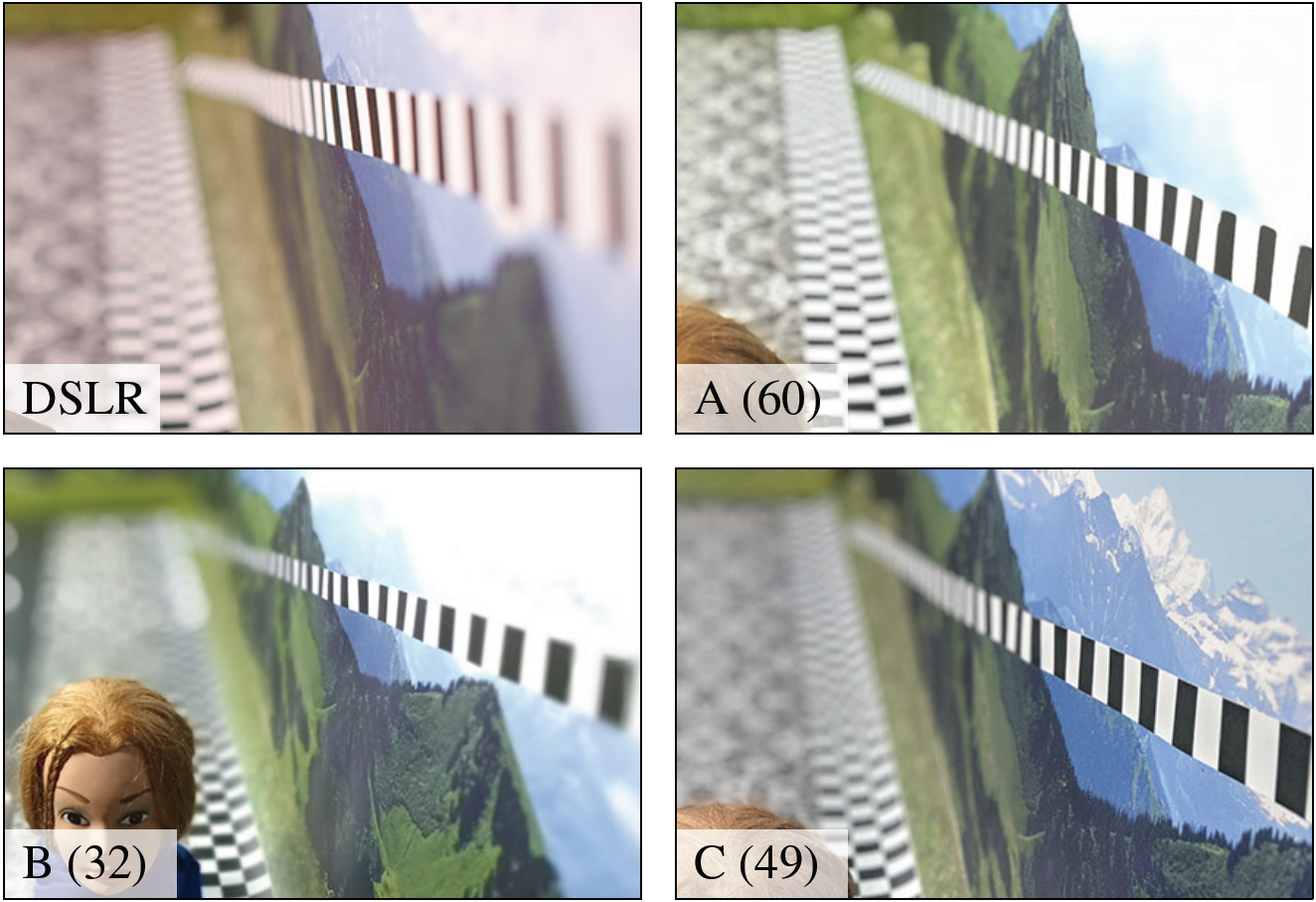

To analyze the quality of blur, we use a second scene which consists of a portrait subject (mannequin head) or macro object (plastic flower) in the foreground, and two additional planes on the right and at the bottom that are almost parallel to the optical axis. These planes are covered with regular patterns and extend in front of the subject. In the far back, we place some tiny LEDs that serve as points of light. This scene allows us to evaluate all of the criteria described in the previous section, apart from subject/background separation.

To determine the equivalent aperture of the bokeh simulation mode, we shoot the scene with a full-frame DSLR at various focal lengths and apertures. The smartphone image is then compared to these reference shots.
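One way to picture this comparison is as a nearest-match lookup against the reference series. The sketch below assumes that the blur in a chosen region can be summarized as a single number (here a blur-spot diameter in pixels); both that metric and the reference values are invented placeholders for illustration, whereas the actual comparison described here is made against the reference shots themselves.

```python
def equivalent_aperture(phone_blur_px, reference_blur_px):
    """Return the reference f-number whose blur is closest to the phone's blur."""
    return min(reference_blur_px,
               key=lambda f_number: abs(reference_blur_px[f_number] - phone_blur_px))


# Placeholder blur measurements for DSLR reference shots at one focal length.
# These numbers are made up; real values would come from the reference images.
reference_blur_px = {1.8: 42.0, 2.8: 27.0, 4.0: 19.0, 5.6: 13.5, 8.0: 9.5}

# Placeholder measurement from a smartphone bokeh-mode image of the same scene.
phone_blur_px = 25.0

match = equivalent_aperture(phone_blur_px, reference_blur_px)
print(f"closest reference: f/{match}")
```

The result depends only on the rendered image, which is consistent with the point that the equivalent aperture of a bokeh mode is set by the processing rather than by the physical lens.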

We observe blur gradient smoothness on the regular patterns at the bottom and on the line of black and white squares on the right in the scene. Having the same pattern at all distances reveals even small inconsistencies that would go unnoticed in many real-world scenes. We analyze noise consistency by comparing gray patches on the in-focus subject and in the background. Any differences in terms of grain will be the result of computational bokeh processing.
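The reason the gray patches differ can be shown with synthetic data. The sketch below generates a noisy gray patch, blurs a copy of it the way a simulated background would be blurred, and compares the grain of the two using the standard deviation as a simple noise measure. The patch size, noise level, and box filter are assumptions made for the illustration; the evaluation described here is perceptual.

```python
import random
import statistics


def noisy_gray_patch(size, mean=0.5, sigma=0.02, seed=0):
    """Uniform gray patch with Gaussian sensor-like noise (synthetic stand-in)."""
    rng = random.Random(seed)
    return [[rng.gauss(mean, sigma) for _ in range(size)] for _ in range(size)]


def mean_filter(patch, radius):
    """Box-blur the patch, keeping only pixels whose full window fits inside it."""
    size = len(patch)
    width = 2 * radius + 1
    out = []
    for y in range(radius, size - radius):
        row = []
        for x in range(radius, size - radius):
            window = [patch[y + dy][x + dx]
                      for dy in range(-radius, radius + 1)
                      for dx in range(-radius, radius + 1)]
            row.append(sum(window) / (width * width))
        out.append(row)
    return out


def grain(patch):
    """Standard deviation of all pixel values, used as a simple noise measure."""
    return statistics.pstdev([v for row in patch for v in row])


subject_patch = noisy_gray_patch(32)                        # in-focus area, untouched
background_patch = mean_filter(noisy_gray_patch(32, seed=1), radius=2)  # blurred area

ratio = grain(background_patch) / grain(subject_patch)
print(f"background grain relative to subject grain: {ratio:.2f}")
```

A ratio close to 1 corresponds to the DSLR case, where noise is added after the optical blur and is the same everywhere. A ratio well below 1 indicates that the blur was applied after capture and has smoothed the grain away.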

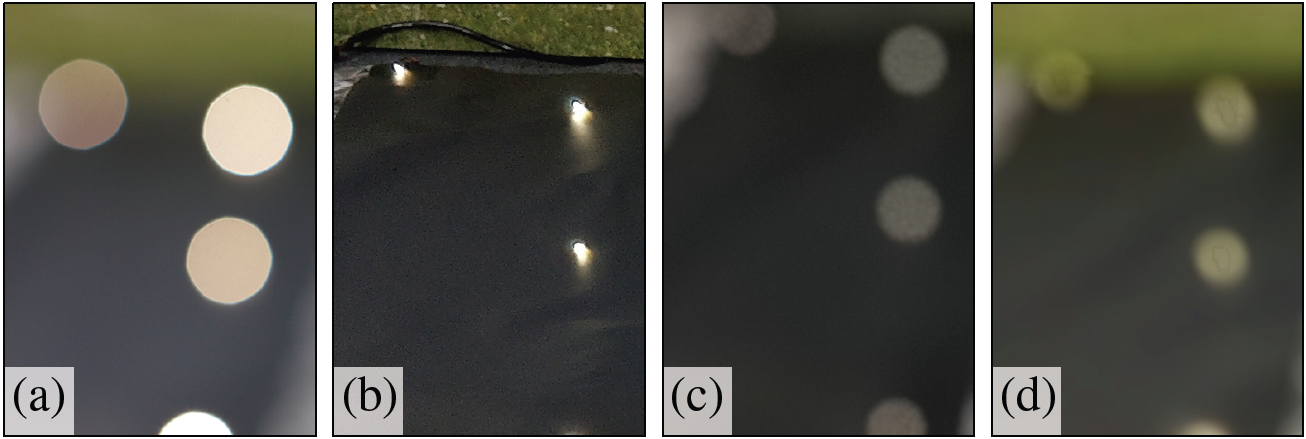

We can analyze bokeh shape by looking at the LED light spots in the background. With our current approach, we are looking for a circular shape with rather sharp borders. Artifacts such as those shown in the smartphone results below should be avoided, as they do not resemble the optical bokeh of any lens. But this also means that DSLRs will not necessarily achieve the highest score in this category, given that their optical bokeh is not always circular.

Repeatability

To evaluate repeatability, we capture a number of test scenes. For each scene, we capture five test samples at two levels of illumination, 1000 Lux and 50 Lux. We then perceptually assess the repeatability by comparing images of the same scene variation.

Computation of results

The individual measurements obtained during the testing process are aggregated into sub-scores using empirical formulas. These sub-scores are then aggregated into a single-value bokeh score. The scoring system is designed to award the best scores to devices that provide the best trade-off between bokeh quality and repeatability. The theoretical best score, attained by a DSLR with a good fast lens and circular aperture, is 100. Current smartphones differ in the hardware they use and in the processing and tuning applied. They can’t (yet) match DSLRs, but results from the current crop of high-end phones are already quite impressive. You can find scores and sample images for high-end smartphones from 2015 to 2017 in the bokeh section of our article on disruptive technologies in smartphone cameras.

While we use DSLRs as a reference, in some situations computational bokeh has the potential to outperform the optical equivalent. For example, in a group portrait in which the subjects’ faces are located on slightly different planes, a DSLR photographer would have to stop down the lens to make sure all the faces are sharp, but this would also significantly reduce background blur. Computational bokeh, however, is not subject to the laws of physics. A smartphone camera could combine a depth of field that is wide enough to have all faces sharp with strong background blur. We are doing research into evaluation methods that can take such use cases into account. In the meantime, if you are interested in more details on the subject, you can download our scientific paper on “Image quality benchmarks for computational bokeh.”
