We put the Google Pixel 6 Pro through our rigorous DXOMARK Selfie test suite to measure its performance in photo and video from an end-user perspective. This article breaks down how the device fared in a variety of tests and several common use cases and is intended to highlight the most important results of our testing with an extract of the captured data.

Overview

Key front camera specifications:

- 11.1 MP sensor, 1.22 μm pixels

- f/2.2 aperture

- 94° field of view

- Fixed focus

- 4K/30 fps, 1080p/30 fps

Scoring

Sub-scores and attributes included in the calculations of the global score.

Google Pixel 6 Pro

18th

16th

Pros

- Generally good exposure in photo and video

- Accurate white balance and nice color

- Well-controlled noise

- Pretty wide dynamic range in video

- Neutral white balance and nice skin tones in video

- Effective video stabilization

The Google Pixel 6 Pro offers the best selfie camera currently available in the US market, besting such esteemed competition as Apple’s new iPhone 13 series and the Samsung Galaxy S21 Ultra. It also takes a position very close to the top of our global ranking, where it is surpassed only by the recent phones from Huawei.

The excellent Photo score is based on a great performance for exposure and color. Google’s HDR+ system delivers nicely exposed portrait subjects and good contrast, even in scenes with strong backlighting. Skin tones are rendered nicely for any type of skin and in all light conditions. Image artifacts are overall very well under control, too.

The video score is also one of the best we have seen. Stabilization stands out in this category: the camera very effectively compensates both for hand shake when holding the device and for movement of the face in the frame. Dynamic range is good, too, but not quite at the same high level as on the latest Apple devices.

Overall, the Pixel 6 Pro’s front camera hardware design delivers an excellent trade-off between a wide depth of field, which keeps all subjects in group shots in focus, and high light sensitivity, which helps produce good image quality in difficult low-light scenes. It’s therefore an easy recommendation for any passionate selfie shooter.

Test summary

About DXOMARK Selfie tests: For scoring and analysis, DXOMARK engineers capture and evaluate more than 1,500 test images both in controlled lab environments and in outdoor, indoor and low-light natural scenes, using the front camera’s default settings. The photo protocol is designed to take into account the user’s needs and is based on typical shooting scenarios, such as close-up and group selfies. The evaluation is performed by visually inspecting images against a reference of natural scenes, and by running objective measurements on images of charts captured in the lab under different lighting conditions from 1 to 1,000+ lux and color temperatures from 2,300K to 6,500K. For more information about the DXOMARK Selfie test protocol, click here. More details on how we score smartphone cameras are available here. The following section gathers key elements of DXOMARK’s exhaustive tests and analyses. Full performance evaluations are available upon request. Please contact us to find out how to receive a full report.

Photo

Google Pixel 6 Pro

149

Exposure

Google Pixel 6 Pro

99

Color

Google Pixel 6 Pro

110

Exposure and color are the key attributes for technically good pictures. For exposure, the main attribute evaluated is the brightness of the face(s) in various use cases and light conditions. Other factors evaluated are the contrast and the dynamic range, i.e., the ability to render visible details in both bright and dark areas of the image. Repeatability is also important because it demonstrates the camera's ability to provide the same rendering across consecutive shots.

For color, the image quality attributes analyzed are skin-tone rendering, white balance, color shading, and repeatability.
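Repeatability, as described above, can be illustrated with a small calculation. The following is a minimal sketch, not DXOMARK's actual method: it assumes that the mean face brightness of each shot has already been measured, and the function name and the sample readings are hypothetical.

```python
from statistics import mean, pstdev


def exposure_repeatability(face_luma):
    """Return (mean, spread) of face brightness over consecutive shots.

    face_luma: mean face luminance of each shot on any consistent
    scale (for example 0-255). The spread is the population standard
    deviation; a lower value means more repeatable exposure.
    """
    if len(face_luma) < 2:
        raise ValueError("need at least two consecutive shots")
    return mean(face_luma), pstdev(face_luma)


# Hypothetical readings for illustration only, not measured data.
steady = [118.0, 120.0, 119.0, 121.0]
erratic = [100.0, 135.0, 112.0, 131.0]

steady_mean, steady_spread = exposure_repeatability(steady)
erratic_mean, erratic_spread = exposure_repeatability(erratic)
```

In this sketch the two series have a similar average brightness, but the second one varies far more from shot to shot, which is what a repeatability evaluation is meant to expose.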

These samples show the Google Pixel 6 Pro’s exposure performance in bright light. Target exposure is generally accurate and more consistent across consecutive shots than on the competitors. Dynamic range is fairly wide and shadow contrast is better than on the comparison devices.

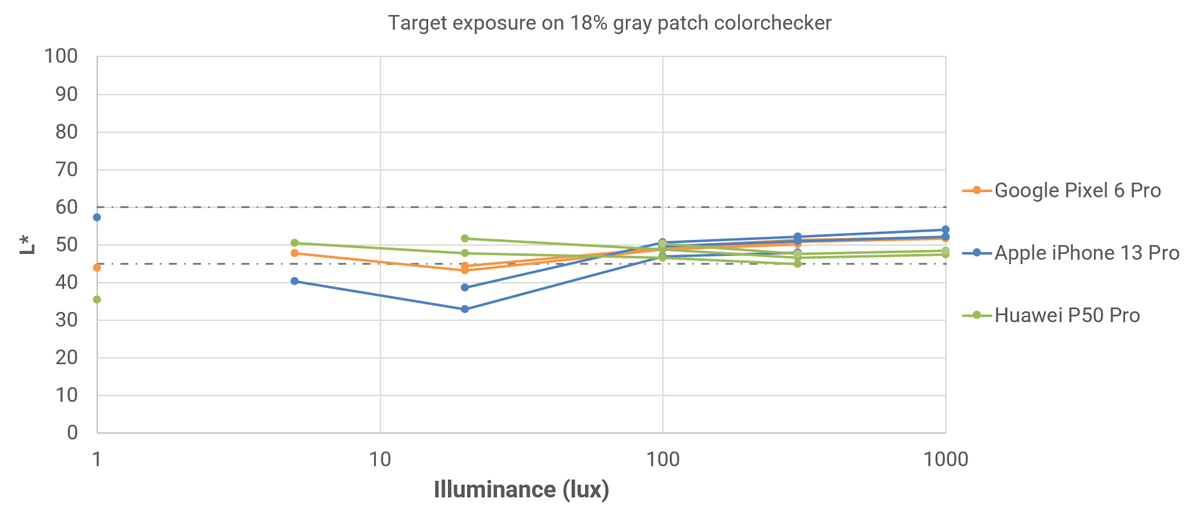

This graph shows the Google Pixel 6 Pro’s exposure performance across light levels.

These samples show the Google Pixel 6 Pro’s color performance in bright light. Skin tones and color are generally accurate. While many devices struggle to produce accurate white balance in scenes with monochromatic backgrounds, the Pixel 6 Pro delivers better results in such conditions than the iPhone 13 Pro and Huawei P50 Pro.

These samples show the Google Pixel 6 Pro’s color performance in an indoor setting. In this kind of scene, white balance is generally neutral with nice skin tones across all types of skins, even in challenging high-contrast shots. The Apple iPhone 13 Pro generally has a white balance cast with orange skin tone rendering.

Focus

Google Pixel 6 Pro

105

Autofocus tests evaluate the accuracy of the focus on the subject’s face, the repeatability of an accurate focus, and the depth of field. While a shallow depth of field can be pleasant for a single-subject selfie or close-up shot, it can be problematic in specific conditions such as group selfies; both situations are tested. Focus accuracy is also evaluated in all the real-life images taken, from 30 cm to 150 cm, and in low light to outdoor conditions.

These samples show the Google Pixel 6 Pro’s focus performance at a subject distance of 30 cm. At this close distance, the face is slightly out of focus, with lower sharpness than the comparison devices. At 120 cm (selfie stick distance) sharpness is on the same level as the iPhone 13 Pro.

Texture

Google Pixel 6 Pro

79

Texture tests analyze the level of detail and the texture of subjects in the images taken in the lab as well as in real-life scenarios. For natural shots, particular attention is paid to the level of detail in facial features, such as the eyes. Objective measurements are performed on chart images taken in various lighting conditions from 1 to 1,000 lux and different kinds of dynamic range conditions. The charts used are the proprietary DXOMARK chart (DMC) and the Dead Leaves chart.

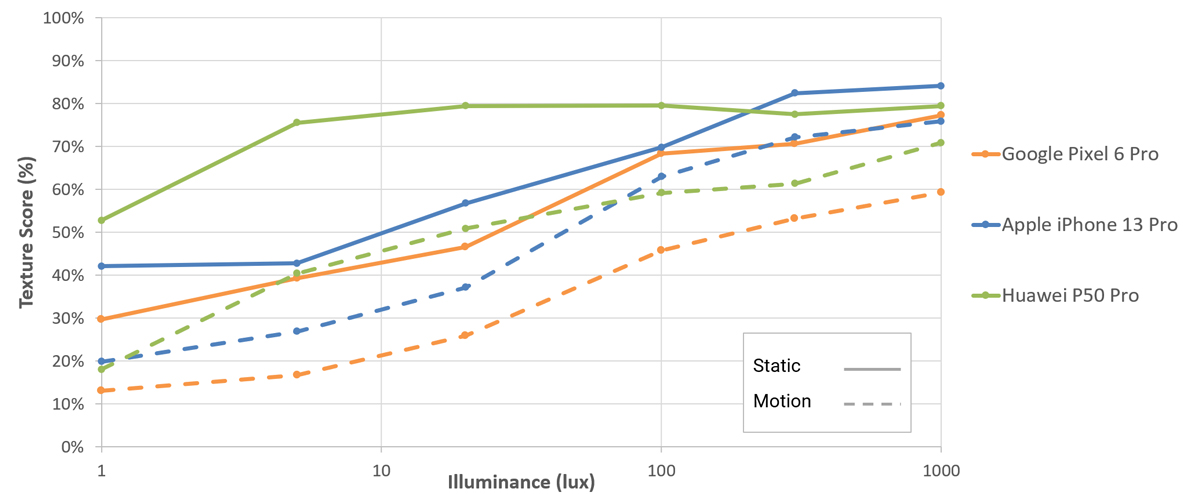

This graph shows the Google Pixel 6 Pro’s texture performance in the lab across different light levels. Measured texture acutance is slightly lower than for the iPhone and Huawei, especially in scenes with motion. This results in more loss of fine detail than on the comparison devices.

These samples show the Google Pixel 6 Pro’s texture performance indoors.

Noise

Google Pixel 6 Pro

94

Noise tests analyze various attributes of noise such as intensity, chromaticity, grain, and structure on real-life images as well as images of charts taken in the lab. For natural images, particular attention is paid to the noise on faces, but also on dark areas and high dynamic range conditions. Objective measurements are performed on images of charts taken in various conditions from 1 to 1,000 lux and different kinds of dynamic range conditions. The chart used is the DXOMARK Dead Leaves chart, and the analysis uses standardized measurements such as Visual Noise, derived from ISO 15739.

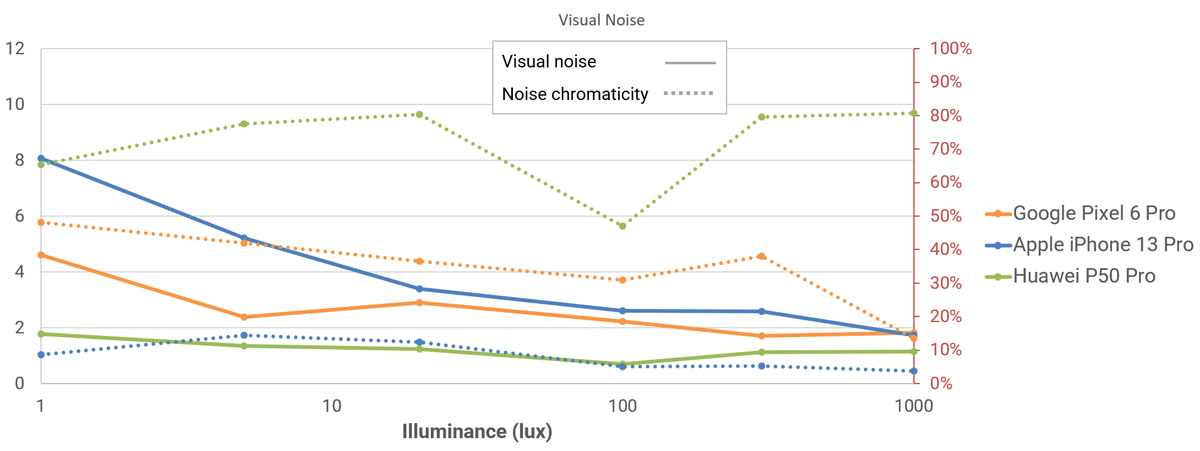

This graph shows the Google Pixel 6 Pro’s noise performance in the lab across different light levels.

Visual noise is a metric that measures noise as perceived by end-users. It takes into account the sensitivity of the human eye to different spatial frequencies under different viewing conditions.
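The idea of weighting noise by the eye's sensitivity to spatial frequency can be sketched as follows. This is a simplified illustration, not the ISO 15739 procedure and not DXOMARK's implementation: it assumes the Mannos-Sakrison approximation of the contrast sensitivity function, and the function names, the noise-spectrum format, and the viewing parameters are all hypothetical.

```python
import math


def csf(f_cpd):
    """Mannos-Sakrison contrast sensitivity approximation.

    f_cpd: spatial frequency in cycles per degree of visual angle.
    """
    return 2.6 * (0.0192 + 0.114 * f_cpd) * math.exp(-((0.114 * f_cpd) ** 1.1))


def pixels_per_degree(viewing_distance_mm, pixel_pitch_mm):
    """Number of displayed pixels covered by one degree of visual angle."""
    return 2.0 * viewing_distance_mm * math.tan(math.radians(0.5)) / pixel_pitch_mm


def perceived_noise(noise_power, viewing_distance_mm, pixel_pitch_mm):
    """Square root of the CSF-weighted noise power.

    noise_power: dict mapping spatial frequency (cycles per pixel)
    to noise power at that frequency (assumed input format).
    """
    ppd = pixels_per_degree(viewing_distance_mm, pixel_pitch_mm)
    weighted = sum(p * csf(f * ppd) ** 2 for f, p in noise_power.items())
    return math.sqrt(weighted)


# Same noise power placed at a medium and at a very high spatial frequency,
# viewed at 400 mm on a display with 0.1 mm pixels (assumed conditions).
mid_freq = perceived_noise({0.1: 1.0}, 400.0, 0.1)
high_freq = perceived_noise({0.5: 1.0}, 400.0, 0.1)
```

Under these assumptions, the medium-frequency noise yields the larger value, because the eye is much less sensitive to very fine patterns at that viewing distance. Changing the viewing distance or pixel size shifts which frequencies matter most.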

Artifacts

Google Pixel 6 Pro

91

The artifacts evaluation looks at lens shading, chromatic aberrations, distortion measurement on the Dot chart, and MTF and ringing measurements on the SFR chart in the lab. Particular attention is paid to ghosting, quantization, halos, and hue shifts on the face, among others. The more severe and the more frequent the artifact, the higher the point deduction on the score. The main artifacts observed and corresponding point loss are listed below.

Overall, our testers observed few artifacts on the Pixel 6 Pro, and in this respect the Google phone does better than many of its competitors. In these samples, you can see ghosting artifacts and white spots in low light.
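The principle that more severe and more frequent artifacts cost more points can be expressed as a toy calculation. The sketch below is purely illustrative: the product rule, the scales, the maximum deduction, and the example values are assumptions and do not reflect DXOMARK's actual scoring formula or measured results.

```python
from dataclasses import dataclass


@dataclass
class ArtifactObservation:
    name: str
    severity: float   # 0.0 (invisible) to 1.0 (very disturbing), assumed scale
    frequency: float  # fraction of test images showing the artifact, 0.0 to 1.0


def point_deduction(obs, max_points=10.0):
    """Deduction that grows with both severity and frequency (assumed product rule)."""
    if not (0.0 <= obs.severity <= 1.0 and 0.0 <= obs.frequency <= 1.0):
        raise ValueError("severity and frequency must lie in [0, 1]")
    return max_points * obs.severity * obs.frequency


# Hypothetical observations for illustration only.
rare_mild = ArtifactObservation("ghosting", severity=0.5, frequency=0.2)
frequent_severe = ArtifactObservation("ghosting", severity=0.9, frequency=0.6)

small_loss = point_deduction(rare_mild)
large_loss = point_deduction(frequent_severe)
```

With such a rule, an artifact that is both rare and mild barely affects the score, while one that is strong and appears in most images dominates the deduction.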

Bokeh

Google Pixel 6 Pro

80

Bokeh is tested in one dedicated mode, usually portrait or aperture mode, and analyzed by visually inspecting all the images captured in the lab and in natural conditions. The goal is to reproduce portrait photography comparable to one taken with a DSLR and a wide aperture. The main image quality attributes evaluated are depth estimation, artifacts, blur gradient, and the shape of the bokeh blur spotlights. Portrait image quality attributes (exposure, color, texture) are also taken into account.

These samples show the Google Pixel 6 Pro’s bokeh mode performance in an outdoor scene.

Video

Google Pixel 6 Pro

156

DXOMARK engineers capture and evaluate more than 2 hours of video in controlled lab environments and in natural low-light, indoor and outdoor scenes, using the front camera’s default settings. The evaluation consists of visually inspecting natural videos taken in various conditions and running objective measurements on videos of charts recorded in the lab under different conditions from 1 to 1,000+ lux and color temperatures from 2,300K to 6,500K.

Exposure

Google Pixel 6 Pro

87

Color

Google Pixel 6 Pro

90

Exposure tests evaluate the brightness of the face and the dynamic range, i.e., the ability to render visible details in both bright and dark areas of the image. Stability and temporal adaptation of the exposure are also analyzed. Image-quality color analysis looks at skin-tone rendering, white balance, color shading, and the stability of the white balance and its adaptation when the light is changing.

Video target exposure is generally accurate, even in low light. Dynamic range is fairly wide but not as wide as on the Apple iPhone 13 series. These video samples show the Google Pixel 6 Pro’s video exposure performance in outdoor conditions.

In video, the camera usually produces nice color and skin tones with a neutral white balance. These video samples show the Google Pixel 6 Pro’s video color performance in an outdoor scene.

Texture

Google Pixel 6 Pro

97

Texture tests analyze the level of detail and texture of the real-life videos as well as the videos of charts recorded in the lab. Natural video recordings are visually evaluated, with particular attention paid to the level of detail on the facial features. Objective measurements are performed on images of charts taken in various conditions from 1 to 1,000 lux. The chart used is the Dead Leaves chart.

These video samples show the Google Pixel 6 Pro’s video texture performance under 1,000 lux lighting conditions and at a subject distance of 55 cm.

Noise

Google Pixel 6 Pro

83

Noise tests analyze various attributes of noise such as intensity, chromaticity, grain, structure, and temporal aspects on real-life video recordings as well as videos of charts taken in the lab. Natural videos are visually evaluated, with particular attention paid to the noise on faces. Objective measurements are performed on the videos of charts recorded in various conditions from 1 to 1,000 lux. The chart used is the DXOMARK visual noise chart.

Noise is generally visible in Google Pixel 6 Pro video clips, especially in low light. In comparison, the Huawei P50 Pro is able to output images with lower levels of noise.

These video samples show the Google Pixel 6 Pro’s video noise performance in low light conditions.

Stabilization evaluation tests the ability of the device to stabilize footage thanks to software or hardware technologies such as OIS, EIS, or any other means. The evaluation looks at overall residual motion on the face and the background, smoothness, and jello artifacts during walking and panning use cases in various lighting conditions. The video below is an extract from one of the tested scenes.

Stabilization on the Google Pixel 6 Pro is generally effective, but some camera shake is still noticeable on faces when walking while recording. Overall the Pixel’s performance is quite similar to the P50 Pro. Both devices stabilize the background. In contrast, the iPhone 13 stabilizes the face and shows more camera shake. This sample clip shows the Google Pixel 6 Pro’s video stabilization in outdoor conditions.
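The distinction between stabilizing the face and stabilizing the background can be made concrete by measuring residual motion separately for each. The sketch below is an assumption-laden illustration rather than DXOMARK's procedure: it presumes that one feature point on the face and one on the background have already been tracked frame by frame, and the function name and the sample tracks are hypothetical.

```python
import math
from statistics import mean


def residual_motion(track):
    """RMS deviation (in pixels) of frame-to-frame steps from the average step.

    track: list of (x, y) positions of one tracked point, one per frame.
    Subtracting the average step removes steady, intended motion such as
    a constant pan, so only shake-like jitter remains.
    """
    if len(track) < 3:
        raise ValueError("need at least three frames")
    dx = [x1 - x0 for (x0, _), (x1, _) in zip(track, track[1:])]
    dy = [y1 - y0 for (_, y0), (_, y1) in zip(track, track[1:])]
    mx, my = mean(dx), mean(dy)
    squared = [(a - mx) ** 2 + (b - my) ** 2 for a, b in zip(dx, dy)]
    return math.sqrt(mean(squared))


# Hypothetical tracks for illustration only: the background moves in a
# steady pan, while the face also jitters vertically.
background_track = [(0.0, 0.0), (2.0, 0.0), (4.0, 0.0), (6.0, 0.0)]
face_track = [(0.0, 0.0), (2.0, 1.0), (4.0, -1.0), (6.0, 0.0)]

background_residual = residual_motion(background_track)
face_residual = residual_motion(face_track)
```

In this made-up example the background shows no residual motion while the face does, which corresponds to a device that stabilizes the background rather than the face.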

Artifacts

Google Pixel 6 Pro

92

Artifacts are evaluated with MTF and ringing measurements on the SFR chart in the lab as well as frame-rate measurements using the LED Universal Timer. Natural videos are visually evaluated by paying particular attention to artifacts such as quantization, hue shift, and face-rendering artifacts, among others. The more severe and the more frequent the artifact, the higher the point deduction from the score. The main artifacts and corresponding point loss are listed below.

Unnatural rendering artifacts are sometimes visible due to oversharpening. Hue shifts close to clipped areas can be visible as well. This sample clip was recorded in the lab at 1,000 lux.
