We at DxOMark started testing smartphone cameras back in 2012. It’s fair to say that smartphone cameras have come a long way in the five years that have passed since then. The smartphone has become the go-to imaging device for billions of hobby and occasional photographers around the globe, and thanks to drastically improved image quality and performance, it has left conventional standalone digital cameras far behind in terms of both sales and popularity.

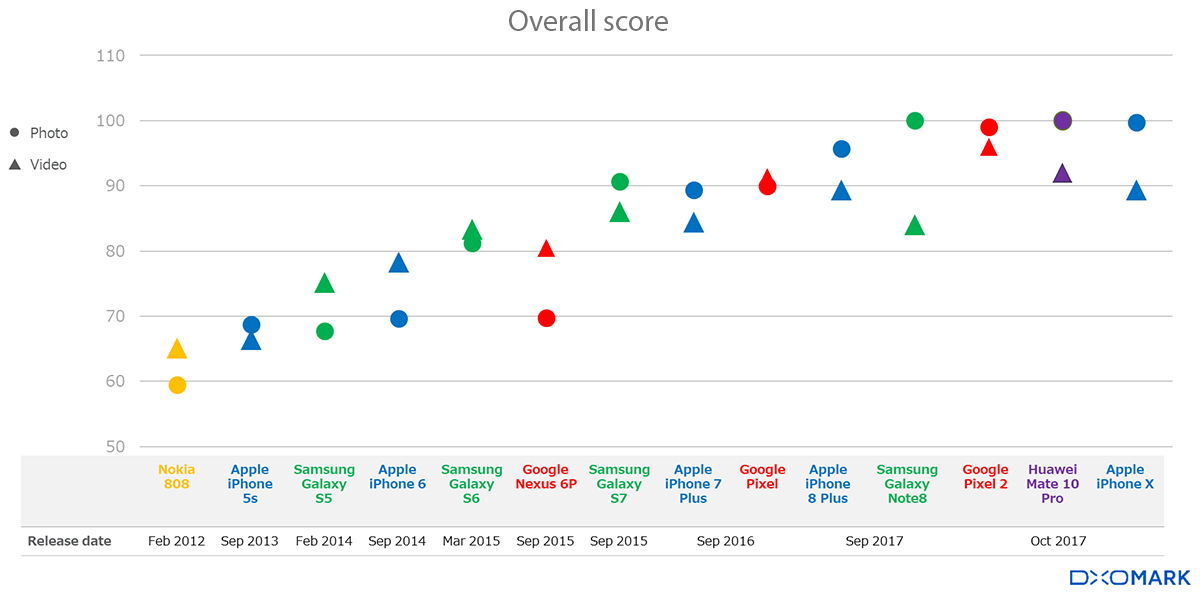

In the graph below, you can see the evolution of both Photo and Video scores from one of the first high-end devices we tested, the Nokia 808 PureView, to today’s flagship phones, such as the Google Pixel 2, the iPhone X, and the Samsung Galaxy Note 8. It’s not too much of a surprise that every generation from Apple, Samsung, and Google does better than the previous one, but with image quality reaching higher levels than ever, it has become increasingly difficult to make any noticeable improvements using “conventional” means.

Improvements in image sensor efficiency are only incremental these days, and while the makers of conventional digital cameras generally have the luxury of increasing the sensor size or the lens aperture (and therefore the lens dimensions) for better light capture and image quality, smartphone makers have to work within very tight space constraints. A thin device body and sleek design are key smartphone buying criteria for most consumers, but those criteria make camera design a lot more difficult. This has forced mobile device manufacturers to think outside the box and to develop solutions that improve image quality and camera performance without the need for larger camera modules. In this article, we look at how the introduction of these technologies — for example, dual-cameras, multi-frame-stacking, and innovative autofocus technologies — has had an impact on different aspects of smartphone camera performance, such as texture and noise, exposure, autofocus, zoom quality, and the appearance of bokeh.

Photo noise and texture: It’s all about capturing light

Although in our smartphone camera tests we give separate scores for noise and texture, it is important to look at both criteria together, as they are strongly linked. Image noise can easily be reduced in image processing, but there is a trade-off: strong noise reduction will also blur fine textures and reduce detail. The input signal-to-noise ratio (SNR) of a camera increases with sensor size, lens aperture, and exposure time. As mentioned above, increasing either of the former two is not an option due to space limitations in smartphones. Longer shutter speeds, on the other hand, increase the risk of camera shake and motion blur, ruling them out in many situations as well.

There’s more to image quality than sensor size and pixel count

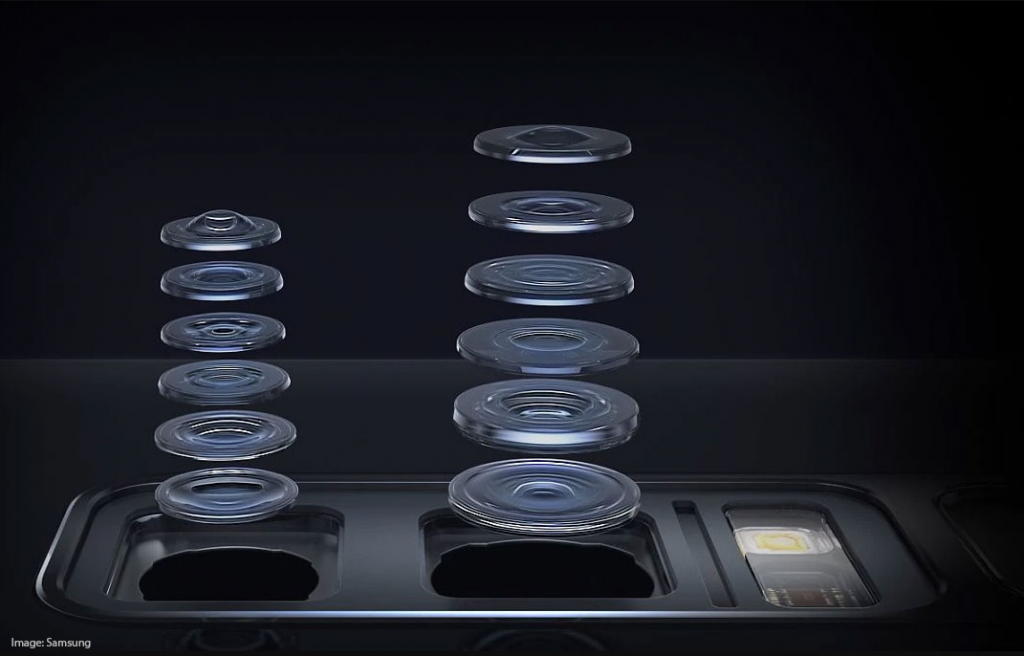

There are ways around those limitations, though. Image stabilization systems allow for longer shutter speeds with static scenes, and temporal noise reduction (TNR) combines image data from several frames, increasing the accumulated exposure time during capture. In addition, dual-cameras increase the (combined) sensor surface without a need for chunkier lenses. If a Bayer sensor is combined with a black-and-white sensor, as in the Huawei Mate 10 Pro, the gain is even greater. While a conventional dual-camera with two Bayer sensors simply captures twice as much light as a single-sensor camera, the Huawei, at least in theory, approximately quadruples the light capture of a single-sensor setup because of the lack of a color filter in its black-and-white sensor. This helps a lot in terms of texture preservation, but the downside is that capturing color information in low light can be more difficult.
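
The "approximately quadruples" figure can be checked with back-of-the-envelope arithmetic. The sketch below rests on one simplifying assumption of ours, not a measured value. It assumes that a Bayer color filter lets through roughly one third of the incoming light, because each photosite records only one of three color bands. It also assumes that an unfiltered black-and-white sensor of the same size records all of it. Real filter transmission varies from sensor to sensor.

```python
# Assumption (illustrative only): a Bayer filter passes ~1/3 of the light,
# while a monochrome sensor without a color filter passes ~100% of it.
BAYER_TRANSMISSION = 1 / 3
MONO_TRANSMISSION = 1.0


def relative_light_capture(sensors):
    """Total light captured, relative to one Bayer sensor of the same size."""
    transmission = {"bayer": BAYER_TRANSMISSION, "mono": MONO_TRANSMISSION}
    total = sum(transmission[kind] for kind in sensors)
    return total / BAYER_TRANSMISSION


print("single Bayer:       ", relative_light_capture(["bayer"]))
print("dual Bayer:         ", relative_light_capture(["bayer", "bayer"]))
print("Bayer + monochrome: ", relative_light_capture(["bayer", "mono"]))
```

Under this assumption, two Bayer sensors capture twice the light of one. A Bayer-plus-monochrome pair captures about four times as much, which matches the theoretical gain described above. The extra light from the monochrome sensor carries no color information, however.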

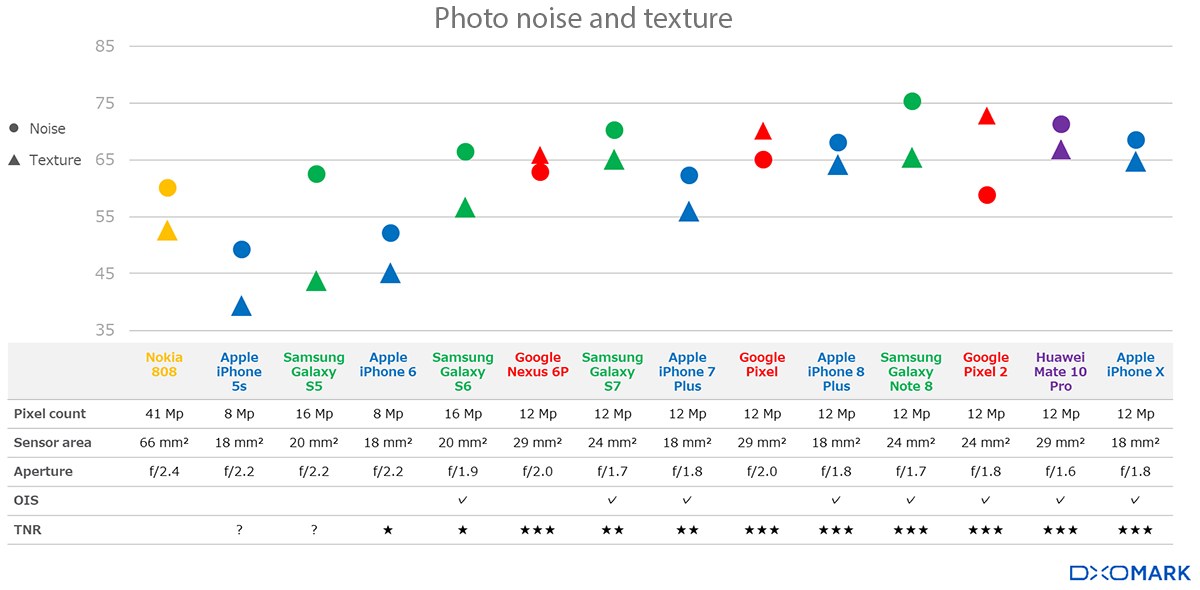

In the graph below, you can see the noise and texture scores of some key devices we have tested in the past five years. In the table underneath, we have listed the camera specifications. A lot of marketing material puts the focus on pixel count and sensor size. However, as you can see in the graph, neither of those two specifications is a reliable criterion for image quality, given that large apertures, optical image stabilization, and temporal noise reduction all make significant contributions as well.

The oldest device in our list, the Nokia 808, is a great example of how a large sensor helps achieve detailed textures and low levels of noise. The Nokia has an impressive 41 Mp sensor resolution, but in default mode, photos are downsampled to a 5 Mp output size. Even at 5 Mp, it achieves noticeably better low-light scores than the 8 Mp iPhone 5s and iPhone 6, and even the 16 Mp Samsung Galaxy S5. The reason is that the Nokia’s huge sensor captures a lot of light.

Light capture also depends on the aperture, and while sensor sizes on smartphones have remained relatively unchanged over the years, manufacturers have been able to increase the size of the lens apertures considerably. The Samsung Galaxy S5 from 2014 already had a slightly faster aperture than the Nokia 808 (f/2.2 vs. f/2.4), and the latest Samsung device on our list comes with a much faster f/1.7 aperture. The Huawei Mate 10 Pro even features f/1.6 apertures on both its lenses. The fast apertures partly offset the Nokia 808’s sensor-size advantage and, in combination with OIS and TNR, help the latest generation of devices achieve noticeably better results than the Nokia, despite much smaller image sensors.
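
Light per unit of sensor area scales with the inverse square of the f-number, so the aperture gains quoted above can be put into numbers. The sketch below ignores lens transmission and vignetting. It compares aperture only, not sensor size, which is why the Nokia 808 can still collect more light in total.

```python
def relative_light_per_area(f_number, reference_f_number):
    """Light reaching each unit of sensor area, relative to a reference aperture.

    Sensor illuminance scales with 1 / N**2, where N is the f-number.
    """
    return (reference_f_number / f_number) ** 2


# Apertures quoted in the text, compared against the Nokia 808's f/2.4.
for name, n in [("Galaxy S5", 2.2), ("Galaxy Note 8", 1.7), ("Mate 10 Pro", 1.6)]:
    print(f"{name} f/{n}: {relative_light_per_area(n, 2.4):.2f}x")
```

Going from f/2.4 to f/1.7 roughly doubles the light reaching each unit of sensor area, and f/1.6 delivers about 2.25 times as much.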

OIS allows for longer exposures and therefore higher input SNR by stabilizing the image and reducing the risk of blur through camera shake. TNR adds to this by merging several image frames to remove noise without sacrificing texture. The technology also helps to reduce motion blur, as the individual frames used in the stacking process are captured at faster shutter speeds than in a conventional single image.
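
A short simulation shows why stacking reduces noise. Averaging N aligned frames leaves the signal unchanged, while random noise falls by roughly the square root of N. The sketch makes three assumptions: a perfectly static scene, perfectly aligned frames, and purely random Gaussian noise. Real TNR implementations must also align frames and reject moving content, and both steps are left out here.

```python
import random
import statistics


def capture_frame(true_value, noise_sigma, n_pixels, rng):
    """Simulate one frame of a flat grey patch with Gaussian sensor noise."""
    return [true_value + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]


def stack_frames(frames):
    """Average aligned frames pixel by pixel (the core of temporal noise reduction)."""
    return [statistics.fmean(values) for values in zip(*frames)]


rng = random.Random(42)
single = capture_frame(100.0, 10.0, 10_000, rng)
burst = [capture_frame(100.0, 10.0, 10_000, rng) for _ in range(8)]
stacked = stack_frames(burst)

print(f"noise, single frame:  {statistics.pstdev(single):.2f}")
print(f"noise, 8-frame stack: {statistics.pstdev(stacked):.2f}")  # close to 10 / sqrt(8)
```

Eight frames cut the noise to roughly a third, without the spatial smoothing that would blur fine texture.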

Image processing is making a difference

The iPhone 6 is a good example of how intelligent software processing can improve image quality. Looking at the chart, you can see it has less noise and better texture than its predecessor, the iPhone 5s, despite using the same imaging hardware. However, the newer model comes with Apple’s A8 chipset, which features an improved image signal processor (ISP). At the time, Apple did not provide any detailed information, but it is very likely that the A8 ISP supports TNR. One year later, in 2015, Google set a new benchmark with the HDR+ mode on its Nexus 6P. HDR+ merges up to 10 frames at the Raw level, bypassing the embedded ISP.

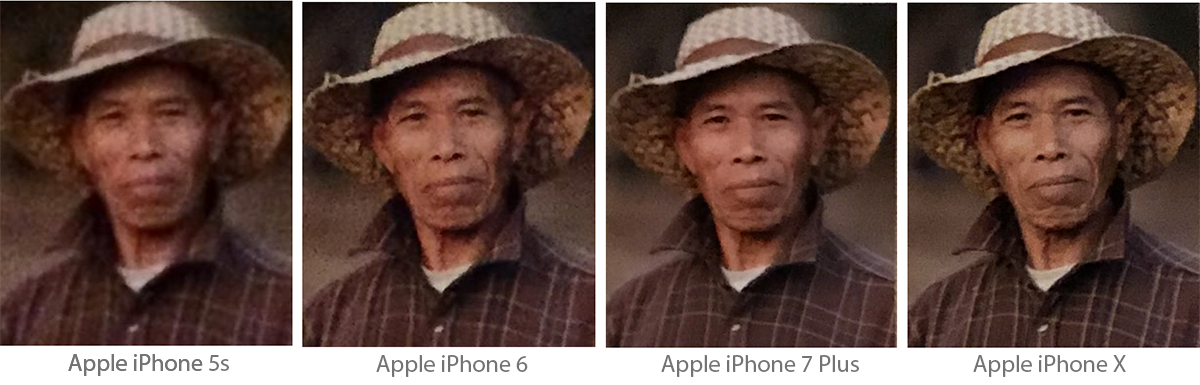

Let’s have a look at some samples. The crops below are from images taken at the low light level of 5 Lux. As you can see, detail increases very noticeably from the iPhone 5s to the iPhone 6. As mentioned above, sensor and lens components did not change between the two models, so the difference has to be due to advances in image processing. Surprisingly, there isn’t a huge difference between the iPhone 6 and 7 Plus in terms of detail, despite a higher pixel count, faster lens, and OIS for the latter. Apple tuned the 7 Plus’s image processing so that its images are less noisy than the 6’s, despite the higher 12 Mp pixel count. On the flip side, however, the additional detail captured by the higher-resolution sensor is blurred away by noise reduction. Thanks to improved TNR algorithms, the iPhone X makes another visible step forward, reducing noise levels slightly compared to the 7 Plus while delivering noticeably better fine detail.

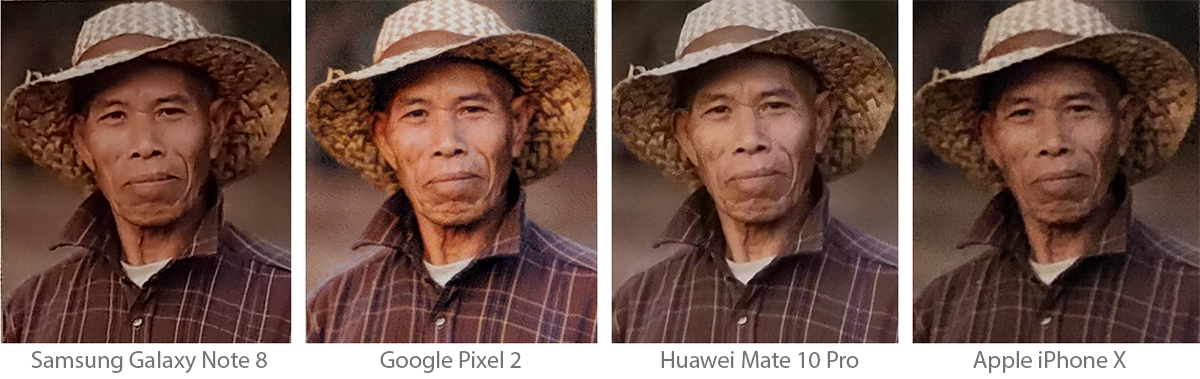

Below we can see a comparison of four current high-end smartphone cameras: the Samsung Galaxy Note 8, the Google Pixel 2, the Huawei Mate 10 Pro, and the Apple iPhone X. All four devices achieved high overall scores in our testing, but the manufacturers clearly apply different image processing approaches to achieve those results. Looking at the scores in the graph above, we can see that the noise and texture scores for the Samsung Galaxy Note 8 and the Google Pixel 2 are pretty much inverse. The overall performance is similar, but the two manufacturers cater to different tastes. Samsung uses a lot of noise reduction to produce a very clean image, but in the process also loses some fine textures. The Pixel 2 image shows noticeably more grain, but also better detail and more fine textures. The Samsung and the Google thus represent opposite ends of the spectrum, while the Huawei Mate 10 Pro and the Apple iPhone X both take a more balanced approach.

Photo exposure: Smartphone cameras are getting smarter

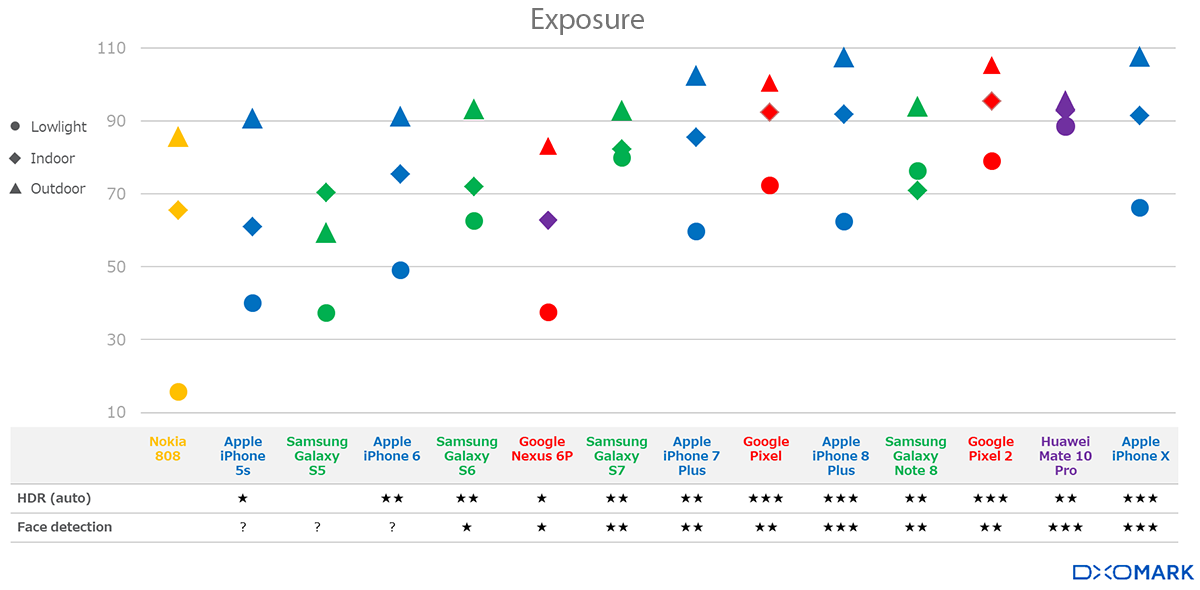

When evaluating exposure, we score image results in bright outdoor conditions, under typical indoor lighting, and in low light. In the score comparison graph below, we see that outdoor scores were already at a high level in 2012, but have increased further with the latest generation of devices. The increase is more substantial for indoor and low-light conditions, however.

Software and hardware are working together intelligently

These improvements are largely due to cameras getting smarter. The first cameras we tested did not have any smart features such as automatic detection of HDR scenes, scene analysis, or face detection, leading to exposure failures in real-world testing. Thanks to its unusually large sensor, the Nokia 808 has very good dynamic range, but was still outscored by the iPhone 5s because of the latter’s automatically-triggered multi-frame HDR feature. From the Galaxy S6 onwards, this has also been a standard feature on Samsung devices.
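
Multi-frame HDR captures the same scene at several exposure times and merges the results. The sketch below shows the basic idea for a single pixel under simplifying assumptions of our own. It assumes a linear sensor whose values are normalized to 0 to 1 and clip at 1.0, and perfectly aligned frames. It also uses a simple "hat" weighting that ignores clipped or near-black samples. It illustrates the principle only; it is not Apple's or Samsung's actual pipeline.

```python
def capture(radiance, exposure_time):
    """Linear sensor model: signal = radiance * time, clipped at full well (1.0)."""
    return min(radiance * exposure_time, 1.0)


def hat_weight(value):
    """Trust mid-tones most; give clipped or near-black samples zero weight."""
    if value >= 0.99 or value <= 0.01:
        return 0.0
    return 1.0 - abs(2.0 * value - 1.0)


def merge_hdr(samples):
    """Merge (pixel_value, exposure_time) pairs into one relative radiance estimate."""
    weighted_sum = 0.0
    weight_total = 0.0
    for value, exposure_time in samples:
        w = hat_weight(value)
        weighted_sum += w * (value / exposure_time)
        weight_total += w
    if weight_total == 0.0:
        raise ValueError("every sample is clipped or too dark")
    return weighted_sum / weight_total


exposures = [1.0, 0.25, 0.0625]  # hypothetical three-frame bracket, 2 stops apart
for radiance in (0.5, 3.0, 12.0):  # shadow, mid-tone, bright sky
    samples = [(capture(radiance, t), t) for t in exposures]
    print(radiance, [round(v, 3) for v, _ in samples], round(merge_hdr(samples), 3))
```

For the brightest value, the two longer exposures clip at 1.0. The shortest frame still records 0.75, however, so the merge recovers the relative radiance of 12 that a single long exposure would have lost.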

Manufacturers have been able to further refine HDR modes and scene analysis in the last couple of years, resulting in improved handling of difficult situations such as backlit portraits or high-contrast scenes. In addition, exposures in very low light have benefited from improved SNR: in very dim conditions it is common practice to underexpose in order to hide noise, and cleaner images allow for brighter exposures. That said, with its large sensor, the Nokia 808 could probably have done better in this respect, but its engineers simply transposed the exposure strategy from previous Nokia phones with smaller sensors, making the 808 a good example of a smartphone camera that could have benefited from better tuning.

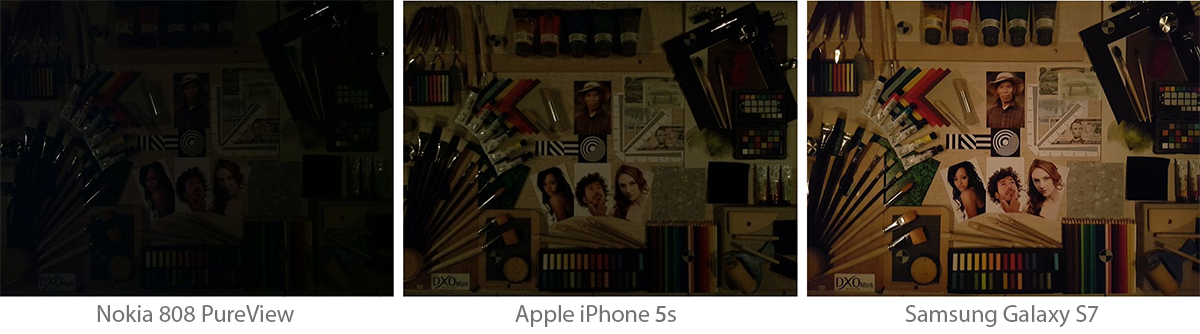

Let’s see how the numbers in the graph above translate into real-life image results. The samples below were captured at an extremely low light level of 1 Lux. As you can see, the Nokia 808 produces a very dark exposure, despite a larger sensor and better light capture than the comparison devices. As explained above, this result is largely caused by sub-optimal tuning of the Nokia’s image processor. The iPhone 5s captures a brighter but still noticeably underexposed image, partly to keep image noise at bay, and partly because it is Apple’s image processing philosophy to slightly underexpose dark scenes in order to maintain the atmosphere of the scene. The Samsung Galaxy S7 is the most modern device in this comparison, and thanks to better tuning and noise reduction than the Nokia and the iPhone 5s, it is capable of achieving a brighter and more usable exposure.

Thanks to better denoising, current flagship models such as the Huawei Mate 10 Pro and the Google Pixel 2 achieve noticeably brighter exposures than older smartphones. Again, Apple’s tuning causes the iPhone X to capture a slightly darker exposure than the competition in low light.

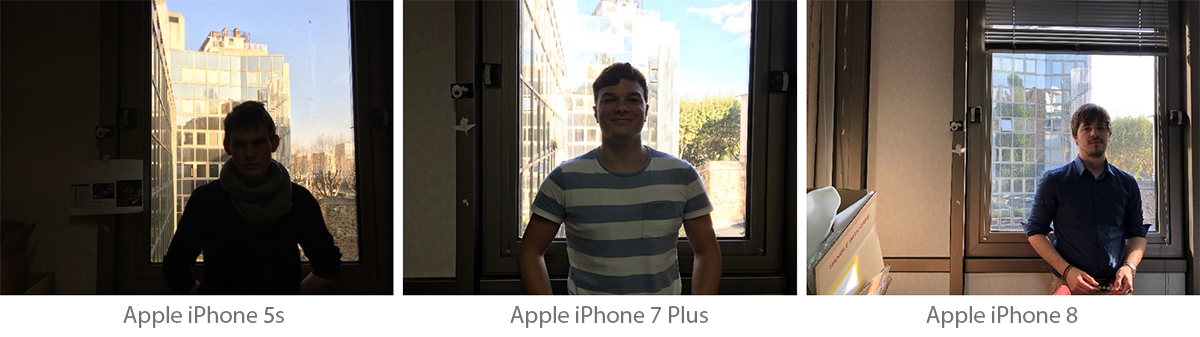

Below you can see real-life samples of a high-contrast scene. The Auto HDR feature first appeared on smartphone cameras back in 2010, but early versions weren’t very good. The iPhone 5s already came with HDR (without it, we would not see any blue sky in the sample below at all), but the sky is more cyan than blue, and the brightest areas are still clipped. Looking at the results from newer iPhone models, we can clearly see how the technology has evolved and been refined. The iPhone 7 Plus is capable of preserving the sky perfectly, and the iPhone 8 Plus additionally lifts the shadows to reveal some detail in the very dark foreground area.

A similar evolution can be observed on the series of backlit portraits below. Without any face detection, the iPhone 5s exposes for the background, rendering the portrait subject very dark. The 7 Plus captures noticeably better detail on the subject, but produces some overexposure on the brighter background. The newest device in this comparison, the iPhone 8 Plus, comes with the most refined HDR and face detection features, resulting in the most balanced exposure — one that shows good detail on both the portrait subject and the background.

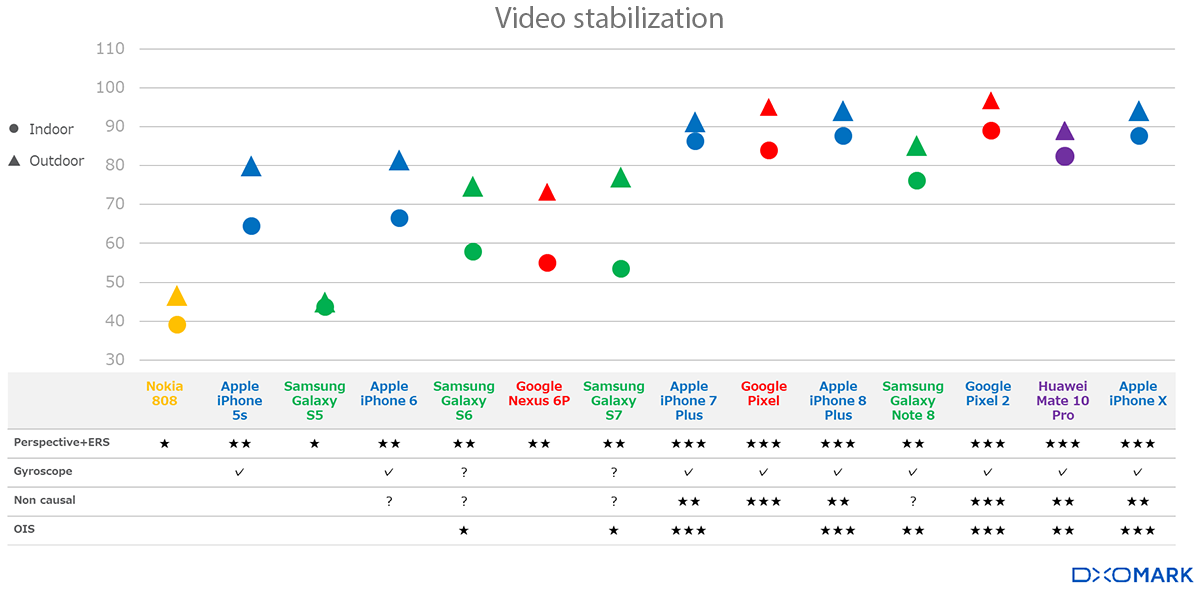

Video stabilization: Making things work together

Video stabilization is a feature that requires several smartphone components and functions to work together seamlessly for best results. On older devices such as the Nokia 808 or Samsung Galaxy S5, the feature was designed to counteract hand shake only and was based purely on image processing. The algorithms analyzed the image content to detect motion, but there was a risk that the camera would lock onto a moving subject rather than onto a static element in the scene.

Apple was the first manufacturer to enhance the feature by integrating gyroscope data. Synchronizing gyroscope and image sensor data is technically very challenging, but Apple was able to make things work because it had full control over all hardware and software components on its devices. By contrast, Samsung, for example, also had gyroscopes in its older devices, but was unable to use them in an efficient way for video stabilization, probably due to limitations in the Android operating system. All current high-end phones make use of gyroscope data for video stabilization.

Buffering and OIS are taking video stabilization to the next level

The next step of evolution materialized on the software side. A technology called “non-causal stabilization” uses a video buffer of approximately one second to let the stabilization system anticipate camera motion in the future. This function is combined with more significant cropping and can yield impressive steady-cam-like results, even when the photographer is walking or riding a bicycle. This kind of advanced stabilization requires the gyroscope to provide very reliable data and probably would not have been possible with the components available in 2012 or 2013.
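
A toy example shows the benefit of buffering. A stabilizer smooths the camera path (for instance, the angle integrated from gyroscope readings) and crops each frame to follow the smoothed path. A causal filter may only average past frames, so during an intentional pan its smoothed path lags behind. A non-causal filter averages frames on both sides of the current one, which requires a buffer. The frame rate, window size, and pan speed below are hypothetical values chosen for illustration.

```python
FPS = 30
HALF_WINDOW = FPS // 2  # 15 frames either side: about one second of video in total


def causal_smooth(path, window):
    """Average each sample with the previous ones only (no look-ahead)."""
    smoothed = []
    for i in range(len(path)):
        chunk = path[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def non_causal_smooth(path, half_window):
    """Centered average over past and future samples; needs a frame buffer."""
    smoothed = []
    for i in range(len(path)):
        chunk = path[max(0, i - half_window): i + half_window + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# Hypothetical steady pan: the camera turns 0.5 degrees per frame.
pan = [0.5 * i for i in range(120)]
frame = 60
print("true angle:         ", pan[frame])  # 30.0
print("causal estimate:    ", causal_smooth(pan, 2 * HALF_WINDOW + 1)[frame])  # 22.5
print("non-causal estimate:", non_causal_smooth(pan, HALF_WINDOW)[frame])  # 30.0
```

Both filters use a 31-frame window and therefore damp fast hand shake to the same degree. During the pan, however, the centered average stays on the true angle, while the causal average trails it by 7.5 degrees, a gap the crop margin would have to absorb.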

The latest devices also add OIS to the mix. This helps reduce frame-to-frame differences in sharpness, which can adversely affect the final result, especially when frame-to-frame motion is perfectly compensated. However, it also adds another layer of complexity. Samsung made a first attempt with its Galaxy S6 and S7 devices but didn’t quite get things right, ending up with results that were worse than those of the OIS-less iPhones 6 and 6s. Since then, the Korean manufacturer has been able to make considerable improvements with the Galaxy Note 8.

Apple first managed to integrate OIS into video stabilization efficiently with the iPhone 7/7 Plus generation.

You can see below how the scores and numbers above translate into real-life performance. As the oldest device in our comparison, the Nokia 808 PureView’s video stabilization has to make do without any advanced technologies, resulting in very noticeable jerky motions and distortions when walking during video recording.

The Apple iPhone 5s was the first smartphone to make use of gyroscope data for video stabilization purposes. Thanks to the integration of this additional sensor, the sample clip is noticeably smoother and more stable than the Nokia 808’s.

After the Galaxy S6, the Galaxy S7 Edge was the Korean manufacturer’s second device to use the camera’s optical image stabilization system for video stabilization. Despite this additional system, however, the S7 Edge could not achieve a higher score in our stabilization test than the iPhone 5s; the integration of the different hardware and software components still needed more fine-tuning.

The Google Pixel’s stabilization system processes each frame immediately, without buffering, and achieved results that were noticeably superior to the competition at the time, with very smooth, almost steady-cam-like footage. On the new Pixel 2, Google has switched to a buffer-based approach in addition to using the gyroscope, resulting in even better performance.

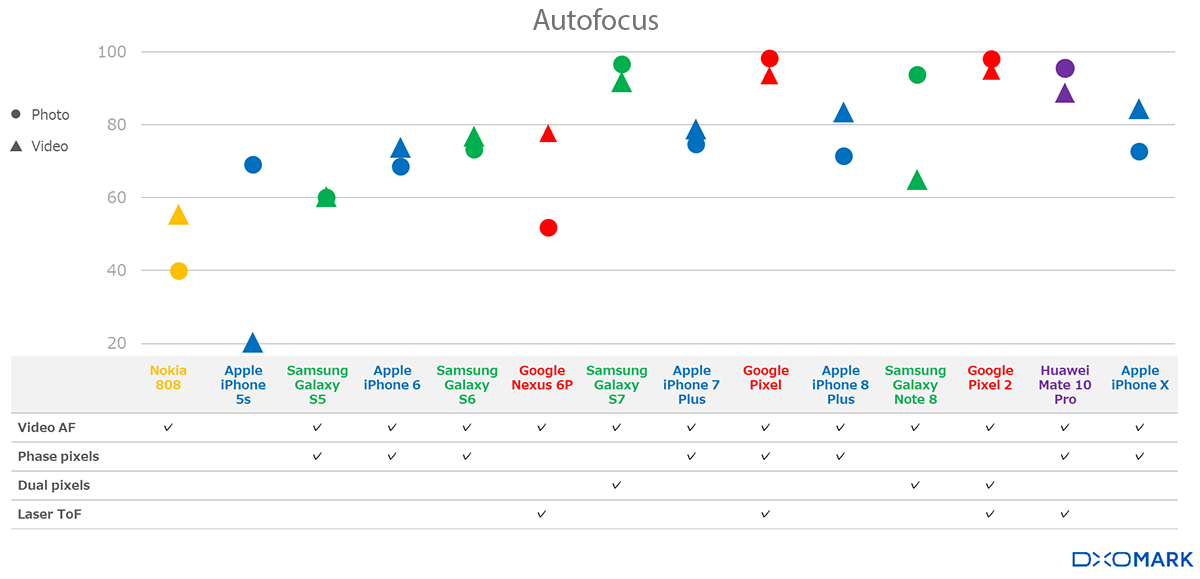

Autofocus: More sensors for better performance

Just like video stabilization, autofocus is another camera sub-system that requires several hardware and software components to work together in perfect harmony for optimal results. At DxOMark, we score the performance of both photo and video autofocus. Although they rely on the same technologies, the two types of autofocus have to work in different ways in order to produce good results. In addition to being accurate, photo autofocus should be fast. Video autofocus, on the other hand, should be stable and smooth, as any focus pumping or abrupt changes in focus will be visible in the final video.

Contrast detection comes with limitations

Before 2014, contrast-based autofocus was state of the art. This autofocus method comes with several disadvantages, however. It works by observing how image contrast changes as the lens elements move, but the system does not know when maximum contrast has been reached: the lens has to be moved beyond the best position and back again to confirm focus. Even worse, when the camera starts to focus, it does not know whether to increase or decrease the focusing distance. Starting at a one-meter focus distance, it might focus toward infinity first without finding maximum contrast, only then switching direction and eventually finding the sharpness peak at 50 cm. This is particularly problematic for video, and it is the reason Apple decided it was best for the iPhone 5s not to focus at all during video recording. Instead, the device locks focus at the start of recording, resulting in a very low video autofocus score in our testing.
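
The contrast-detection search described above can be sketched as a simple hill climb. The contrast curve, the lens positions (arbitrary units, with the sharpest position at 30), and the step size are all hypothetical. Real implementations use smarter step-size control, but the two weaknesses named above remain: not knowing which way to go, and having to overshoot the peak to find it.

```python
def contrast_at(position, peak=30, width=10.0):
    """Hypothetical sharpness curve: contrast is highest at the in-focus position."""
    return 1.0 / (1.0 + ((position - peak) / width) ** 2)


def contrast_af(start, direction=+1, step=5, lo=0, hi=100):
    """Hill-climbing contrast-detection AF.

    The camera only sees contrast values, so it must guess a direction,
    reverse if contrast drops on the first move, and step past the peak
    before it knows the peak has been reached.
    Returns (final_position, list_of_lens_positions_visited).
    """
    visited = [start]
    best_pos, best_val = start, contrast_at(start)
    pos, reversed_once = start, False
    while True:
        nxt = pos + direction * step
        first_move = best_pos == start and not reversed_once
        if not lo <= nxt <= hi:
            if first_move:  # hit an end stop straight away: try the other way
                direction, reversed_once = -direction, True
                continue
            break
        visited.append(nxt)
        val = contrast_at(nxt)
        if val > best_val:
            best_pos, best_val, pos = nxt, val, nxt
        elif first_move:
            # Contrast fell on the first move: wrong direction, turn around.
            direction, reversed_once, pos = -direction, True, start
        else:
            break  # contrast fell after rising: the peak has been passed
    visited.append(best_pos)  # drive the lens back to the sharpest position seen
    return best_pos, visited


final, visited = contrast_af(start=50, direction=+1)
print("in focus at", final)  # 30
print("lens positions:", visited)  # [50, 55, 45, 40, 35, 30, 25, 30]
```

Starting at position 50 and guessing the wrong direction, the lens first moves to 55 and then turns around. It climbs to the peak at 30, overshoots to 25, and only then returns to 30.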

In 2014, sensor manufacturers added phase detection (PDAF) to the mix by integrating dedicated phase-detection pixels directly into the pixel matrix of the image sensor. The Samsung Galaxy S5 was the first smartphone to feature such a sensor, followed by the iPhone 6. Apple’s implementation performed significantly better, with the iPhone 6 setting a new autofocus benchmark. PDAF does not work well in low-light conditions, however, and the iPhone 6’s low-light test results were actually worse than those of its predecessor, the 5s. A year later, Apple took a more pragmatic approach with the iPhone 6s and switched to contrast AF in low light, which helped improve its autofocus score. Samsung also improved things noticeably with a better PDAF integration in the Galaxy S6.
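
Phase detection avoids the blind search of contrast AF. Put simply, phase-detection pixels view the scene through opposite sides of the lens, so an out-of-focus feature appears shifted between the two views. The sign of the shift tells the camera which way to move the lens, and its size tells it roughly how far. The sketch below estimates that shift between two one-dimensional signals. It tests candidate offsets and keeps the one with the smallest mean absolute difference. The blurred-feature profiles are synthetic, and the sketch does not model how a given shift maps to lens movement, since that depends on the sensor and lens.

```python
import math


def blur_profile(center, n=40, width=3.0):
    """Synthetic 1-D brightness profile of a blurred feature centred at `center`."""
    return [math.exp(-((i - center) / width) ** 2) for i in range(n)]


def estimate_phase_shift(left, right, max_shift=8):
    """Shift (in pixels) that best aligns the `right` view with the `left` view."""
    n = len(left)
    best_shift, best_cost = 0, float("inf")
    for shift in range(-max_shift, max_shift + 1):
        diffs = [abs(left[i] - right[i + shift]) for i in range(n) if 0 <= i + shift < n]
        cost = sum(diffs) / len(diffs)  # mean absolute difference over the overlap
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return best_shift


# Defocused one way, defocused the other way, and in focus.
print(estimate_phase_shift(blur_profile(18), blur_profile(22)))  # 4
print(estimate_phase_shift(blur_profile(22), blur_profile(18)))  # -4
print(estimate_phase_shift(blur_profile(20), blur_profile(20)))  # 0
```

Positive and negative results correspond to the two directions of defocus, and zero means the subject is in focus. A single measurement can therefore send the lens straight toward the right position. On a dual-pixel sensor, every pixel can contribute such a pair of views, rather than a sparse set of dedicated pixels.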

Dual-pixel sensors and time-of-flight lasers offer improved AF speed

Some newer devices use even more advanced systems to improve autofocus performance. The Samsung Galaxy S7 and the Google Pixel, for example, are among the best we’ve tested and outperform all current iPhone models. They manage to do so in different ways, though. The Galaxy S7 uses so-called dual pixels. All 12 million pixels on its image sensor act as phase pixels, making the system much less susceptible to noise and more suitable for low-light conditions. This technology first appeared in 2013 in Canon DSLRs. Samsung developed its dual-pixel smartphone sensor in-house, gaining a real advantage over the competition in 2016.

Google did not have access to a dual-pixel sensor when it launched the original Pixel in 2016. Instead, its engineers used a laser time-of-flight sensor to estimate subject distance, as they had already done on the Nexus 6P. The technology is very good at short distances and in low light, but struggles in bright conditions and fails at long distances. However, by combining a time-of-flight sensor with phase-detection, Google was able to obtain impressive results. The Pixel 2 adds a dual-pixel sensor, elevating autofocus performance to an even higher level. Apple’s iPhones, on the other hand, still rely on very few PDAF sensors, as dual pixels are not currently available for the small sensor sizes in iPhones, resulting in lower autofocus scores for both photo and video.
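
Time-of-flight ranging comes down to simple arithmetic. The sensor emits a light pulse and times its return, and because the light travels to the subject and back, the distance is half the round trip multiplied by the speed of light. The sketch below uses this simplified direct time-of-flight model. Actual phone modules may use more elaborate measurement schemes, which we do not model here.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_distance(round_trip_seconds):
    """Distance to the subject from a light pulse's round-trip time."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def round_trip_time(distance_m):
    """Round-trip time of a light pulse for a subject at `distance_m` metres."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


for d in (0.3, 1.0, 5.0):
    t = round_trip_time(d)
    print(f"{d} m -> {t * 1e9:.2f} ns round trip -> {tof_distance(t):.2f} m")
```

For a subject one meter away, the pulse returns after only about 6.7 nanoseconds, so the sensor has to resolve very short time intervals. The returning signal also weakens as distance grows.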

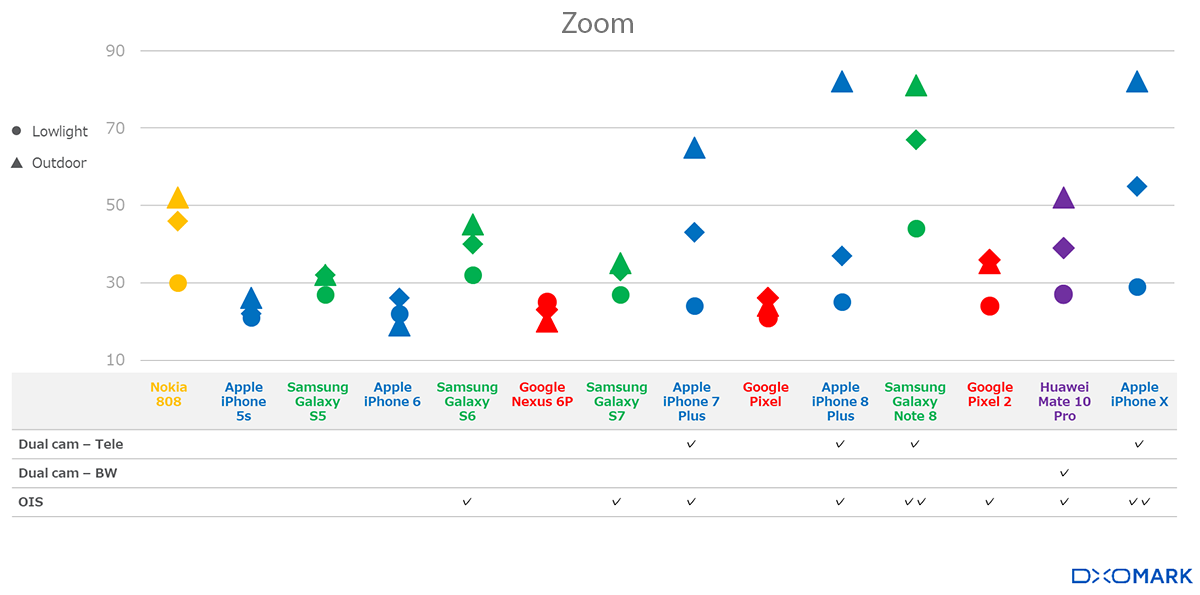

Zoom: Dual-cameras make the difference

Zooming is one of the very few areas where smartphones still have a noticeable disadvantage compared to conventional digital compact cameras. As the thin bodies of high-end smartphones do not offer enough space for the implementation of conventional telescope-type zoom lenses, smartphone cameras have been limited to digital zooming for a long time. However, usable results are possible even without any advanced zooming methods. The Nokia 808 is still one of the best-performing zoom cameras in indoor conditions, thanks to its large sensor and high 41 Mp pixel count. The Samsung Galaxy S6 is also able to achieve decent results, especially in lower light, thanks to a 16 Mp sensor and OIS.
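
Digital zoom is a crop. Zooming by a factor z keeps 1/z of the frame's width and height, and therefore only 1/z² of its pixels. The short sketch below applies this to the nominal pixel counts quoted above to show why a high-resolution sensor helps.

```python
def pixels_after_digital_zoom(megapixels, zoom):
    """Megapixels remaining after cropping the frame by a digital zoom factor."""
    if zoom < 1:
        raise ValueError("zoom factor must be at least 1")
    return megapixels / zoom ** 2


# Nominal sensor resolutions quoted in the text.
for name, mp in [("Nokia 808", 41), ("Galaxy S6", 16)]:
    for zoom in (2, 3):
        print(f"{name} at {zoom}x: {pixels_after_digital_zoom(mp, zoom):.1f} Mp")
```

At 2x, the Nokia 808 still has roughly 10 Mp to work with, more than its default 5 Mp output. A 16 Mp sensor, by contrast, is down to 4 Mp and has to upscale to reach its full output size.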

The introduction of dual-cameras with secondary tele-lenses made a real difference to smartphone zoom performance, but the technology is still new and evolving. The iPhone 7 Plus was Apple’s first dual-camera phone and achieves good results in bright light. In low light and indoors, however, the improvement over previous iPhones, and especially over the single-lens iPhone 7, is marginal: Apple deems the tele-lens’s image quality in such conditions not good enough, so the 7 Plus switches to digitally zooming the main camera instead. A more detailed analysis of the differences between the iPhone 7 and 7 Plus is available in a separate article.

Dual-cameras can boost zoom performance in different ways

The iPhone 8 Plus improved only slightly on the 7 Plus’s zoom performance in lower light, but thanks to a stabilized tele-lens, the current flagship iPhone X has been able to make a real step forward. Using similar technologies, Samsung’s Galaxy Note 8 is currently our top-performing device for zoom.

Chinese manufacturer Huawei is taking an entirely different approach from its rivals Apple and Samsung: instead of a longer secondary lens, it uses image data from the Mate 10 Pro’s secondary 20 Mp black-and-white sensor for zooming. The end results cannot quite match those of the latest iPhones or of the Note 8, but are significantly better than those of a single-lens device such as the Google Pixel 2.

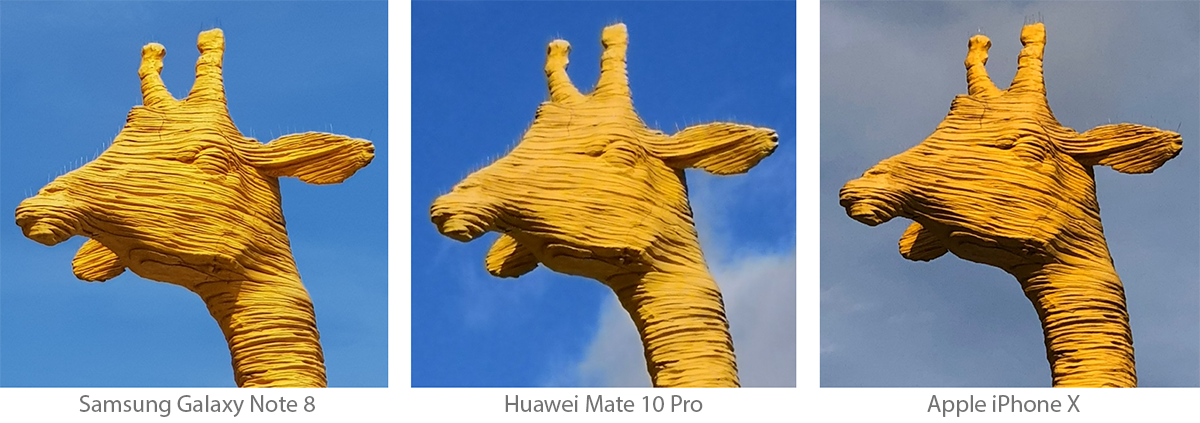

In the samples below, we can see how the scores from our chart translate into real-life image results. The Samsung Galaxy Note 8 is currently our best performer in all light conditions, although the Apple iPhone X is not far off. Using a secondary high-resolution black-and-white sensor instead of a tele-lens with a longer focal length, the Huawei Mate 10 Pro cannot quite match the Apple and Samsung, but is noticeably ahead of single-lens devices such as the Google Pixel 2 and older smartphones.

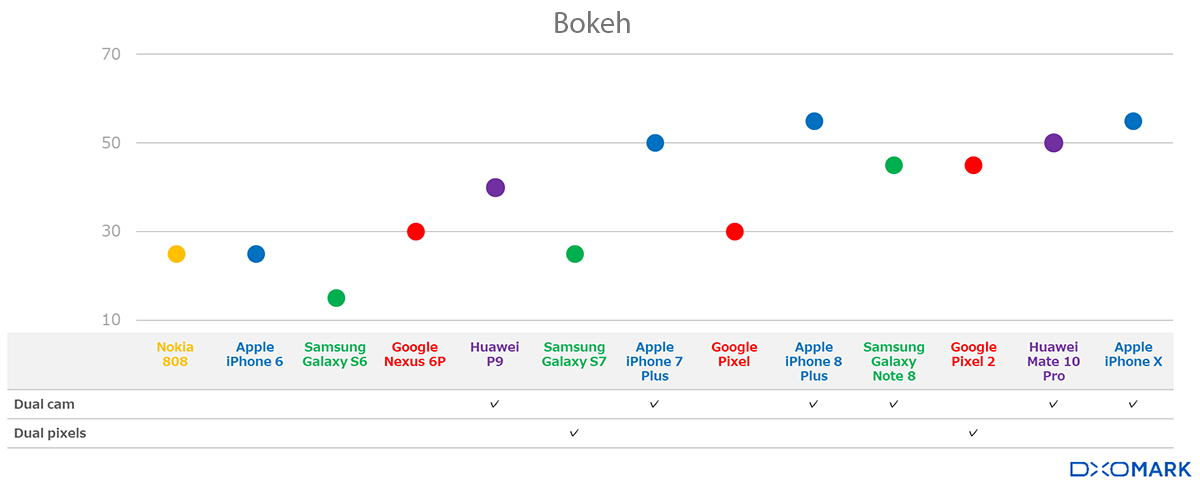

Bokeh

Due to small image sensors and the laws of physics, conventional smartphone cameras have a very large depth of field. To simulate the shallow depth of field of a fast lens on a DSLR, manufacturers have developed “fake bokeh” modes. Some call it portrait mode, others call it depth mode, but all of them are designed to do the same thing: capture foreground subjects sharply while smoothly blurring the background.
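
The standard thin-lens depth-of-field formulas make the physics concrete. The sketch compares a small-sensor phone camera with a full-frame camera that has the same field of view, aperture, and subject distance. The values are our assumptions: a crop factor of about 7 for the phone sensor, a 28 mm-equivalent field of view, and f/1.8 on both cameras. The full-frame circle of confusion is 0.03 mm, scaled down by the crop factor for the phone.

```python
import math


def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance H = f^2 / (N * c) + f."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def dof_limits_mm(focal_mm, f_number, coc_mm, subject_mm):
    """Near and far limits of acceptable sharpness (thin-lens approximation)."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        return near, math.inf
    far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far


CROP = 7.0     # assumed crop factor of a small smartphone sensor
FF_COC = 0.03  # common full-frame circle of confusion, in mm

cameras = {
    "smartphone 4 mm f/1.8": (28.0 / CROP, 1.8, FF_COC / CROP),
    "full frame 28 mm f/1.8": (28.0, 1.8, FF_COC),
}
for name, (f, n, c) in cameras.items():
    near, far = dof_limits_mm(f, n, c, subject_mm=1500)
    print(f"{name}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

With these assumed values, focusing at 1.5 m keeps the phone image acceptably sharp from roughly 0.9 m to 5.4 m. The full-frame camera's zone of sharpness spans only about 1.4 m to 1.7 m, which is why smartphones have to simulate background blur in software.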

The oldest devices in our comparison graph below — the Nokia 808 and the iPhone 5s — did not have a bokeh mode at all. Usually a device without such a function achieves a baseline score of 25 points in our bokeh test. The Samsung Galaxy S6, one of the first big-brand devices to feature a bokeh mode, actually scored lower than that, simply because its purely software-based focus-stacking implementation was so bad that most users would prefer a standard image.

The Google Nexus 6P required the user to move the device slightly upwards during capture in order to create a depth map of the scene. The results were an improvement over the S6, but still displayed many subject-isolation artifacts. The Huawei P9 is the first device in our list to employ dual-camera technology for bokeh simulation. The slightly offset positions of its two lenses allow for better depth estimation than the earlier approaches.
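
Dual-camera depth estimation relies on stereo disparity. A point seen by two horizontally offset lenses appears at slightly different positions in the two images, and the offset shrinks as distance grows. Under the pinhole model, depth Z equals the focal length f (in pixels) times the baseline B, divided by the disparity d. The focal length and the 1 cm baseline below are hypothetical values, not the specifications of any phone mentioned here.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Pinhole stereo: depth Z = f * B / d (infinite for zero disparity)."""
    if disparity_px <= 0:
        return float("inf")
    return focal_px * baseline_m / disparity_px


def disparity_for_depth(focal_px, baseline_m, depth_m):
    """Inverse relation: disparity d = f * B / Z."""
    return focal_px * baseline_m / depth_m


FOCAL_PX = 3000.0  # hypothetical focal length expressed in pixels
BASELINE_M = 0.01  # hypothetical 1 cm spacing between the two lenses

for depth in (0.5, 1.0, 2.0, 5.0, 6.0):
    print(f"{depth} m -> {disparity_for_depth(FOCAL_PX, BASELINE_M, depth):.1f} px")
```

With these numbers, a subject at 1 m shifts by 30 pixels, but backgrounds at 5 m and 6 m differ by just one pixel of disparity. Small matching errors therefore translate into large depth errors at a distance. The Nexus 6P's upward sweep can be thought of as creating such a baseline over time.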

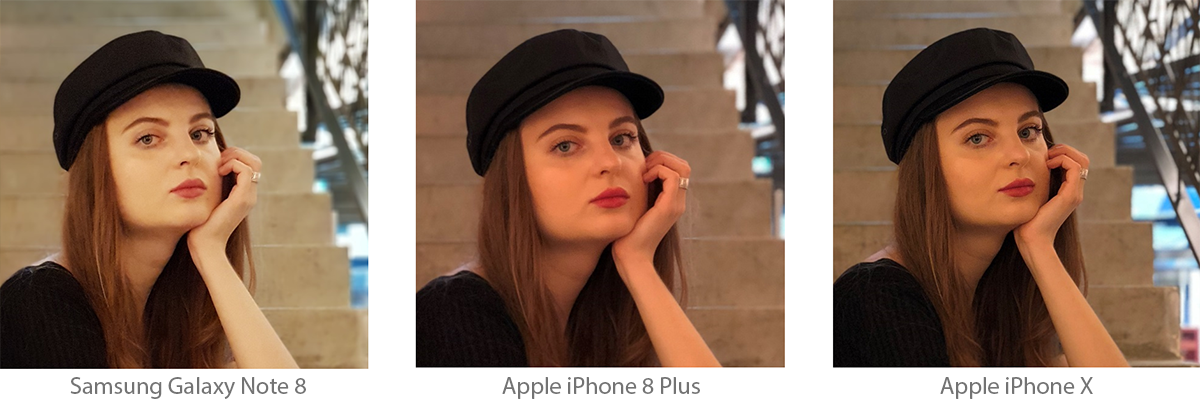

Out of the current crop of high-end devices, the Apple iPhone 8 Plus and iPhone X produce the best results with their portrait mode, displaying good subject isolation and smooth natural blur and transition. The X is just a touch better, with lower noise levels in indoor and low-light conditions, which is especially noticeable on skin tones. The Google Pixel 2 and Samsung Galaxy Note 8 follow closely behind with very similar scores, but those scores are generated in different ways. The best images out of the Note 8 produce a more natural effect than the Pixel 2, with better subject isolation and fewer artifacts. However, the Note 8 has some trouble with repeatability, and the bokeh mode does not always kick in when it should. In contrast to the dual-camera Note 8, the Pixel 2 uses the dual pixels of its sensor in combination with machine learning-based algorithms for depth estimation. The image results are not quite as good as the Note 8’s best images, but its portrait mode is consistent.

Improved depth estimation leads to better bokeh effects

Thanks to its dual-camera setup, the Huawei Mate 10 Pro is also capable of creating good subject isolation and pleasant blur. However, because its secondary camera has the same wide-angle lens as the main camera, it cannot quite capture the same portrait perspective as the Apple devices and the Note 8 (each equipped with an approximately 50mm-equivalent secondary lens). Instead, the Huawei’s depth mode shoots images with a field of view closer to a 35mm equivalent, which is not really a typical portrait focal length.

Let’s have a look at a few samples again. You can see below the progress that bokeh-mode has made since its first appearance on mobile devices. The Nexus 6P captures a series of images while the camera is moved slightly upwards. The resulting depth-maps tend to show a range of errors (indicated by the red arrows). The same is true for the Samsung Galaxy S7, which uses a focus-stacking approach — taking several shots at different focus settings in quick succession and then merging the results. The Huawei P9 was one of the earlier dual-camera phones to offer bokeh mode. It’s better at estimating the depth of a scene than the Google and the Samsung, but still far from perfect.
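
Focus stacking derives depth from sharpness: each pixel is assigned to the frame, and hence the focus distance, in which it looks sharpest. The one-dimensional sketch below uses a simple second-difference sharpness measure and two synthetic frames. One frame is focused on a textured foreground and the other on a textured background. The data and the sharpness measure are our own illustrative choices.

```python
def local_sharpness(row, i):
    """Absolute second difference: large where fine detail is in focus."""
    left = row[max(i - 1, 0)]
    right = row[min(i + 1, len(row) - 1)]
    return abs(left - 2 * row[i] + right)


def depth_from_focus(stack):
    """Index of the sharpest frame for every pixel (0 = first focus setting)."""
    width = len(stack[0])
    return [
        max(range(len(stack)), key=lambda k: local_sharpness(stack[k], i))
        for i in range(width)
    ]


# Two synthetic 1-D frames: textured foreground (pixels 0-3) and background (4-7).
near_focus = [0, 10, 0, 10, 5, 5, 5, 5]  # foreground sharp, background blurred flat
far_focus = [5, 5, 5, 5, 0, 10, 0, 10]   # foreground blurred flat, background sharp
print(depth_from_focus([near_focus, far_focus]))  # [0, 0, 0, 0, 1, 1, 1, 1]
```

The foreground pixels are assigned to the near-focus frame and the background pixels to the far-focus frame. Flat, untextured areas look equally sharp in every frame, however, so their depth is essentially a guess. This is one plausible source of the errors visible in early depth maps.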

Current dual-cam-equipped high-end phones are much better at creating depth-maps than previous generations. In the samples below, you can see that all the objects in the focal plane are sharp, while even small enclosed background areas are correctly blurred on the Samsung Galaxy Note 8, the Huawei Mate 10 Pro, and the Apple iPhone X.

In the samples below, we can see that the same phones are capable of producing very good results in real-world bright light conditions. Images show good subject isolation, smooth blur transitions, and a pleasant, natural amount of background blur. However, there is still room for improvement in low light. In dim conditions, all images show high levels of luminance noise, which is especially noticeable on skin tones in portraits.

Outlook: Greater integration for better images

Looking at the past five years of smartphone camera development, we can see that camera hardware and image processing are evolving alongside each other, and at a much faster pace than in the “traditional” camera sector. DSLRs and mirrorless system cameras are still clearly ahead in some areas, for example auto exposure, but in terms of image processing, Canon, Nikon, Pentax, and the other players in the DSC market are behind what Apple, Samsung, Google, and Huawei can do. Thanks to their hardware advantages, the larger cameras don’t actually need the same level of pixel processing as smartphones to produce great images, but there is no denying that the performance gap between smartphones and DSLRs is narrowing.

In the coming years we will likely see more 3D-sensing components on smartphone cameras, such as time-of-flight or dual-pixel sensors, as they can help further improve computational bokeh effects, portrait perspective, face lighting, and other image characteristics that benefit from depth data. With the Light L16 camera paving the way for multiple-focal-length camera architectures, it would not be surprising to see smartphone manufacturers go down the same road. With a mix of Bayer and black-and-white sensors, such multi-camera devices could focus on texture and noise or provide more user flexibility through specialized lenses such as telephoto, super-wide-angle, and fisheye. Efficient algorithms for fusing data from all these different components and sensors will be a key element in the imaging pipeline.

In short, there will be challenges ahead, but looking at the current crop of high-end mobile products, manufacturers are well-equipped to take them on and lift smartphone image quality and camera performance to new heights in the near future.

If you would like to dive deeper into the reviews and scoring of individual smartphone cameras, please find below a list of links to the reviews for all devices mentioned in this article:

- Nokia 808 PureView

- Apple iPhone 5s

- Samsung Galaxy S5

- Apple iPhone 6

- Samsung Galaxy S6 Edge

- Google Nexus 6P

- Samsung Galaxy S7 Edge

- Apple iPhone 7 Plus

- Google Pixel

- Apple iPhone 8 Plus

- Samsung Galaxy Note 8

- Google Pixel 2

- Huawei Mate 10 Pro

- Apple iPhone X
